I've known Greg Shove, the CEO of Section and author of Personal Math, for over 30 years. We met when I was chairman of RedEnvelope (an e-commerce firm). Since then Greg has started and sold three companies. Section (Disclosure: founder/investor) helps upskill enterprises for AI. This week, we asked Greg to create a cheat sheet on how to get personal ROI from AI.

Thought Partner

By Greg Shove

This is the summer of AI discontent.

In the last few weeks, VCs, pundits, and the media have gone from overpromising on AI to raising the alarm: Too much money has been poured in, and enterprise adoption is faltering. It's a bubble that was overhyped all along. If the computer is what Steve Jobs called the "bicycle for our minds," AI was supposed to be our strongest pedaling partner. It was going to fix education, accelerate drug discovery, and find climate solutions. Instead, we got sex chatbots and suggestions to put glue on pizza.

Investors should be concerned. Sequoia says AI will need to bring in $600 billion in revenue to outpace the cost of the tech. As of their most recent earnings announcements, Apple, Google, Meta, and Microsoft are estimated to generate a combined $40 billion in revenue from AI. That leaves a big gap. At the same time, the leading AI models still don't work reliably - and they're prone to ketamine-like hallucinations, Silicon Valley-speak for "they make shit up." Sam Altman admits ChatGPT-4 is "mildly embarrassing at best," but he's also pumping up expectations for GPT-5.

Unless you're an investor or an AI entrepreneur, though, none of this really matters. Let's refocus: For the first time, we can talk to computers in our language and get answers that usually make sense. We have a personal assistant and adviser in our pocket, and it costs $20 a month. This is the Star Trek future, imagined 58 years ago - and it's just getting started.

If you're using "AI is a bubble" as an excuse to ignore these capabilities, you're making a big mistake. Don't laugh. I know Silicon Valley tech bros need a win after their NFT/metaverse consensual hallucination. And as you're likely not reading this on a Meta Quest 3 purchased with Dogecoin, your skepticism is warranted.

Super Soldier Serum

For all the hype of AI, few are getting tangible ROI from it. OpenAI has an estimated 100 million monthly active users worldwide. That sounds like a lot, but it's only about 10% of the global knowledge workforce.

More people may have tried ChatGPT, but few are power users, and the number of active users is flatlining. Most people "bounce off": they ask a few questions, get some nonsense answers, and return to Google. They mistake GPT for "Better Google." "Better Google" is Google. Using AI as a search engine is like using a screwdriver to bang down a nail. It could work, but not well. That's what Bing is for.

The reason people make this mistake? Few have discovered AI's premier use case: as a thought partner.

I've personally taught more than 2,000 early adopters about AI (and Section has taught over 15,000). Most of them use AI as an assistant - summarizing documents or contracts, writing first drafts, transcribing or translating documents, etc.

But very few people are using AI to "think." When I talk to those who do, they share that use case almost like a secret. They're amazed AI can act as a trusted adviser - and reliably gut-check decisions, pre-empt the boss's feedback, or outline options.

Last year, Boston Consulting Group, Harvard Business School, and Wharton released a study that compared two groups of BCG consultants - those with access to AI and those without. The consultants with AI completed 12% more tasks and did so 25% faster. They also produced results their bosses thought were 40% better. Consultants are thought partners, and AI is Super Soldier Serum.

Smart people are quick to dismiss AI as a cognitive teammate. They think it can automate call center operators - but not them, because they're further up the knowledge-work food chain. But if AI can make a BCG consultant 40% stronger, why not most of the knowledge workforce? Why not a CEO? Why not you?

AI vs. the Board

Last fall I started asking AI to act like a board member and critique my presentations before I sent them to the Section directors.

Even for a long-time CEO, presenting to the board is a test you always want to ace. We're blessed with a world-class board of investors and operators, including former CEOs of Time Warner and Akamai. Also: Scott. I try to anticipate their questions, both to prepare for the meeting and to inform my operating decisions.

I prompt the AI: "I'm the CEO of Section. This is the board meeting pre-read deck. Pretend to be a hard-charging venture capitalist board member expecting strong growth. Give me three insights and three recommendations about our progress and plan."

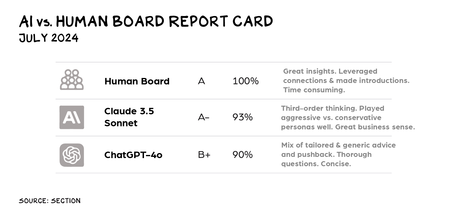

Claude's and ChatGPT-4o's performance was breathtaking. The AI returned 90% of the same comments and insights our human board raised (we compared notes). It suggested the same priorities the board did, with the associated tradeoffs - including driving enterprise value, balancing growth against cash runway, and taking on more technology risk.

Since then, I've used AI to prepare for every board meeting. Every time, AI has close to a 90% match with the board's feedback. At a minimum, it helps me know most of what Scott is going to say before he says it. A free gift with purchase? The AI is nicer, doesn't check its phone, and usually approves management comp increases. Let's call it a draw.

Think of what this could mean for any of your high-brainpower work. Less stress, knowing you didn't overlook obvious angles or issues. A quick gut-check to anticipate questions and develop decent answers (which you will improve). And a thought partner to point out your blind spots - risks you forgot to consider or unintended consequences you didn't think of. Whether you're interviewing for a job, applying to business school, or seeking asylum, I can't imagine preparing without having the AI role-play the other side.

Other scenarios where AI has helped as my thought partner:

- Discussing the pros and cons of going into a real estate project with several friends as co-investors.

- Uploading my MRI and getting a summary of all my surgical options for fixing my busted ankle - so I can hold my own with my overconfident, time-starved Stanford docs.

- Doing industry and company research to evaluate a startup investment opportunity.

Right now, the "smartest people in the room" think they're "above" AI. Soon, I think they'll be bragging about using it. And they should. Why would anyone hire a doctor, lawyer, or consultant who's slower and dumber than their peers? Would you hire someone with a fax number on their business card? As Scott says: "AI won't take your job, but someone who understands AI will."

How to Use AI as a Thought Partner

- Ask for ideas, not answers. If you ask for an answer, it will give you one (and probably not a very good one). As a thought partner, it's better equipped to give you ideas, feedback, and other things to consider. Try to maintain an open-ended conversation that keeps evolving, rather than rushing to an answer.

- More context is better. The trick is to give AI enough context to start making associations. Having a "generic" conversation will give you generic output. Give it enough specific information to help it create specific responses (your company valuation, your marketing budget, your boss's negative feedback about your last idea, an MRI of your ankle). And then take the conversation in different directions.

- Ask AI to run your problems through decision frameworks. Massive amounts of knowledge are stored in LLMs, so don't hesitate to have the model explain concepts to you. Ask, "How would a CFO tackle this problem?" or "What are two frameworks CEOs have used to think about this?" Then have a conversation with the AI unpacking these answers.

- Ask it to adopt a persona. "If Brian Chesky and Elon Musk were co-CEOs, what remote work policies would they put in place for the management team?" That's a question Google could never answer, but an LLM will respond to without hesitation.

- Make the AI explain and defend its ideas. Say, "Why did you give that answer?" "Are there any other options you can offer?" "What might be a weakness in the approach you're suggesting?"

- Give it your data. Upload your PDFs - business plans, strategy memos, household budgets - and talk to the AI about your unique data and situation. If you're concerned about privacy, go to Data Controls in your ChatGPT settings and turn off its ability to train on your data.

When you work this way, the possibilities are endless. Take financial planning. I can now upload my entire financial profile (assets, liabilities, income, spending habits, W-2, tax return) and start a conversation about risk, where I'm missing opportunities for asymmetric upside, how to reach my financial goals, the easiest ways to save money, how to be more tax-efficient, etc.

The financial adviser across the table doesn't (in my view) stand a chance - she's incentivized to put you into high-fee products and doesn't have a billionth of the knowledge and case studies of an LLM. She's also at a huge disadvantage, because the person in front of her (you) is self-conscious and unlikely to be totally direct or honest: "I'm planning to leave my husband this fall."

Coach in Your Pocket

We all crave access to experts. It's why people show up to hear Scott speak. It's why someone once paid $19 million for a private lunch with Warren Buffett. It's why, despite all the bad press about their ethics and ineffectiveness, consulting firms continue to raise their fees and grow.

But most of us can't afford that level of human expertise. And the crazy thing is, we're overvaluing it anyway. McKinsey consultants are smart, credentialed people. But they can only present you with one worldview, and it comes with biases: toward creating problems only they can solve with additional engagements, and toward pleasing whoever holds the budget for follow-on work. AI is a nearly free expert with 24/7 availability, a staggering range of expertise, and - most importantly - inhumanity. It doesn't care whether you like it, hire it, or find it attractive; it just wants to address the task or query at hand. And it's getting better.

The hardest part of working with AI isn't learning to prompt. It's managing your own ego and admitting you could use some help and that the world will pass you by if you don't learn how to use a computer, PowerPoint ... AI. So get over your immediate defense mechanism - "AI can never do what I do" - and use it to do what you do, just better. There is an invading army in business: technology. Its weapons are modern-day tanks, drones, and supersonic aircraft. Do you really want to ride into battle on horseback?

P.S. Last week Scott spoke with Stanford professor and podcast host Andrew Huberman about the most important things we need to know about our physiological health. Listen on Apple Podcasts or Spotify.