If you read this and think 'hey, this sounds like something they do in [insert teaching method here]', please let me know. I've had some chats about the Montessori method, and there's certainly overlap there. But I'm on the hunt for a literature I can connect to, and any help would be appreciated.

I've been thinking a lot about education lately. I'm home-schooling the kids, I've been chatting a lot with coaches about ecological approaches to their teaching (most publicly here and here), and I'm reading Tim Ingold's Anthropology And/As Education. I'm also wondering why the demonstrated success of the ecological dynamics approach in sports pedagogy has had zero consequences for education more broadly.

I think a couple of things. I think the reason why ecological dynamics hasn't spilled over is that we live in a dualist world where knowledge and physical skills are two distinct domains (think about how physical education is treated in schools). I also think that because the ecological approach doesn't endorse that dualism, there is simply no reason for classroom education to work completely differently from physical education. And finally, I think this might be really, really important.

I used to teach a module called Foundation Research Methods, and after a while I finally realised that I was teaching it in a constraints-based, ecological dynamics style. (This explains why a lot of my colleagues were genuinely confused by what I was doing at times, I think!) The module developed over the years, and in the last year I taught it we solved our attendance problem and the students crushed the exam.

I want to walk through what I did, and reflect on how it embodied an ecological approach. This is not me saying this is how all classes should be taught. This is just me laying out what a constraints-based approach looked like in the class, what I thought worked, and what I would like to have done next.

The Class

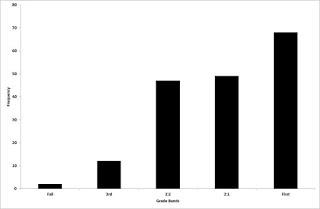

The module was called Foundation Research Methods. It was a first semester, first year module for Psychology undergraduates, and it led them through the basics of study design and data analysis (from means and standard deviations to one-way ANOVA). My goal was to take 180-200 students with a mix of experience in statistical thinking and get them all to the point where they could all be on the same page and build on their skills in the follow-up Intermediate and Advanced Research Methods modules. Like most psychology statistics classes, this had originally been taught with hour long lectures for the whole group, separate workshops to practice SPSS, and practical sessions on study design and research ethics. I actually inherited the design I'll tell you about from Sabrina, who rebuilt the module before a maternity leave. I took the module over and ran it for 5 years.Figure 1. The organisation of FRM in 2018

The new design worked as follows, over 12 weeks:

- In Week 1 I gave them all an introductory lecture where I explained the module and the way it would run.

- Every week there were 5 or 6 identical 90-minute sessions, each for 30 students. I usually taught 3 or 4 of these, with a GTA running the remainder. Sessions took place in a computer lab.

- The first 30 minutes or so was taken up by me giving a mini-lecture in which I set the scene for the day's activities. I'd introduce the topic, and provide information and context about why we were doing this and how it fit into the developing module.

- The next 60 minutes had the students work on a task based on the day's topic that had them using SPSS (and eventually JASP as well) and data sets.

- There were also 4 weeks where there were multiple small group practical sessions, where I had them draft and mark components of lab reports and engage with research ethics basics.

- Assessment varied over the years; there was generally an MCQ to assess basic knowledge, there was sometimes a lab report based on an experiment they had taken part in, and sometimes there was a reflective report where they took part in studies for credit and wrote about what the study design was like to experience as a participant.

General Principles of Delivery

Each week built on the previous weeks. Week 1 covered measures of central tendency, then Week 2 did that again and added standard deviations, then Week 3 did those plus various kinds of variables, and so on. The workshop activities embodied this structure too - every week the activity would have them do everything, in order, that they had already covered, and then add that week's new thing.

The lectures did not ever tell them how to, say, run a t-test. Instead, I would explain what t-tests were for, the nature of the question that they could answer, and how they fit into the general statistical framework I was building over the module.

The workshop activities also never told them how to run the t-test. Instead, activities included finding where in the textbook (Andy Field's Discovering Statistics Using SPSS) they could find step-by-step instructions, and linking to YouTube walkthroughs on running the test in SPSS.

A GTA and I would wander the lab, answering questions and keeping the students on the right path. Again, we would never tell them how to run SPSS. Instead, we would ask them questions such as 'did you find the page in the textbook yet?' and if not, help them with that. If they had questions about vocabulary that was just blocking their progress we'd help directly, but generally our job was to keep them constrained to working within the range of the day's activity.

I also actively encouraged the students to work together in groups. If one had solved a problem, I would often direct a student with that question to the other student. I'd encourage them to share resources, on the premise that success in the module was not a zero-sum game.

How It Went

The students were always a bit nervous at the start, but this was more 'new psychology undergrads scared of statistics' than 'confused by the module format'. I had the advantage that mine was one of their first modules, so I got to set the rules and didn't have to fight against experience with other university-level modules.

Every year, something didn't work, but every year, I fixed that problem and it never came back. These problems were mostly student experience problems, rather than content problems. One example was that I saw their progress, but they couldn't. Every week they experienced running into something they didn't know how to do, and as a result every week it felt like they weren't getting anywhere. From my perspective, though, I could see their progress - every week the class would be quiet as they cracked on and did all the parts of the activity that were material from previous weeks, and they would only start talking and asking questions when they hit the new thing. After I realised what their first-person experience was, every year following I made a point to explicitly draw their attention a few times to their progress, and that problem never repeated.

Most years, the MCQ performed just fine; a nice normally distributed grade curve centred on 58-62% (in the UK this is the boundary between a 2:2 and a 2:1, so it's a sensible mean). My final year teaching this, though, we solved our attendance problem. The course team as a whole had been working on this for a while, and nothing had worked until the year we took an attendance register for all small teaching sessions. I literally called out names and marked people present or absent, like school. Because FRM was small group teaching, I got to do this for all the sessions, and attendance stayed good at around 80%+ for the whole semester. And that year, they destroyed my exam. Only two fails, and a huge proportion of great scores.

Figure 2. The insanely skewed grade distribution for FRM