The idea of technical SEO is to minimize the work of bots when they come to your website to index it on Google and Bing. Look at the build, the crawl and the rendering of the site.

Tools Required:

- SEO Crawler such as Screaming Frog or DeepCrawl

- Log File Analyzer – Screaming Frog has this too

- Developer Tools – such as the ones found in Google Chrome (View > Developer > Developer Tools)

- Web Developer Toolbar – gives you the ability to turn off JavaScript

- Search Console

- Bing Webmaster Tools – shows you geotargeting behaviour, gives you a second opinion on security etc.

- Google Analytics – with on-site search tracking*

*Great for tailoring copy and pages. Just turn it on and add the query parameter your site search uses (on WordPress, for example, it’s `s`).

Tech SEO 1 – The Website Build & Setup

The website setup – a neglected element of many SEO tech audits.

- Storage

Do you have enough storage for your website now and in the near future? You can work this out by taking your average page size (times 1.5 to be safe), multiplied by the number of pages and posts, multiplied by (1 + growth rate/100).

For example, take a site with an average page size of 1 MB, 500 pages and an annual growth rate of 50%:

1 MB × 1.5 × 500 × 1.5 = 1,125 MB of storage required for the year.

You don’t want to be held to ransom by a web host because you’ve gone over your storage limit.
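The storage rule of thumb can be written as a small Python function. This is a sketch. The function name is illustrative, the default 1.5 safety factor mirrors the formula in the text, and sizes are assumed to be in MB. The example calls use 50% growth, which is what a final ×1.5 multiplier corresponds to. With 150% growth, the multiplier becomes 2.5.

```python
def storage_required_mb(avg_page_mb: float, pages: int,
                        annual_growth_pct: float, safety_factor: float = 1.5) -> float:
    """Estimate the storage (MB) needed for the coming year.

    avg_page_mb       -- average page size in MB
    pages             -- current number of pages and posts
    annual_growth_pct -- expected growth in content over the year, in percent
    safety_factor     -- headroom multiplier on the page size (1.5 as in the text)
    """
    growth_multiplier = 1 + annual_growth_pct / 100
    return avg_page_mb * safety_factor * pages * growth_multiplier


if __name__ == "__main__":
    # 1 MB pages, 500 pages, 50% growth: 1 x 1.5 x 500 x 1.5
    print(storage_required_mb(1, 500, 50))   # 1125.0
    # 150% growth: the growth multiplier is 2.5 rather than 1.5
    print(storage_required_mb(1, 500, 150))  # 1875.0
```

Plug in your own averages from a crawl export. If you're unsure of the growth rate, err on the high side.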

- How is your site logging data?

Before we think about web analytics, think about how your site is storing data.

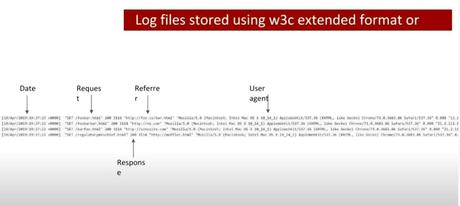

As a minimum, your site should be logging the date and time, the request, the referrer, the response (status code) and the user agent. This is in line with the W3C Extended Log File Format.

In other words, log when the request was made, what was requested, where it came from, how the server responded and whether it was a browser or a bot that came to your site.
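If your server writes W3C Extended logs (IIS does by default), the columns are declared in a `#Fields:` directive at the top of each file. That makes it easy to check that the minimum data set above is being captured. This is a sketch. The identifiers are standard W3C field names, but the required set is an assumption you should adapt to your setup. Apache-style "combined" logs don't use `#Fields:` at all, so this check doesn't apply to them.

```python
# W3C Extended Log File Format field identifiers for the minimum data set.
REQUIRED_FIELDS = (
    "date",            # when
    "time",            # when
    "cs-uri-stem",     # what was requested
    "cs(Referer)",     # where it came from
    "sc-status",       # how the server responded
    "cs(User-Agent)",  # browser or bot
)


def missing_log_fields(log_lines):
    """Return the required fields not declared in the log's first #Fields: directive."""
    for line in log_lines:
        if line.startswith("#Fields:"):
            declared = set(line[len("#Fields:"):].split())
            return [field for field in REQUIRED_FIELDS if field not in declared]
    # No #Fields: directive found, so none of the fields can be confirmed.
    return list(REQUIRED_FIELDS)


if __name__ == "__main__":
    sample = [
        "#Software: Microsoft Internet Information Services 10.0",
        "#Fields: date time cs-method cs-uri-stem sc-status cs(User-Agent)",
        "2023-10-10 13:55:36 GET /blog/ 200 Mozilla/5.0+(compatible;+Googlebot/2.1)",
    ]
    print(missing_log_fields(sample))  # ['cs(Referer)']
```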

- Blog Post Publishing

Can authors and copywriters add meta titles, descriptions and schema easily? Some websites require a ‘code release’ to allow authors to add a meta description.

- Site Maintenance & Updates – Accessibility & Permissions

Along with the meta stuff – how much access does each user have to the code and backend of a website? How are permissions built in?

This could and probably should be tailored to each team and their skillset.

For example, can an author of a blog post easily compress an image?

Can the same author update a menu? (Often not a good idea.)

Who can access the server to tune server performance?

Tech SEO 2 – The Crawl

- Google Index

Carry out a site: search and compare the number of pages with the number found in a Screaming Frog crawl.

With a site: search (for example, search Google for site:businessdaduk.com), don’t trust the number of results Google says it has found. Instead, scrape the SERPs using Python or Linkclump and count the URLs yourself.

Too many or too few URLs being indexed – both suggest there is a problem.
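Once you have one list of URLs from your crawl export and another scraped from the site: results, a set comparison shows where they differ. This is a minimal sketch. The light normalisation (lower-case scheme and host, trailing slash and #fragment dropped) is an assumption, so match it to how your site actually builds URLs.

```python
from urllib.parse import urlsplit, urlunsplit


def normalise(url: str) -> str:
    """Lower-case scheme and host, drop a trailing slash and any #fragment."""
    parts = urlsplit(url.strip())
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, parts.query, ""))


def compare_index(crawled, indexed):
    """Return (crawled but not indexed, indexed but not crawled) as sorted lists."""
    crawled_set = {normalise(u) for u in crawled}
    indexed_set = {normalise(u) for u in indexed}
    return sorted(crawled_set - indexed_set), sorted(indexed_set - crawled_set)


if __name__ == "__main__":
    crawl = ["https://example.com/", "https://example.com/blog/", "https://example.com/about"]
    serps = ["https://example.com", "https://example.com/blog", "https://example.com/old-page"]
    not_indexed, not_crawled = compare_index(crawl, serps)
    print(not_indexed)  # ['https://example.com/about']
    print(not_crawled)  # ['https://example.com/old-page']
```

Pages that were crawled but not indexed point to quality or crawlability problems. Pages that are indexed but weren't crawled often turn out to be old URLs, parameter variants or orphan pages.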

- Correct Files in Place – e.g. Robots.txt

Check these files carefully. Google says stray spaces are not an issue in robots.txt files, but many developers and SEOs suggest otherwise.
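Python's standard library includes a robots.txt parser you can use to check quickly which URLs a given user agent may fetch. Treat it as a rough check only. `urllib.robotparser` applies the first matching rule in file order, whereas Google uses the most specific (longest) matching rule. The results can therefore differ when Allow and Disallow rules overlap. The rules and URLs below are placeholders.

```python
from urllib.robotparser import RobotFileParser


def check_robots(robots_txt: str, user_agent: str, urls):
    """Return {url: allowed?} for each URL under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(user_agent, url) for url in urls}


if __name__ == "__main__":
    rules = """
User-agent: *
Disallow: /wp-admin/
Disallow: /cart/
"""
    results = check_robots(rules, "Googlebot",
                           ["https://example.com/blog/post", "https://example.com/cart/checkout"])
    for url, allowed in results.items():
        print(url, "allowed" if allowed else "blocked")
```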

XML sitemaps also need to be correct, in place and submitted to Search Console. Be careful with the `<lastmod>` tag. Lots of websites include lastmod but don’t update it when they update a page or post.
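To catch stale lastmod values, compare each sitemap entry against the date the page was really last edited. This is a minimal sketch. It parses the sitemap with the standard library and assumes you can export real edit dates from your CMS. The `cms_edits` dict below is hypothetical sample data.

```python
import xml.etree.ElementTree as ET
from datetime import date

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_lastmods(xml_text: str) -> dict:
    """Map each <loc> to its <lastmod> date (None when lastmod is missing)."""
    root = ET.fromstring(xml_text)
    result = {}
    for url in root.findall("sm:url", NS):
        loc = url.findtext("sm:loc", default="", namespaces=NS).strip()
        lastmod = url.findtext("sm:lastmod", default=None, namespaces=NS)
        # lastmod may be a full W3C datetime; the first 10 characters are the date.
        result[loc] = date.fromisoformat(lastmod.strip()[:10]) if lastmod else None
    return result


def stale_entries(lastmods: dict, real_edits: dict) -> list:
    """URLs whose sitemap lastmod is missing or older than the real last edit."""
    return sorted(
        loc for loc, edited in real_edits.items()
        if loc in lastmods and (lastmods[loc] is None or lastmods[loc] < edited)
    )


if __name__ == "__main__":
    sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/a</loc><lastmod>2023-01-10</lastmod></url>
  <url><loc>https://example.com/b</loc><lastmod>2023-05-01T09:00:00+00:00</lastmod></url>
  <url><loc>https://example.com/c</loc></url>
</urlset>"""
    cms_edits = {  # hypothetical export of real edit dates
        "https://example.com/a": date(2023, 3, 2),
        "https://example.com/b": date(2023, 4, 20),
        "https://example.com/c": date(2023, 1, 1),
    }
    print(stale_entries(sitemap_lastmods(sitemap), cms_edits))
    # ['https://example.com/a', 'https://example.com/c']
```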

- Response Codes

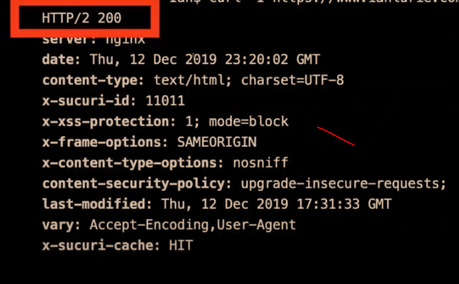

Checking response codes with a browser plugin or Screaming Frog works 99% of the time, but to go next level, try curl on the command line. curl doesn’t run JavaScript and shows you the raw response headers.

Type curl -I followed by the URL,

e.g.

curl -I https://businessdaduk.com/

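curl comes preinstalled on macOS, most Linux distributions and Windows 10 and later. Otherwise you’ll need to download it, which can be a ball ache if you need IT’s permission etc.

Anyway, once you run it, the response starts with a status line (for example HTTP/1.1 200 OK or HTTP/2 200), followed by the response headers.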

Next, enter an incorrect URL and make sure it returns a 404, not a 200 or a redirect to the homepage.
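If you'd rather script these checks than run curl by hand, a HEAD request with Python's `http.client` does a similar job. Like `curl -I`, it doesn't follow redirects or run JavaScript, so you see the status the server actually sends. This is a sketch. The function name is illustrative, and some servers answer HEAD differently from GET.

```python
import http.client
from urllib.parse import urlsplit


def status_of(url: str, timeout: float = 10.0):
    """Send a HEAD request (no redirects followed) and return (status, Location header)."""
    parts = urlsplit(url)
    conn_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                else http.client.HTTPConnection)
    conn = conn_cls(parts.netloc, timeout=timeout)
    try:
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        conn.request("HEAD", path)
        resp = conn.getresponse()
        return resp.status, resp.getheader("Location")
    finally:
        conn.close()
```

For example, `status_of("https://businessdaduk.com/")` should report 200. A made-up path such as `/this-page-should-not-exist` should report 404. A 200 there suggests a "soft 404", and a 301 with a Location header means the server is redirecting missing pages.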

- Canonical URLs

Each ‘resource’ should have a single canonical address.

Common causes of canonical issues include sharing/shortened URLs, tracking URLs and product option parameters.
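One way to see how many addresses a single resource has picked up is to strip the usual tracking parameters and group the URLs that collapse to the same thing. This is a sketch. It assumes your tracking parameters are the common `utm_*`, `gclid` and `fbclid` ones. Product option parameters are site-specific, so add yours to the set.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Assumed tracking parameters (plus anything starting utm_); extend for your site.
TRACKING_PARAMS = {"gclid", "fbclid"}


def strip_tracking(url: str) -> str:
    """Remove utm_* and other tracking parameters (and any #fragment) from a URL."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
            if not k.lower().startswith("utm_") and k.lower() not in TRACKING_PARAMS]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def group_by_canonical(urls):
    """Return {stripped URL: variants} for addresses that have more than one variant."""
    groups = {}
    for url in urls:
        groups.setdefault(strip_tracking(url), []).append(url)
    return {canon: variants for canon, variants in groups.items() if len(variants) > 1}


if __name__ == "__main__":
    seen = [
        "https://example.com/shoes?utm_source=newsletter",
        "https://example.com/shoes?gclid=abc",
        "https://example.com/shoes",
        "https://example.com/hats?colour=red",
    ]
    for canon, variants in group_by_canonical(seen).items():
        print(canon, len(variants))  # https://example.com/shoes 3
```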

The best way to check for canonical issues is to look at how bots actually crawl the site, and you do this by checking your log files.

You can import and analyse log files with Screaming Frog. The free version lets you analyse up to 1,000 log events (at the time of writing).

Most of the time, your host will keep your log files in cPanel, in a section named something like “Raw Access”. The files are normally compressed with gzip, so you might need software to unzip or open them. Often, though, you can just drag and drop the files straight into Screaming Frog.

The Screaming Frog Log File Analyser is a separate download from the SEO site crawler: https://www.screamingfrog.co.uk/log-file-analyser/

If your log files run to tens of millions of lines, you might need to go next-level nerd and use grep on the Linux command line.
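As an alternative to grep, a short Python script can stream gzipped logs line by line without unzipping them to disk, much as `zgrep Googlebot access.log.gz` would. This sketch assumes the Apache/Nginx "combined" log format, so check a few lines of your own files first. Matching "Googlebot" in the user agent doesn't prove a request came from Google, because anyone can fake the user agent. Treat the counts as indicative.

```python
import gzip
import re
from collections import Counter

# Apache/Nginx "combined" log format (an assumption; adjust to your server's format).
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)


def bot_status_counts(lines, bot="Googlebot"):
    """Count (status, path) pairs for requests whose user agent mentions `bot`."""
    counts = Counter()
    for line in lines:
        m = COMBINED.match(line)
        if m and bot in m.group("agent"):
            counts[(m.group("status"), m.group("path"))] += 1
    return counts


def read_log(path):
    """Yield lines from a plain or .gz log file without loading it all into memory."""
    opener = gzip.open if path.endswith(".gz") else open
    with opener(path, "rt", encoding="utf-8", errors="replace") as handle:
        yield from handle
```

For example, `bot_status_counts(read_log("access.log.gz")).most_common(20)` lists the URLs Googlebot hits most, with their status codes. The file name is a placeholder. Lots of bot hits on parameter or tracking URLs is a classic sign of canonical trouble.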

Read more about all things log file analysis-y on Ian Lurie’s Blog here.

This video tutorial about Linux might also be handy. I’ve stuck Linux on my brother’s old laptop. Probably should have asked first.

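When adding tracking to product URLs and similar pages, use a # fragment instead of a ? query parameter. Search engines generally ignore everything after the #, so the tracked link doesn’t create a second address for the same page.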
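Here's the difference in practice. Everything after `#` is a fragment, which the browser doesn't send to the server, so the page keeps a single crawlable address. This is a sketch only, and the parameter names are examples. It also assumes your analytics setup is configured to read campaign values from the fragment, which isn't the default behaviour for every tool.

```python
from urllib.parse import urlencode, urldefrag


def add_tracking_fragment(url: str, **params: str) -> str:
    """Append tracking values after '#' so the crawlable URL stays the same."""
    base, _ = urldefrag(url)  # drop any existing fragment first
    return f"{base}#{urlencode(params)}"


if __name__ == "__main__":
    tracked = add_tracking_fragment("https://example.com/product/123",
                                    utm_source="newsletter", utm_medium="email")
    print(tracked)
    # https://example.com/product/123#utm_source=newsletter&utm_medium=email
    print(urldefrag(tracked).url)
    # https://example.com/product/123  (the address a crawler requests)
```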

rel=canonical is a hint, not a directive. It’s a workaround rather than a solution.

DYK the rel-canonical is a hint, not a directive? It's a very strong hint, but it may still be outweighed by other signals pic.twitter.com/Aqkg4HmfnF

— Gary 鯨理/경리 Illyes (@methode) March 23, 2017

Remember also that a canonical sent in the server’s HTTP response header (a Link header with rel="canonical") can override the canonical tag in the page.

You can check your server headers using this tool – http://www.rexswain.com/httpview.html (at your own risk like)
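A canonical can arrive in two places: a `Link` HTTP response header and a `<link rel="canonical">` tag in the HTML. The sketch below pulls both out of a response you've already fetched (for example with `curl -i`) and flags a mismatch. It is a simplified parser. It assumes one canonical per source and doesn't cover every edge case of the Link header syntax.

```python
import re
from html.parser import HTMLParser

# Matches "<url>; rel=canonical" or '<url>; rel="canonical"' inside a Link header.
LINK_CANONICAL = re.compile(r'<([^>]+)>\s*;[^,]*\brel="?canonical"?', re.IGNORECASE)


def canonical_from_header(link_header: str):
    """Return the URL from a Link header entry with rel=canonical, if present."""
    m = LINK_CANONICAL.search(link_header or "")
    return m.group(1) if m else None


class _CanonicalFinder(HTMLParser):
    """Record the href of the first <link rel="canonical"> in the HTML."""

    def __init__(self):
        super().__init__()
        self.href = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if (tag == "link" and self.href is None
                and (attrs.get("rel") or "").lower() == "canonical"):
            self.href = attrs.get("href")


def canonical_from_html(html: str):
    finder = _CanonicalFinder()
    finder.feed(html)
    return finder.href


def canonical_conflict(link_header: str, html: str):
    """Return (header_canonical, html_canonical) if both exist and differ, else None."""
    header, tag = canonical_from_header(link_header), canonical_from_html(html)
    if header and tag and header != tag:
        return header, tag
    return None
```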

Tech SEO 3 – Rendering & Speed

- Lighthouse

Use Lighthouse, either from the command line or in a browser with no add-ons installed, since add-ons can skew the results. If you’re not into Linux and the command line, use Pingdom, GTmetrix and Lighthouse, again ideally in a browser with no add-ons.
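If you run Lighthouse from the command line (for example `lighthouse https://example.com --output=json --output-path=./report.json`), you get a JSON report you can summarise in a script. That's handy for comparing runs. This is a sketch. The `categories.performance.score` and `audits[...].numericValue` keys come from Lighthouse's JSON report, but audit IDs change between versions, so missing ones come back as None rather than raising an error.

```python
import json


def summarise_lighthouse(report: dict) -> dict:
    """Pull the performance score (0-100) and a few lab metrics (ms) from a Lighthouse report."""
    score = report.get("categories", {}).get("performance", {}).get("score")
    audits = report.get("audits", {})

    def metric(audit_id):
        # numericValue is in milliseconds for these timing audits.
        return audits.get(audit_id, {}).get("numericValue")

    return {
        "performance": round(score * 100) if score is not None else None,
        "lcp_ms": metric("largest-contentful-paint"),
        "tbt_ms": metric("total-blocking-time"),
        "fcp_ms": metric("first-contentful-paint"),
    }


def load_report(path: str) -> dict:
    """Read a Lighthouse JSON report written with --output-path."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)
```

For example, `summarise_lighthouse(load_report("report.json"))` gives a compact dict you can log after each release.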

Look out for too much code, but also for invalid code. This might include image alt attributes that aren’t marked up properly. Some plugins output just alt rather than alt="blah".
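A quick way to spot badly marked-up alt attributes is to scan the HTML for `<img>` tags whose alt is missing, bare or empty. This is a minimal sketch using Python's built-in HTML parser. An empty alt is legitimate for purely decorative images, so review the results rather than fixing them blindly.

```python
from html.parser import HTMLParser


class AltAudit(HTMLParser):
    """Collect <img> tags with a missing, bare (alt) or empty (alt="") alt attribute."""

    def __init__(self):
        super().__init__()
        self.problems = []  # (src, issue) pairs

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attrs = dict(attrs)
        src = attrs.get("src", "?")
        if "alt" not in attrs:
            self.problems.append((src, "missing alt"))
        elif attrs["alt"] is None:  # written as a bare `alt` with no value
            self.problems.append((src, "bare alt with no value"))
        elif not attrs["alt"].strip():
            self.problems.append((src, "empty alt"))


def audit_alts(html: str):
    parser = AltAudit()
    parser.feed(html)
    return parser.problems


if __name__ == "__main__":
    page = '<img src="a.jpg" alt="Red shoes"><img src="b.jpg" alt><img src="c.jpg">'
    for src, issue in audit_alts(page):
        print(src, issue)
```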

- JavaScript

Despite what Google says, all the SEO professionals whose work I follow say that client-side JS is still a site speed problem and a potential ranking factor. Only use JS where you need it, and render it server-side where you can.

Use a browser add-on that lets you turn off JS, then check that your site is still fully functional.
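You can run a similar check from a script. A plain HTTP fetch doesn't execute JavaScript, so the raw HTML approximates what a non-rendering bot sees on first pass. This is a sketch. The phrases you check for are up to you (the ones below are placeholders), and the user-agent string is an arbitrary example.

```python
import urllib.request


def fetch_raw_html(url: str, timeout: float = 10.0) -> str:
    """Fetch a page's HTML without running any JavaScript."""
    req = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0 (seo-check)"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def missing_without_js(html: str, must_have) -> list:
    """Return the key phrases (nav, headings, copy) absent from the raw HTML."""
    lowered = html.lower()
    return [phrase for phrase in must_have if phrase.lower() not in lowered]
```

For example, `missing_without_js(fetch_raw_html("https://businessdaduk.com/"), ["<h1", "<nav", "Contact"])` returns an empty list if the heading, navigation and contact link are all in the HTML before any JS runs.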

- Schema

Finally, and possibly in the wrong place down here, use Screaming Frog or DeepCrawl to check that your schema markup is correct.

You can add schema using the Yoast or Rank Math SEO plugins.
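Alongside a crawler, you can sanity-check JSON-LD schema yourself. Extract each `<script type="application/ld+json">` block, then confirm it parses as JSON and has `@context` and `@type`. This sketch checks syntax only. It doesn't validate against schema.org or Google's rich result requirements; Google's Rich Results Test and the Schema Markup Validator cover that.

```python
import json
from html.parser import HTMLParser


class _JsonLdExtractor(HTMLParser):
    """Collect the text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._in_jsonld = False

    def handle_starttag(self, tag, attrs):
        if tag == "script" and (dict(attrs).get("type") or "").lower() == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data


def check_jsonld(html: str) -> list:
    """Return problems found in the page's JSON-LD blocks (empty list = none found)."""
    extractor = _JsonLdExtractor()
    extractor.feed(html)
    problems = []
    for i, raw in enumerate(extractor.blocks, start=1):
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            problems.append(f"block {i}: invalid JSON ({exc.msg})")
            continue
        items = data if isinstance(data, list) else [data]
        for item in items:
            if not isinstance(item, dict):
                problems.append(f"block {i}: expected a JSON object")
                continue
            # A top-level @graph (as Yoast outputs) holds the typed items itself.
            required = ("@context",) if "@graph" in item else ("@context", "@type")
            for key in required:
                if key not in item:
                    problems.append(f"block {i}: missing {key}")
    return problems
```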

The Actual Tech SEO Checklist (Without Waffle)

Site Speed

Tools – Lighthouse, GTMetrix, Pingdom

Check – image size, domain & HTTP requests, code bloat, JavaScript use, optimal CSS delivery, code minification, browser caching, reduce redirects, reduce errors like 404s.

Site UX

Check – Mobile-Friendly Test, site speed, time to interactive, consistent UX across devices and browsers.

Clean URLs

Image from Blogspot.com

Make sure URLs include a keyword, are short and use dashes/hyphens to separate words.
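If your CMS doesn't generate clean slugs for you, these rules are easy to apply in code. This is a minimal sketch. The six-word cap is an arbitrary assumption for "short", and the function doesn't strip stop words or pick the keyword for you.

```python
import re
import unicodedata


def slugify(title: str, max_words: int = 6) -> str:
    """Turn a title into a short, lower-case, hyphen-separated URL slug."""
    # Fold accented characters to plain ASCII (e.g. "é" -> "e") and drop anything else.
    ascii_title = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Replace every run of non-alphanumeric characters with a single hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_title.lower()).strip("-")
    # Keep it short: cap the number of words.
    return "-".join(slug.split("-")[:max_words])


if __name__ == "__main__":
    print(slugify("The 10 Best Café Tables & Chairs for Small Gardens (2024 Guide)"))
    # the-10-best-cafe-tables-chairs
```

In practice, you'd edit the output by hand so the keyword survives the cut.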

Secure Server HTTPS

Use a secure server (HTTPS), and make sure the insecure HTTP version redirects to it with a permanent (301) redirect.
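To confirm the redirect, request the HTTP version without following redirects and check that it returns a permanent redirect to an HTTPS URL. This sketch uses Python's standard library. Accepting both 301 and 308 as "permanent" is an assumption; tighten it to 301 only if you prefer.

```python
import http.client
from urllib.parse import urlsplit


def https_redirect_check(http_url: str, timeout: float = 10.0):
    """Return (ok, status, location) for a plain-HTTP URL, without following redirects."""
    parts = urlsplit(http_url)
    conn = http.client.HTTPConnection(parts.netloc, timeout=timeout)
    try:
        conn.request("GET", parts.path or "/")
        resp = conn.getresponse()
        location = resp.getheader("Location") or ""
        ok = resp.status in (301, 308) and location.startswith("https://")
        return ok, resp.status, location
    finally:
        conn.close()
```

For example, `https_redirect_check("http://businessdaduk.com/")` should return True as its first value if the redirect is set up correctly. A 302 or a missing Location header is worth fixing.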

Neighbours