It's a terrible paper, so it's apparently time for another instalment in what might have to become a more frequent series, in which I am a bit rude about a rubbish paper and worry about how to kill papers like it. At the end, I also talk a little about the role science journalism plays in maintaining the momentum of papers like this, via its own version of the file drawer problem. I'd be interested in people's thoughts.

The claim

Eerland, Guadalupe & Zwaan (2011) tested the hypothesis that we mentally represent magnitudes as a number line (Restle, 1970). This line runs from left to right, with smaller numbers to the left and larger numbers to the right. The suggestion was that leaning to the left should make smaller numbers more readily accessible, while leaning to the right should make larger numbers easier to call to mind (a priming effect). They tested this by manipulating posture while people estimated the magnitudes of a bunch of things.

The questions were designed to be things people didn't know the exact answer to, but for which they could generate a non-random estimate. Example questions are "What is the height of the Eiffel tower in meters?", "How many people live in Antwerp?" and "What is the average life expectancy of a parrot in years?". While people were generating their estimates, they stood on a Wii Balance Board, which tracked their centre-of-pressure (a measure of posture). They were instructed to stand 'upright'; to help them do this, the data from the balance board were presented to them as a dot on a screen with a target, and the computer warned them if their posture slipped away from the target. The mapping from posture to the screen was manipulated without the participants' knowledge, so that in order to keep the dot on the target people were actually leaning left, leaning right, or standing upright (varying by about 2%).
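To make the trick concrete, here is a minimal Python sketch of how a hidden offset in the posture-to-screen mapping can produce a lean. It assumes the display simply subtracts a secret offset from the centre-of-pressure reading and that participants keep nudging their weight to cancel whatever error they see; the function names, the gain and the ±0.02 offsets (loosely echoing the ~2% figure) are hypothetical illustrations, not the paper's actual implementation.

```python
# Hypothetical sketch of the hidden-offset feedback trick. Units, gain and
# offsets are illustrative assumptions, not values from Eerland et al. (2011).

def dot_position(cop_x: float, hidden_offset: float) -> float:
    """Horizontal position of the feedback dot relative to a target at 0.0.

    cop_x is the mediolateral centre-of-pressure (negative = left). The
    display silently subtracts hidden_offset, so the dot sits on the target
    only when cop_x equals hidden_offset.
    """
    return cop_x - hidden_offset


def settle_posture(hidden_offset: float, gain: float = 0.3,
                   steps: int = 200, start: float = 0.0) -> float:
    """Simulate a participant repeatedly shifting weight to cancel the
    on-screen error; returns the CoP they end up holding."""
    cop = start
    for _ in range(steps):
        error = dot_position(cop, hidden_offset)  # how far the dot is off target
        cop -= gain * error                       # shift weight to reduce it
    return cop


# Illustrative offsets (negative = left), loosely echoing the ~2% figure.
CONDITIONS = {"left": -0.02, "upright": 0.0, "right": 0.02}

for name, offset in CONDITIONS.items():
    print(f"{name:8s} settles at CoP = {settle_posture(offset):+.4f}")
```

The participant only ever sees the dot on target; the lean they adopt is whatever the secret offset demands.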

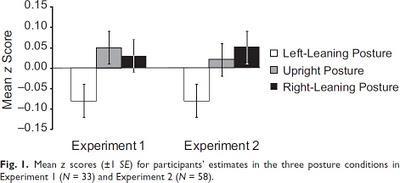

The question was whether this postural manipulation had any effect on estimates of magnitude. The questions all entailed different scales with different units (e.g. metres vs kilos), so to make the data comparable the authors z-transformed the results. This is a standard transformation: a z-score is equal to (data point - mean) / standard deviation, and the numbers that come out are unitless, so they can be compared directly. Experiment 2 had the same design as Experiment 1, but all the questions had answers between 1 and 10, and participants knew their answers had to fall within this range.
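As a rough sketch of what the z-transform does, the Python below standardises two sets of made-up estimates on wildly different scales. It assumes (the paper's exact procedure isn't described here) that estimates are standardised separately for each question, and it uses the sample standard deviation; the numbers are invented for illustration.

```python
from statistics import mean, stdev

def z_scores(values):
    """Standardise raw estimates: (x - mean) / SD, giving unitless scores."""
    m = mean(values)
    sd = stdev(values)  # sample SD; statistics.pstdev would give the population SD
    return [(x - m) / sd for x in values]

# Made-up estimates for two questions with very different units and scales.
eiffel_height_m = [250, 300, 320, 400, 280]
antwerp_population = [300_000, 500_000, 450_000, 1_000_000, 600_000]

print([round(z, 2) for z in z_scores(eiffel_height_m)])
print([round(z, 2) for z in z_scores(antwerp_population)])
```

After the transform, both lists have a mean of 0 and an SD of 1, which is what lets answers in metres and answers in people sit in the same analysis.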

In both experiments, there was only a main effect of Posture, and comparisons revealed that leaning left made estimates smaller than standing upright or leaning right, but that leaning right was not different from standing upright. The authors conclude they have supported their hypothesis and that posture influences estimation of magnitude in accordance with the mental number line theory.

Why this is all entirely wrong

Where to begin? First, and I really can't seem to emphasize this enough, this is not embodied cognition. Second, the experimental question itself is ill-posed: at no point do the authors ask 'What is the function of magnitude estimation? What's it for?'. If we estimate magnitudes in order to actually do things in the real world, and these estimates are so fragile that they can be nudged by a 2% variation in posture, we would never be able to achieve the goals that depend on these magnitudes. So the paper itself is theoretically incoherent, yet another victim of psychology's lack of a central theory. Third, Restle's (1970) paper is not about magnitude estimation; it's about how people do fast addition of numbers. It also claims that the mental number line is very fuzzy, with large regions being treated as the same, which is relevant to the discussion below about power and effect sizes.

But, just like the 'moving through time' paper (Miles, Nind & Macrae, 2010) the real disaster area is the experiment itself. If I had reviewed the paper, it would have gone something like this:

1. The hypothesis was clearly written once the data were in

The authors say "We hypothesized that people would make smaller estimates when they leaned slightly to the left than they would when they leaned slightly to the right" (pg. 1511). They make no reference to the prediction, clearly implied by the mental number line theory, that leaning left and leaning right should each produce results different from standing upright.

2. The data don't actually support the mental number line theory

Leaning left produced smaller magnitude estimates on average across all questions and participants, but leaning right did not differ from upright. There's nothing in a mental number line that should make the left pull on behavior harder than the right.

3. Handedness doesn't explain this

The authors do try to explain this asymmetry by saying that all their participants were right handed and there is evidence that "when people attempt to balance themselves, they show a subtle directional bias favoring whichever hip is on the same side as their dominant hand (Balasubramaniam & Turvey, 2000)" (pg. 1513). In essence, they claim that 'upright' for a right hander is actually slightly right of actual upright.

The reason this doesn't explain their results is that they utterly fail to test this. They recorded centre-of-pressure data, but do not analyze it at all and so they have no evidence of an actual shift. If they did have evidence of such a shift, they should have run a control condition where they identified each person's 'upright' position and calibrated 'left' and 'right' relative to that baseline (and a real journal would have demanded that they run this experiment; resolving a confound requires data, not a half-hearted citation). Actually, in the Methods, they mention calibrating the balance board for each participant, so either they calibrated it the way I just suggested (thus ruling out their handedness explanation) or they calibrated something else and it's not clear from the paper. Regardless, they don't get to call on handedness.

(A side note: if this handedness bias exists in their data, and if the lean influences magnitude estimates, then the bias is the only reason they found the effect to the left; it increased the size of the experimental manipulation to the point where it could be seen in the data. So even if the authors' theoretical claims are true, this line of reasoning suggests they were very lucky to see anything, because they didn't manipulate posture enough.)
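The baseline-relative calibration suggested in point 3, and the asymmetry described in the side note, can be sketched in a few lines of Python. Everything here is hypothetical: the paper reports no postural data, so the quiet-stance samples (a right-hander sitting 0.005 right of centre), the ±0.02 lean and the helper names are assumptions chosen only to show the arithmetic.

```python
from statistics import mean

def personal_targets(quiet_stance_cop, shift=0.02):
    """Lean targets defined relative to a participant's own 'upright'.

    quiet_stance_cop: hypothetical CoP samples (negative = left) recorded while
    the participant stands naturally. shift: assumed lean size.
    """
    baseline = mean(quiet_stance_cop)  # the participant's natural stance
    return {"left": baseline - shift, "upright": baseline, "right": baseline + shift}


def effective_lean(targets, natural_upright):
    """How far each target really is from the participant's natural stance."""
    return {name: cop - natural_upright for name, cop in targets.items()}


# Hypothetical right-hander whose natural stance sits slightly right of centre.
quiet_stance = [0.004, 0.006, 0.005, 0.007, 0.003]
natural = mean(quiet_stance)

# Targets fixed around the board's centre: the left lean is effectively bigger
# than the right lean for this participant.
fixed = {"left": -0.02, "upright": 0.0, "right": 0.02}
print("fixed targets:   ", effective_lean(fixed, natural))

# Targets calibrated to the participant's own upright: symmetric leans.
print("personal targets:", effective_lean(personal_targets(quiet_stance), natural))
```

With fixed targets the hypothetical participant leans about 0.025 to the left but only about 0.015 to the right, which is exactly the lopsided manipulation the side note worries about; calibrating to each person's own baseline removes it.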

4. There is no postural data in this paper

The authors report no data to support their manipulation of posture. Posture involves sway: we bipeds are dynamically unstable (like inverted pendulums), and postural control is an active process of error correction. The authors report that the manipulation moved the centre-of-pressure by about 2%, but this will be an average, and given that the postural control system wants to keep you actually upright, there will have been plenty of sway back towards being in line with the pull of gravity. This time series of sway data should then have been indexed to the question onsets, so that you knew which way people were swaying when you asked them each question.

As it stands, we have no way to evaluate how successful their postural manipulation was (which, given the manipulation failed in one direction, is critical information) nor what posture was actually up to when they answered the questions (which, given the next point, seems fairly crucial to me as well).
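The indexing step described in point 4 might look something like the Python sketch below: for each question onset, find the last centre-of-pressure sample and report where the participant was and which way they were moving. The 100 Hz sampling rate, the 0.5 Hz sway, the onset times and the function name are all invented for illustration; no such data appear in the paper.

```python
import bisect
import math

def sway_at_onsets(times, cop, onsets):
    """Position and sway direction of the CoP at each question onset.

    times: sorted sample timestamps (s); cop: mediolateral CoP, same length
    (negative = left); onsets: times (s) at which questions were presented.
    Uses the last sample at or before each onset; onsets earlier than the
    second sample are clamped to it so a direction can be computed.
    """
    result = []
    for onset in onsets:
        i = max(bisect.bisect_right(times, onset) - 1, 1)
        velocity = cop[i] - cop[i - 1]
        if velocity > 0:
            direction = "right"
        elif velocity < 0:
            direction = "left"
        else:
            direction = "still"
        result.append((cop[i], direction))
    return result


# Hypothetical 100 Hz recording: a 'left' lean of -0.02 with 0.5 Hz sway on top.
times = [k / 100 for k in range(1000)]
cop = [-0.02 + 0.01 * math.sin(2 * math.pi * 0.5 * t) for t in times]
question_onsets = [1.0, 2.5, 4.2]

for onset, (pos, direction) in zip(question_onsets,
                                   sway_at_onsets(times, cop, question_onsets)):
    print(f"question at {onset:.1f}s: CoP {pos:+.3f}, swaying {direction}")
```

Even in this toy 'left' condition, the participant is sometimes swaying rightwards when a question arrives, which is why the averaged 2% figure on its own says little about posture at the moment of answering.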

5. Many of the questions do not show the effect

The supplementary data report the median response for each question and sway condition. Only 25 of the 39 questions have larger numbers in the right sway condition than in the left sway condition, and only 9 show a pattern of increasing magnitude from left to right when you include the upright condition. The authors clearly got lucky in finding their result, which suggests the odds of replicating the effect are low.
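The two counts in point 5 are easy to reproduce from a table of per-question medians. The Python sketch below uses made-up medians (not the paper's supplementary data) and adds the counts you would expect if posture had no effect at all: assuming no ties, right beats left for half the questions, and any one of the six possible orderings of three conditions, including left < upright < right, turns up for a sixth of them.

```python
def count_patterns(medians):
    """Count questions whose medians follow the predicted ordering.

    medians: dict mapping a question label to its (left, upright, right)
    median estimates. Returns (n_right_above_left, n_strictly_increasing).
    """
    right_above_left = sum(1 for left, _, right in medians.values() if right > left)
    increasing = sum(1 for left, up, right in medians.values() if left < up < right)
    return right_above_left, increasing


def chance_expectation(n_questions):
    """Expected counts under no effect and no ties: half for right > left,
    one sixth for any single ordering of three conditions."""
    return n_questions / 2, n_questions / 6


# Made-up medians, for illustration only (not the paper's supplementary data).
example = {
    "eiffel_height_m": (300, 310, 320),
    "antwerp_population": (500_000, 480_000, 490_000),
    "parrot_lifespan_yr": (12, 15, 11),
    "example_q4": (5, 5, 6),
}
print(count_patterns(example))
print(chance_expectation(39))
```

For 39 questions, the no-effect baseline works out at 19.5 and 6.5, which is the yardstick against which the reported 25 and 9 should be read.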

6. The effect sizes are tiny

Backing up that last claim, I looked at the effect sizes. The authors report η², which is effectively 'the proportion of variance explained by the effect'. The effect sizes ranged from 0.07 to 0.17, i.e. 7-17% of the variance was explained by the posture manipulation. These effect sizes are very small (in my experiments with 8-10 people I typically see effect sizes around .7 or .8). In other words, the statistical trend that enabled the main effect of posture to become statistically significant is very, very small, and only the large amount of data provides sufficient power to find it. This type of tiny effect is fairly common in psychology (hence the typically large n's in psychology research), but it makes many of us worry about statistical vs actual significance.
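For readers who want to see what 'proportion of variance explained' means in practice, here is a minimal Python sketch of classic one-way η² (between-condition sum of squares over total sum of squares). It is an illustration only: the post doesn't say which variant the authors computed, and in a repeated-measures design partial η² (effect SS over effect SS plus error SS) is a common alternative. The scores are invented.

```python
from statistics import mean

def eta_squared(groups):
    """Classic one-way eta squared: SS_between / SS_total.

    groups: one list of scores per condition. This ignores any
    repeated-measures structure; partial eta squared would instead divide by
    SS_effect + SS_error for that effect.
    """
    scores = [x for g in groups for x in g]
    grand = mean(scores)
    ss_total = sum((x - grand) ** 2 for x in scores)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    return ss_between / ss_total


# Made-up z-scored estimates for the left, upright and right lean conditions.
left = [-0.6, -0.2, 0.1, -0.9, 0.3, -0.4]
upright = [0.2, -0.1, 0.5, -0.3, 0.4, 0.0]
right = [0.1, 0.3, -0.2, 0.6, 0.0, 0.2]
print(f"eta squared = {eta_squared([left, upright, right]):.2f}")
```

An η² of 0.07 means that 93% of the variability in the estimates has nothing to do with which way people were leaning.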

Summary

This paper is not an example of embodied cognition, and worse, there are methodological and analysis flaws throughout the paper that make the data fairly uninterpretable. But, just like Miles et al. (2010), this effect is now in the literature and in the popular press and there's no obvious way to kill it. Replication is unlikely to work, and even if it did, snazzy journals like Psychological Science don't like publishing mere replications (or, worse, failures to replicate). These high-impact journals publish exciting results that never get looked at again and thus are never corrected in the literature; it's a serious problem with real consequences (e.g. Diederik Stapel's fraudulent work showed up in many of these high-impact journals and was never subjected to the replication attempts which would have revealed the fraud).

A note to science journalists

The popular coverage of papers like this, and the general lack of interest in follow-up on the internet, help these ideas spread and never get corrected. The problems with this paper, however, are mostly the kind of thing you spot when you're a scientist with experience of the literature and the analyses. Science journalists typically do not have this expertise; their job requires breadth rather than depth, so they tend to lack the specialist knowledge and broader context that make these papers stick out like a sore thumb to a reader like me. I'm not passing judgment on this lack of specialisation; it makes perfect sense and it allows them to engage with a broader audience than I will ever attract. That's their job, and that's fine.

But what I would love is for running their analysis past an interested scientist to become more standard. We have our biases and foibles, so you'd have to take all the advice with a grain of salt and with one eye on the different goals of science and science journalism. In this age of 'research impact', where we are heavily evaluated on whether our work has consequences beyond the 20 people who read our journal article, I'm willing to bet you could find plenty of people happy to give a paper and a write-up a once-over, and you could take our advice or not. Maybe this just runs into the various problems with peer review, and I'm depressingly aware that it would be fairly easy to get glowing reviews of this paper, for example (clearly it has already had a couple via Psych Science). But as we try to develop a sustainable and sustained research programme centred on our theoretical approach, these results create noise we have to fight through, and I am personally very keen to address these issues in front of a wider audience.

Another issue is science journalism's version of the file drawer problem. The big science blogs all write positive pieces about exciting new results, because that's what they're trying to communicate. But not writing a more critical piece about something that's getting a lot of attention creates the same bias as not publishing failures to replicate. I wrote this post in part because no-one else was going to; science journalists, do you ever have room to do this kind of work? One good example may be the 'arsenic life' story, although that was still kicked off by a scientist who happened to blog, so maybe there is a use for us after all!

References

Balasubramaniam, R., & Turvey, M. T. (2000). The handedness of postural fluctuations. Human Movement Science, 19, 667–684.

Eerland, A., Guadalupe, T., & Zwaan, R. (2011). Leaning to the left makes the Eiffel Tower seem smaller: Posture-modulated estimation. Psychological Science, 22(12), 1511–1514. DOI: 10.1177/0956797611420731

Miles, L., Nind, L., & Macrae, C. (2010). Moving through time. Psychological Science, 21(2), 222–223. DOI: 10.1177/0956797609359333

Restle, F. (1970). Speed of adding and comparing numbers. Journal of Experimental Psychology, 83(2, Pt. 1), 274–278. DOI: 10.1037/h0028573