Since Penguin (with its 2.0 and 2.1 updates) and other link-based penalties were introduced, I have frequently been asked to remove Google filters. In most cases there were hundreds of spammy links, but many good links too. How do you prepare a disavow file? How do you filter out the filthy ones?

First of all, you will need some tools. I recommend:

- ScrapeBox – paid software ($97 at the time of writing), if you don’t already own a copy

- Excel/OpenOffice/LibreOffice – you can use Google Drive, but offline spreadsheets should be faster

- Quirk Search Status for Firefox

- Seobook toolbar for Firefox

- Notepad++

- NetPeak Checker

There are two types of link-based penalties:

- Algorithmic (Penguin updates) – remove as many links as you can, put the links you can’t remove in a disavow file, and wait.

- Manual penalties – you will get a notice in Google Webmaster Tools. Do the same as for Penguin (remove links and upload the disavow file), then send a reconsideration request.

OK, let’s get to the first step.

1) Log in to Google Webmaster Tools

https://www.google.com/webmasters/tools

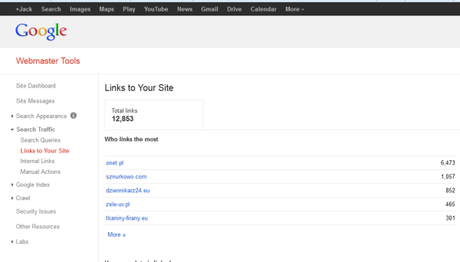

You can also get a link report from Majestic (free once you verify your site) or from opensiteexplorer.org. For the first two reconsideration requests, I recommend using the GWT links only. If that isn’t enough to lift the penalty or filter, add the other link lists to the GWT export.
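If you do combine several exports, the merge is just a de-duplicated union of the lists. Here is a minimal Python sketch, assuming each export has already been reduced to a plain list of URLs; the function name `merge_link_lists` and the sample URLs are hypothetical, and URLs that differ only by a trailing slash are not treated as the same link.

```python
def merge_link_lists(*lists):
    """Union of several URL lists, keeping first-seen order and dropping duplicates."""
    seen = set()
    merged = []
    for links in lists:
        for url in links:
            key = url.strip()  # ignore stray whitespace from copy/paste
            if key and key not in seen:
                seen.add(key)
                merged.append(key)
    return merged


gwt = ["http://spam.example/page1", "http://good.example/"]
majestic = ["http://good.example/", "http://other.example/a"]
print(merge_link_lists(gwt, majestic))
# ['http://spam.example/page1', 'http://good.example/', 'http://other.example/a']
```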

2) In Google Webmaster Tools

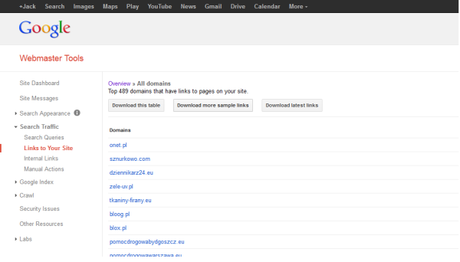

Click Search Traffic -> “Links to your site” -> “More”

Next click “Download more sample links”

You will get a CSV file. Open it in Notepad++.

Here is an example of what it should look like. Copy all the links to the clipboard.

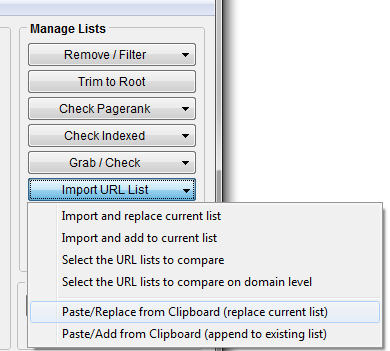
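If you would rather pull the links out of the CSV with a script than copy them by hand, a sketch like the one below works on the raw file text. It is a hypothetical helper, not part of the ScrapeBox workflow: it does not assume any particular column name, it simply keeps every cell that starts with `http://` or `https://`, so a header row is skipped automatically. The sample header and dates are made up.

```python
import csv
import io


def links_from_gwt_csv(text):
    """Return every CSV cell that looks like a URL, in file order."""
    links = []
    for row in csv.reader(io.StringIO(text)):
        for cell in row:
            cell = cell.strip()
            if cell.startswith(("http://", "https://")):
                links.append(cell)
    return links


# Made-up sample; quoted cells may contain commas.
sample = (
    "Links,First discovered\n"
    'http://spam.example/page1,"Mar 1, 2013"\n'
    'http://good.example/,"Feb 2, 2013"\n'
)
print(links_from_gwt_csv(sample))
# ['http://spam.example/page1', 'http://good.example/']
```

For a real export, read the file with `open(path, encoding="utf-8", newline="")` and pass its contents in; the encoding is an assumption, so adjust it if the text comes out garbled.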

3) Open ScrapeBox

Import the links from the clipboard:

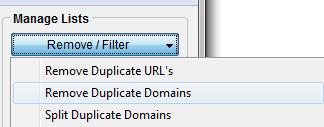

4) Remove duplicate domains (optional)

I strongly recommend disavowing whole domains rather than individual links. Skip this step only if you want to disavow specific URLs on a domain but not the whole domain, because removing duplicate domains keeps just one URL per domain.

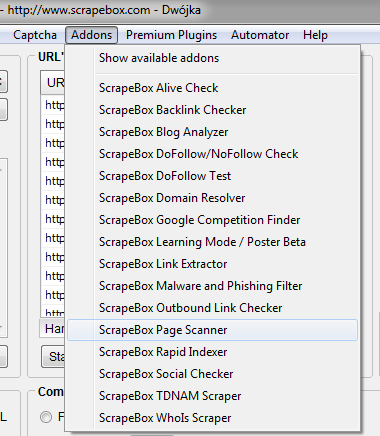
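The same “one URL per domain” idea can be sketched in Python with `urllib.parse.urlsplit`. The helper name `one_url_per_domain` is hypothetical, and treating `www.example.com` and `example.com` as the same domain is a choice made here (controlled by `ignore_www`), not a claim about how ScrapeBox behaves.

```python
from urllib.parse import urlsplit


def one_url_per_domain(urls, ignore_www=True):
    """Keep the first URL seen for each host; drop entries with no host."""
    seen = set()
    kept = []
    for url in urls:
        host = (urlsplit(url).hostname or "").lower()
        if ignore_www and host.startswith("www."):
            host = host[4:]  # assumption: www. and the bare domain count as one
        if host and host not in seen:
            seen.add(host)
            kept.append(url)
    return kept


print(one_url_per_domain([
    "http://www.spam.example/a",
    "http://spam.example/b",
    "http://good.example/",
]))
# ['http://www.spam.example/a', 'http://good.example/']
```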

5) Do the Page Scan

Opinions on disavowing links differ: some say you should disavow dead links too, others that you should disavow only active links. For the first few reconsideration requests, I would disavow only active links.

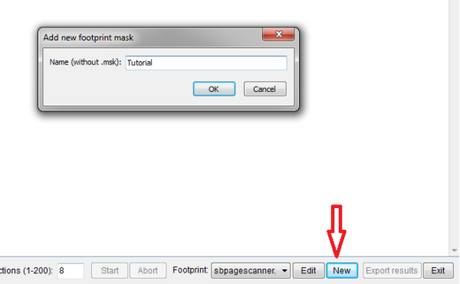

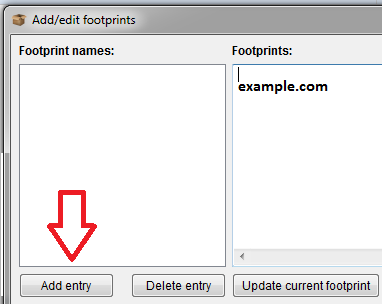

Add a new footprint:

Leave www. out of the footprint, so it matches links to both example.com and www.example.com. It will also match pages that mention your domain without an active link, but that doesn’t matter.

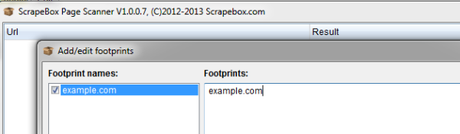

Load the links from the ScrapeBox harvester and start checking. I use 8 connections, but the right number depends on the quality of your Internet connection. Using too many can skew the results, because requests time out and end up in the error list.

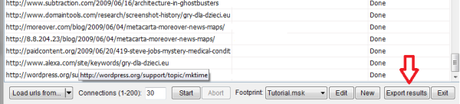
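To make clear what the page scan does, here is a rough Python equivalent: download each page with a limited number of parallel connections and look for the footprint in the page source, sorting URLs into found, not found and error, the same three buckets ScrapeBox exports. This is a hypothetical sketch, not ScrapeBox’s implementation: the names `scan_pages` and `fetch`, the 15-second timeout and the UTF-8 decoding are all assumptions.

```python
from concurrent.futures import ThreadPoolExecutor
from urllib.request import urlopen


def fetch(url, timeout=15):
    """Download a page and return its source as text (timeout is an assumption)."""
    with urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


def scan_pages(urls, footprint, connections=8, fetcher=fetch):
    """Split URLs into (found, notfound, error) by a case-insensitive
    substring search for the footprint in each page's source."""
    needle = footprint.lower()

    def check(url):
        try:
            source = fetcher(url)
        except Exception:  # timeouts, HTTP errors, bad URLs all count as "error"
            return url, "error"
        return url, "found" if needle in source.lower() else "notfound"

    found, notfound, error = [], [], []
    buckets = {"found": found, "notfound": notfound, "error": error}
    # max_workers plays the role of the "connections" setting.
    with ThreadPoolExecutor(max_workers=connections) as pool:
        for url, status in pool.map(check, urls):  # map keeps input order
            buckets[status].append(url)
    return found, notfound, error
```

Because the match is a plain substring search, a footprint of `example.com` also matches `www.example.com` and text mentions of the domain. Passing a different `fetcher` makes it easy to test without going online.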

6) Export Data

Now you have the results. Export them from the Page Scanner to a folder of your choice.

7) Get links to your website

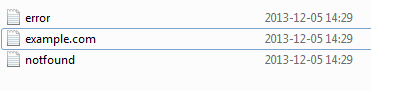

You will get three text files: ‘error’, ‘notfound’ and one with the matching results.

Go back to the ScrapeBox main window and use import and replace with the file named after your footprint, here “example.com”.

8) Check PageRank

Run a domain PageRank check.

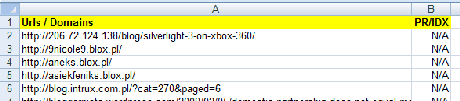

9) Export the list to Excel

I recommend the “import/export with PR directly to Excel” option.

10) Open Excel

Open the xls file with Excel or OpenOffice.

11) Create disavow file

Edit: Google updated PageRank today. Disavow all domains with PageRank n/a, 0 or 1, as they are low-quality links.

This can be a little tricky, as Google hadn’t updated Toolbar PageRank for more than eight months. I would definitely add all PageRank n/a and PR 1 links to the disavow file; these are low-quality links. Even if some PR 1 sites are good, the link value is low. If you have hundreds of PR 1 links, you can disavow them in bulk; otherwise, browse them and select manually.

Websites with PageRank 0 are trickier. Because Toolbar PageRank hadn’t been updated, good domains less than a year old can appear on the list. Most of them should be disavowed, but look for quality links among them.
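The PageRank rules can be written down as a small filter. This Python sketch is hypothetical: it assumes you have already read the spreadsheet into `(domain, pagerank)` pairs, with `None` standing for “n/a”. PR n/a and PR 1 always go to the disavow list; PR 0 goes there too when `disavow_pr0` is `True` (the rule from the edit), otherwise it is set aside for manual review. Higher PR domains are kept.

```python
def split_by_pagerank(rows, disavow_pr0=True):
    """Split (domain, pagerank) rows into (disavow, review) lists.

    pagerank is an int, or None for "n/a". Domains with PR 2 or more
    appear in neither list, i.e. they are kept.
    """
    disavow, review = [], []
    for domain, pr in rows:
        if pr is None or pr == 1:
            disavow.append(domain)
        elif pr == 0:
            (disavow if disavow_pr0 else review).append(domain)
    return disavow, review


rows = [("a.example", None), ("b.example", 0), ("c.example", 1), ("d.example", 3)]
print(split_by_pagerank(rows))
# (['a.example', 'b.example', 'c.example'], [])
print(split_by_pagerank(rows, disavow_pr0=False))
# (['a.example', 'c.example'], ['b.example'])
```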

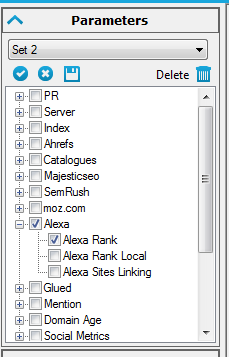

For these, I use NetPeak Checker configured to check only Alexa Rank:

Then I sort the results by Alexa Global Rank and export them from NetPeak Checker to Excel. I manually review the websites with an Alexa rank below 1,000,000 (a lower number means a more popular site). If you find directories, article sites or other low-quality websites among them, put them in the disavow file. Disavow all links with an Alexa rank above 1,000,000.
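The Alexa step boils down to one cutoff. A minimal Python sketch, assuming the exported rows have been read into `(domain, alexa_rank)` pairs; the helper name is hypothetical. Sites ranked below 1,000,000 go to a review list sorted from most to least popular, and everything else goes to the disavow list. Treating a missing rank (`None`) or a rank of exactly 1,000,000 as “disavow” is an assumption the text above doesn’t cover.

```python
ALEXA_CUTOFF = 1_000_000


def split_by_alexa(rows, cutoff=ALEXA_CUTOFF):
    """Split (domain, alexa_rank) rows into (review, disavow).

    review: rank below the cutoff, most popular first, to be checked by hand.
    disavow: rank at or above the cutoff, or no rank at all (an assumption).
    """
    review, disavow = [], []
    for domain, rank in rows:
        if rank is not None and rank < cutoff:
            review.append((rank, domain))
        else:
            disavow.append(domain)
    review.sort()  # lowest rank number (most traffic) first
    return [domain for _, domain in review], disavow


rows = [("a.example", 2_500_000), ("b.example", 40_000), ("c.example", None), ("d.example", 900)]
print(split_by_alexa(rows))
# (['d.example', 'b.example'], ['a.example', 'c.example'])
```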

12) Prepare the disavow file

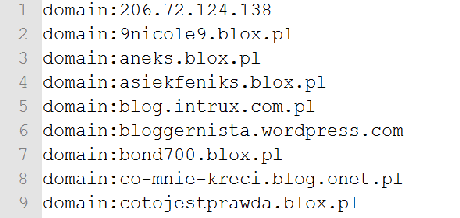

Your list of links should be ready:

We have to make it “disavow-ready”:

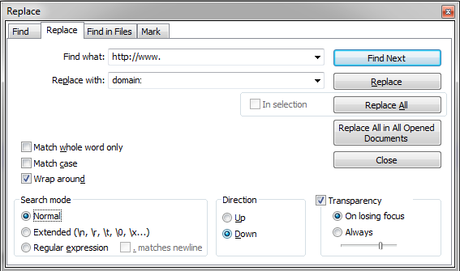

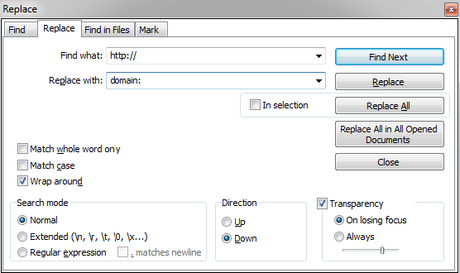

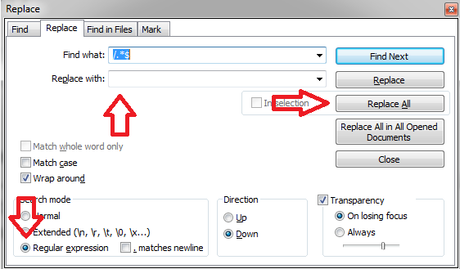

Press Ctrl+H in Notepad++ and make the following replacements:

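1) http://www. -> domain:

2) http:// -> domain:

3) Change the search mode to “Regular expression” and replace “/.*$” with nothing (leave the “Replace with” box empty). This deletes everything from the first slash after the domain name to the end of the line.

If your list also contains https:// links, do replacements 1 and 2 for “https://www.” and “https://” as well, before step 3. Otherwise step 3 would cut those lines down to “https:”.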
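The same transformation can be scripted. This is a hypothetical Python sketch that mirrors the Notepad++ replacements: it swaps the scheme and an optional leading `www.` for `domain:`, cuts everything from the first slash, and drops duplicate lines. It handles both `http://` and `https://`. Lowercasing the URLs and the file name `disavow.txt` are choices made here; Google’s Disavow Tool expects a plain `.txt` file with one entry per line.

```python
import re


def to_disavow_lines(urls):
    """Turn URLs into unique "domain:" lines, in first-seen order."""
    lines = []
    seen = set()
    for url in urls:
        line = url.strip().lower()  # assumption: normalise case
        line = re.sub(r"^https?://(www\.)?", "domain:", line)  # replacements 1 and 2
        line = re.sub(r"/.*$", "", line)  # replacement 3: drop the path
        if line.startswith("domain:") and line not in seen:
            seen.add(line)
            lines.append(line)
    return lines


def write_disavow_file(path, lines):
    """Write one entry per line as UTF-8 text."""
    with open(path, "w", encoding="utf-8") as f:
        f.write("\n".join(lines) + "\n")


lines = to_disavow_lines([
    "http://www.spam.example/page1",
    "http://spam.example/page2",
    "https://Other.example/a/b",
    "http://good.example",
])
print(lines)
# ['domain:spam.example', 'domain:other.example', 'domain:good.example']
write_disavow_file("disavow.txt", lines)
```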

13) Enjoy the results

Congratulations! You have your disavow file ready.

Upload it to the Disavow Tool. For a manual penalty, also file a reconsideration request and wait for the Search Quality Team’s (SQT) response.