This is a spectacularly complicated topic and I applaud Jacobs & Michaels for their gumption in tackling it and the clarity with which they went after it. I also respect the ecological rigour they have applied as they try to find a way to measure, analyse and drive learning in terms of information, and not loans on intelligence. It is way past time for ecological psychology to tackle the process of learning head on. I do think there are problems in the specific implementation they propose, and I'll spend some time here identifying them. I am not raising these problems to kill off the idea, though; read this as me at the stage of my thinking where I am working out what I need to do to improve this framework and use it in my own science.

Prediction 1: Information Variables Live in a Continuous Space

Direct learning provides the concept of an information space as a way to conceptualise the relationships between variables and the learner's path from using one variable to another. Jacobs & Michaels commit themselves to a very specific claim about these spaces: they are continuous, i.e. they are defined at every possible point.

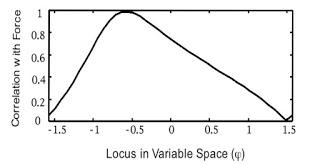

Figure 1. A 1 dimensional information space

In Figure 1, the x axis covers all possible values of a composite variable, Eφ = cos(φ)·velocity + sin(φ)·displacement. This equation describes a particular higher-order relation between the two candidate discrete variables for perceiving force in this particular task: at φ = 0 the composite reduces to velocity alone, and at φ = 90° it reduces to displacement alone. The direct learning theory is committed to the idea that a) all the points along the axis correspond to states of affairs in an energy array and b) a perceiver-actor can therefore in principle end up anywhere in that space.

Prediction 2: Information Spaces are Structured by Information for Learning

If you are a learner and you are currently using an information variable at φ = 0.5, there is plenty of room for improvement. But what makes the learning process change in one way rather than another, let alone in the right way? Jacobs & Michaels claim (pg 337) that:

> Most boldly, our assumption is that there exist higher-order properties of environment-actor systems that are specific to changes that reduce nonoptimalities of perception–action systems, at least in ecologically relevant environments. We also assume that there exists detectable information in ambient energy arrays that specifies such properties. We refer to this information as information for learning.

In other words, properties of the task can tell the learning process how to change, and there is information about those properties. The goal here is to avoid requiring an internal teaching signal that somehow knows what to do (which entails a 'loan on intelligence' you can't ever pay back).

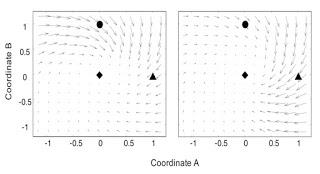

Figure 2. The vector fields describing two potential sets of information for learning
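To make the idea of information for learning a little more concrete, here is a minimal one-dimensional sketch. It assumes, purely for illustration, that the optimal (specifying) variable sits at a hypothetical φ* and that information for learning supplies a signed step in φ whose direction and size depend on the learner's current distance from φ*. The field shape (the sine of the distance to φ*), the learning rate and the value of φ* are my own hypothetical choices, not Jacobs & Michaels' formulation; the point is only to show what it means for a field defined over the information space to drive a learner's trajectory through it.

```python
import math

def composite_variable(phi, velocity, displacement):
    """E_phi = cos(phi)*velocity + sin(phi)*displacement (the Figure 1 axis)."""
    return math.cos(phi) * velocity + math.sin(phi) * displacement

def learning_field(phi, phi_star):
    """Hypothetical information-for-learning field: a signed step that points
    toward phi_star and shrinks as the learner gets closer to it."""
    return math.sin(phi_star - phi)

def simulate_learning(phi0, phi_star, rate=0.3, n_blocks=10):
    """Follow the field from phi0 for n_blocks steps; returns the whole path."""
    path = [phi0]
    phi = phi0
    for _ in range(n_blocks):
        phi += rate * learning_field(phi, phi_star)
        path.append(phi)
    return path

# Hypothetical learner starting at phi = 0.5, with phi_star = 1.2 rad assumed.
path = simulate_learning(phi0=0.5, phi_star=1.2)
```

Because each step is proportional to the sine of the remaining distance, this toy learner moves quickly when far from φ* and slowly when close to it, producing a smooth, continuous trajectory. Whether anything in real energy arrays implements such a field is exactly the open question.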

Evaluating These Claims

We have argued that one of the strengths of the ecological approach is that it allows us to develop mechanistic models of perception-action systems. These models refer only to real parts and processes, rather than relying on box-and-arrow diagrams describing the functions being performed, and they are the gold standard for scientific explanation.

The mechanism mindset forces me to ask one question when evaluating new ideas: how are the proposed parts actually implemented in ecologically scaled physical systems?

Are Information Spaces and Information for Learning Real?

Discrete information variables are real parts. The optic array literally can take the form of things like tau, relative direction of motion, and so on. We see evidence of these forms in patterns of behavior (e.g. in coordination dynamics) and even in patterns of neural activity. Discrete information variables can also definitely be described as points in a continuous space defined by a relation between those variables. But does that description actually point to a real component? Can the optic array (for example) actually take the form of all the intermediate values of the relation?

What about information for learning? Those vector fields are a nice description, but they embody a very particular assumption: that information about one property of the world somehow implements the distance and direction to the best information about another property of the world. That's quite the claim (and Jacobs & Michaels are very upfront about this).

The first thing to note is that there is as yet no evidence that either of these is real, let alone continuous. Jacobs & Michaels say so themselves:

> Note again that the preceding does not prove that the information fields are embodied in ambient energy arrays; it does not prove that the relevant non-optimalities of perceptual and perceptual-motor systems reveal themselves during perceiving and acting. Nevertheless, the existence of information for learning is the cornerstone of the direct learning theory. We argue that the typical complexity of behavior in natural environments should motivate ecological psychologists to start from the assumption that information for learning exists.

Jacobs & Michaels, 2007, pg 339

This is not that crazy; your science has to start somewhere, and at least this is a clear and testable hypothesis. But it's important to keep in mind that this framework as yet has little direct empirical support.

I do not currently think that the energy arrays that information spaces describe can always implement the full space of possible informational values. It's not yet clear to me that it is legitimate to take discrete variables and treat them as independent axes of an information space. I also think that, right now, the way information spaces are defined is wildly under-constrained: in the example shown in Figure 1, why is that equation the right analysis? You could iterate this process, of course, trying various kinds of relations and seeing which space does best. But unless we can come up with a way to figure out which parts, if any, of the information space are possible real states of energy arrays, this will be little more than a data-fitting exercise.
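The iterate-and-compare procedure mentioned above can be sketched as follows. This is a hypothetical illustration, not a method from Jacobs & Michaels: it assumes two made-up families of relations between velocity and displacement (the cos/sin combination from Figure 1 and an equally arbitrary weighted product), grid-searches each family's single free parameter over 0 to π/2, and reports the best correlation each family achieves with a set of judgements. Whichever family wins, nothing in the procedure says whether its best point corresponds to a real state of an energy array.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient, written out to avoid dependencies."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Two hypothetical families of relations, each with one free parameter p.
# The product family assumes strictly positive velocity and displacement.
FAMILIES = {
    "linear (Figure 1)": lambda p, v, d: math.cos(p) * v + math.sin(p) * d,
    "weighted product": lambda p, v, d: (v ** math.cos(p)) * (d ** math.sin(p)),
}

def best_fit(family, trials, judgements, grid):
    """Return (best parameter, best r) for one family over a parameter grid."""
    scored = []
    for p in grid:
        values = [family(p, v, d) for v, d in trials]
        scored.append((p, pearson_r(values, judgements)))
    return max(scored, key=lambda pr: pr[1])

def compare_families(trials, judgements, n_grid=91):
    """Best (parameter, r) for every family; trials are (velocity, displacement)."""
    grid = [i * (math.pi / 2) / (n_grid - 1) for i in range(n_grid)]
    return {name: best_fit(f, trials, judgements, grid)
            for name, f in FAMILIES.items()}
```

A different choice of families or grid would give a different "best space", which is the under-constraint problem in miniature.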

Are Information Space Variables Cue Combination?

This all leads me to think that, despite the authors' claim to the contrary, this analysis currently reads very much like cue combination. Cue combination is the representational idea that you can take in non-specifying cues and integrate them internally into a composite variable, taking weighted combinations of the cues to achieve the best outcome. Because it all happens in the head, any combination of cues is technically possible, unconstrained by whether that combination exists in nature. Right now, Jacobs & Michaels are taking the work of cue combination out of the head, putting it into the information space description of perceptual energy arrays, and saying 'let's all assume this will work'. At this point, I don't think they have sufficiently dodged the cue combination mindset. But again, you have to start somewhere, and the mechanistic mindset is useful here because it forces you to front up to the question of whether your description points to anything real. I think that approach will help burn off this lingering whiff of cue combination.

Why Must Information Fields be Continuous?

As far as I can tell, the drive towards insisting on continuous information spaces comes from wanting to explain why performance changes fairly continuously over learning. When Jacobs & Michaels reanalyse older data in information space terms, they do see evidence that people seem to follow trajectories through information space, rather than jumping from discrete variable to discrete variable. I worry this is an artefact of their analysis, though.

What they do is identify the value on the information space axis that correlates most highly with the judgements people made, and then correlate performance with the dynamical property the person is trying to perceive. This gives you x,y coordinates in the information space for every block of trials, and you can track how those change over time; see Figure 3.

Figure 3. Movement of 3 individuals through the information space over blocks 1-6
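The analysis behind Figure 3, as described above, can be sketched like this. It is my reconstruction under stated assumptions, not Jacobs & Michaels' code: for each block it grid-searches φ (assumed here to run from 0 to π/2) for the composite variable that correlates best with the participant's judgements, which gives the x coordinate, and then correlates the judgements with the actual dynamical property, which gives the y coordinate. The function names and the per-block data layout are hypothetical.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient, written out to avoid dependencies."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def best_phi(velocity, displacement, judgements, n_grid=91):
    """x coordinate: the phi whose E_phi correlates most highly with judgements."""
    grid = [i * (math.pi / 2) / (n_grid - 1) for i in range(n_grid)]

    def r_at(phi):
        e = [math.cos(phi) * v + math.sin(phi) * d
             for v, d in zip(velocity, displacement)]
        return pearson_r(e, judgements)

    return max(grid, key=r_at)

def block_coordinates(blocks):
    """blocks: list of dicts holding 'velocity', 'displacement', 'judgement'
    and 'property' lists (hypothetical layout). Returns one (x, y) per block."""
    points = []
    for b in blocks:
        x = best_phi(b["velocity"], b["displacement"], b["judgement"])
        y = pearson_r(b["judgement"], b["property"])
        points.append((x, y))
    return points
```

Note that the x coordinate is simply an argmax; nothing here records how high that best correlation actually is.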

This analysis does allow people to land anywhere in the space, and it does show people heading towards the specifying variable. But the correlational analysis driving the x coordinate concerns me. They don't report how strong the correlations are, and the analysis assumes that the best correlation points to consistent use of one of the intermediate values.

If information variables really are discrete, and information spaces really aren't that continuous, then I suspect you could still get this result. If I start out using one variable and am in the process of learning another, I may switch within a block, so that I am sometimes using one and sometimes the other; on average, my performance will correlate best with some intermediate value, depending on the proportion of trials on which I used each variable. I might also be improving my detection of the new variable, so that even after I have switched I am still getting better at discriminating it. My errors might not be due to using an intermediate, non-specifying variable, but to variability in my ability to get to the information.
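The switching worry can be checked with a small simulation. The sketch below is hypothetical throughout: a simulated observer uses velocity alone (φ = 0) on some trials and displacement alone (φ = π/2) on the rest, never any intermediate variable, and the same best-correlation search over φ is then run on the pooled block. The uniform stimulus ranges, trial count, seed and the proportion parameter `p_new` are all assumptions made for illustration.

```python
import math
import random

def pearson_r(xs, ys):
    """Pearson correlation coefficient, written out to avoid dependencies."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def best_phi(velocity, displacement, judgements, n_grid=91):
    """The phi in [0, pi/2] whose E_phi correlates best with the judgements."""
    grid = [i * (math.pi / 2) / (n_grid - 1) for i in range(n_grid)]

    def r_at(phi):
        e = [math.cos(phi) * v + math.sin(phi) * d
             for v, d in zip(velocity, displacement)]
        return pearson_r(e, judgements)

    return max(grid, key=r_at)

def simulate_mixed_block(p_new, n_trials=400, seed=0):
    """Each trial uses ONE discrete variable: displacement with probability
    p_new, otherwise velocity. Returns the best-correlating phi for the block."""
    rng = random.Random(seed)
    velocity = [rng.uniform(1, 5) for _ in range(n_trials)]
    displacement = [rng.uniform(1, 5) for _ in range(n_trials)]
    judgements = [d if rng.random() < p_new else v
                  for v, d in zip(velocity, displacement)]
    return best_phi(velocity, displacement, judgements)

for p in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(p, round(simulate_mixed_block(p), 2))
```

Under these assumptions the recovered φ should drift from 0 towards π/2 as `p_new` increases, landing near π/4 when the two variables are used equally often, even though no simulated trial ever uses an intermediate variable.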

This intuition comes from a paper I like a lot by Wenderoth & Bock (2001). They identify that the learning process seems to include three distinct processes: identifying the variable to be learned, getting yourself into the ballpark of using it, and then honing that use by improving your detection. Intermediate levels of performance then come from the averaging we do across trials. I like this because it accords nicely with the almost law-like appearance of power laws in learning data: steep initial improvements with diminishing returns. On Wenderoth & Bock's account, that first jump comes after you have identified a new variable and reflects your switch to using it; the later part is you improving your discrimination of the variable and your use of it to control action.
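The power-law shape mentioned above can be made concrete. One common form is a power law of practice, error E(N) = a·N^(−b) on block N, whose parameters can be estimated by ordinary least squares on log E against log N. The sketch below assumes that form and uses made-up, noise-free error data; it shows only the shape (large early gains, diminishing returns), not Wenderoth & Bock's own analysis.

```python
import math

def fit_power_law(blocks, errors):
    """Fit errors ~ a * blocks**(-b) by least squares in log-log space."""
    xs = [math.log(n) for n in blocks]
    ys = [math.log(e) for e in errors]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    a = math.exp(my - slope * mx)
    return a, -slope  # b is the negated log-log slope

def improvement_per_block(a, b, n_blocks):
    """Drop in predicted error from block N to N+1: large early, small late."""
    pred = [a * n ** (-b) for n in range(1, n_blocks + 1)]
    return [pred[i] - pred[i + 1] for i in range(n_blocks - 1)]

# Hypothetical data generated from a = 10, b = 0.5 (no noise).
blocks = list(range(1, 9))
errors = [10 * n ** -0.5 for n in blocks]
a, b = fit_power_law(blocks, errors)
gains = improvement_per_block(a, b, len(blocks))
```

On the three-process reading, the large early gains would correspond to switching to the new variable and the long tail to honing its detection; the fitted curve by itself cannot tell those apart.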

When I study how information use changes in learning, I use perturbation experiments to identify which variable is being used (see this post for a general explanation and this post for a specific example). It is a less continuous measure (and so I may be imposing discreteness where none exists) but it is a direct measure of which variable is currently being used, rather than an inference based on a correlation. Perhaps the way forward is to integrate these methods somehow.
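The basic logic of a perturbation experiment can be sketched with a toy simulated observer. This is a hypothetical illustration, not a description of any particular study: on a perturbation trial one candidate variable is shifted while the other is left untouched, and the change in the observer's response reveals which variable it is using. The observer model, variable names and shift size are all assumptions.

```python
def make_observer(variable):
    """Toy observer whose response depends only on one named variable."""
    return lambda trial: trial[variable]

def perturbation_test(observer, baseline, shift=1.0):
    """Perturb each candidate variable in turn, holding the others fixed.
    Returns {variable: change in response}; only the used variable moves it."""
    base_response = observer(baseline)
    effects = {}
    for name in baseline:
        perturbed = dict(baseline)  # copy so the baseline stays untouched
        perturbed[name] += shift
        effects[name] = observer(perturbed) - base_response
    return effects

def variable_in_use(observer, baseline, shift=1.0):
    """Name of the variable whose perturbation shifts the response most."""
    effects = perturbation_test(observer, baseline, shift)
    return max(effects, key=lambda k: abs(effects[k]))

baseline = {"velocity": 2.0, "displacement": 3.0}
observer = make_observer("displacement")
print(variable_in_use(observer, baseline))  # prints: displacement
```

Unlike the correlational search, this reads the variable in use directly off the response to each perturbation, at the cost of only distinguishing the candidate variables you chose to perturb.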