Most people have heard of Microsoft Azure, and many of us know that it is a cloud computing platform provided by Microsoft, but it is much more than that. So what exactly is Azure, and how does it work?

Microsoft Azure is an on-demand data centre in the cloud that scales up and down as you need it to. We are all familiar with on-demand services.

Take electricity as an example. We pay for electricity at different rates and in different amounts as we move through the day. There are peak times in the day when we all consume much more power, but we don't pay as if we were using power at peak levels the entire day. Instead, we pay only for what we use, when we use it.

More importantly, it is available whenever we need it.

Azure works the same way: it gives us the capability to scale our data centres up or down when we need to.

Azure is flexible enough to integrate easily with on-premises servers and data centres, or to stand alone as needed.

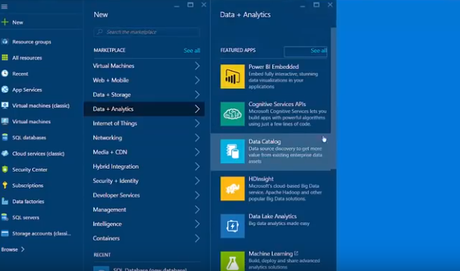

Azure Data Factory

Azure Data Factory (ADF) lets developers integrate disparate data sources. It is a cloud platform for handling data, similar in purpose to SQL Server Integration Services (SSIS). ADF's authoring interface provides a drag-and-drop pipeline canvas, although it is not the same as the SSIS designer.

ADF gives customers a central place to handle workloads such as web logs, clickstream analysis and social sentiment analysis.

Microsoft views Data Factory as one of the essential tools for customers who need hybrid data integration across SQL Server, Azure SQL Database and Azure Blob storage.

Azure Data Factory is a fully managed service for composing data storage, processing, and movement services into streamlined, scalable, and reliable data production pipelines.

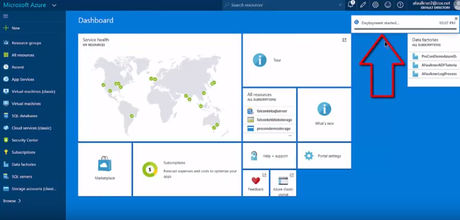

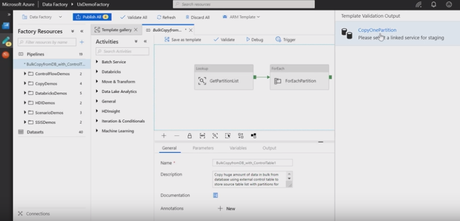

If you are a brand-new user and want to start building Data Factory pipelines quickly, Microsoft provides a template gallery of predefined templates. You pick a template, start building your pipeline from it, and then add to it. Templates are an excellent way to get started with Data Factory, and they improve productivity. Now let's create an Azure Data Factory.

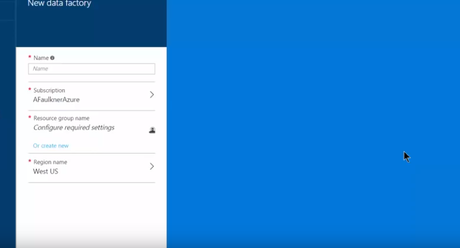

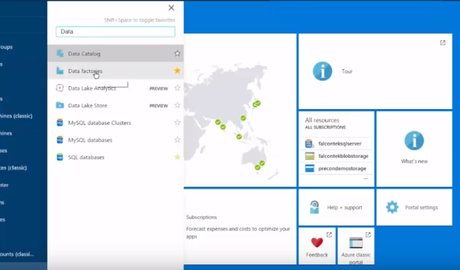

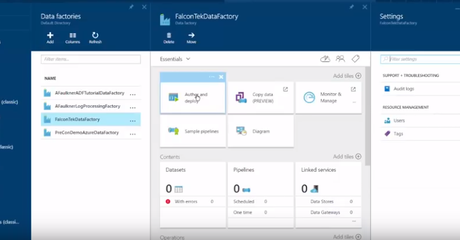

Steps involved in creating an Azure Data Factory

- First, sign in to the Azure portal.

- You need two pieces of information:

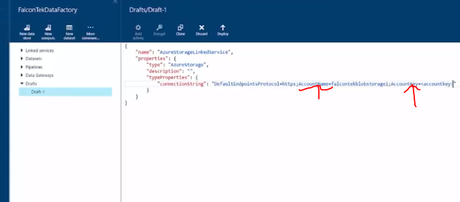

1. Azure Blob Storage account name

2. Azure Blob Storage account key

Replace the account name and key with your own values.
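Behind the UI, Data Factory stores a linked service as a JSON definition. As a minimal sketch, assuming you want to see or author that JSON yourself, the account name and key combine into a connection string for an `AzureBlobStorage` linked service. The helper function and the name `AzureStorageLinkedService` are illustrative choices, not anything the portal requires.

```python
import json


def blob_linked_service(account_name: str, account_key: str,
                        name: str = "AzureStorageLinkedService") -> dict:
    """Build an Azure Blob Storage linked-service definition (illustrative helper)."""
    connection_string = (
        "DefaultEndpointsProtocol=https;"
        f"AccountName={account_name};"
        f"AccountKey={account_key}"
    )
    return {
        "name": name,
        "properties": {
            "type": "AzureBlobStorage",
            "typeProperties": {"connectionString": connection_string},
        },
    }


# Placeholder values: replace them with your own account name and key.
print(json.dumps(blob_linked_service("mystorageaccount", "<account-key>"), indent=2))
```

Keeping the key inline is fine for a first experiment; for anything shared, referencing a secret store such as Azure Key Vault is the safer habit.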

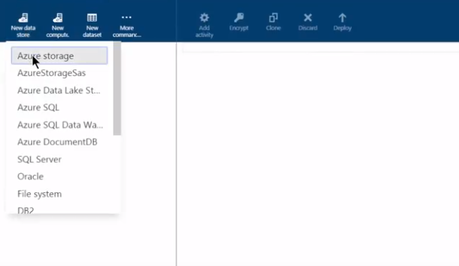

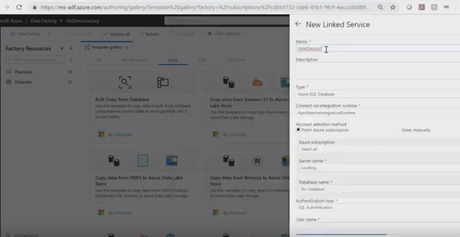

The screenshots above show how to create the data factory and the linked service for the Azure Storage account. In the same way, we have to create:

- a linked service for the Azure SQL Database.

Replace the server name, database name, user name (in the form username@servername) and password with your own values.
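The Azure SQL Database linked service follows the same JSON shape. A minimal sketch, assuming SQL authentication over the default port 1433; the helper and the linked-service name are hypothetical, and the values passed in are placeholders.

```python
import json


def sql_linked_service(server: str, database: str, user: str, password: str,
                       name: str = "AzureSqlDatabaseLinkedService") -> dict:
    """Build an Azure SQL Database linked-service definition (illustrative helper)."""
    connection_string = (
        f"Data Source=tcp:{server}.database.windows.net,1433;"
        f"Initial Catalog={database};"
        # The user name takes the username@servername form.
        f"User ID={user}@{server};"
        f"Password={password};"
        "Trusted_Connection=False;Encrypt=True;Connection Timeout=30"
    )
    return {
        "name": name,
        "properties": {
            "type": "AzureSqlDatabase",
            "typeProperties": {"connectionString": connection_string},
        },
    }


print(json.dumps(sql_linked_service("myserver", "mydb", "myadmin", "<password>"), indent=2))
```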

Now that the data factory is created, let's look at templates and how to use them in Azure Data Factory to work more productively.

What is a template?

A template is a predefined Azure Data Factory pipeline that lets you start working immediately. It is useful when you are using Data Factory for the first time and want to get started quickly, and it helps reduce development time and improve productivity.

Here are some of the available templates:

- Copy template

- My template

- SSIS template

- Transformation template

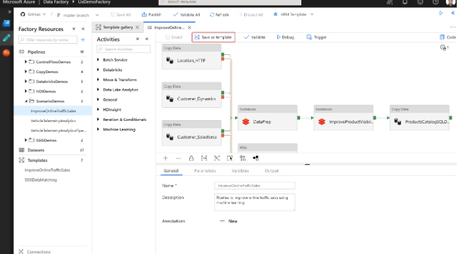

1. On the Overview page, select the option to create a pipeline from a template.

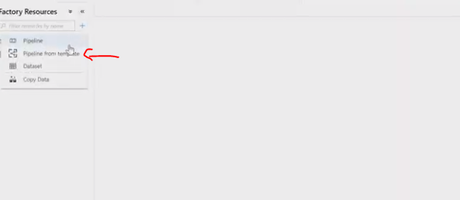

2. Alternatively, go to the Author page, click (+), and select Pipeline from template.

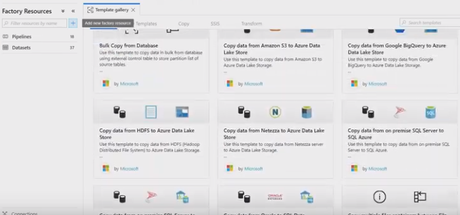

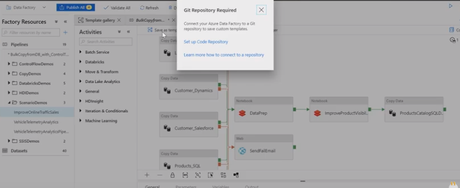

3. This opens the template gallery, which contains many out-of-the-box templates you can use to improve operational productivity.

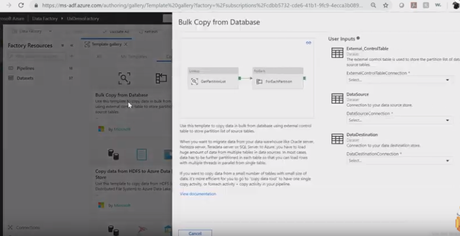

4. The gallery groups templates by category. The copy templates, for example, move data from various sources: bulk copy from a database, copy from Amazon S3 to Azure Data Lake Store, copy from Google BigQuery, and so on.

Many customers want to bulk copy data from one database to another, so click Bulk copy from database.

5. The template page first describes the pattern the template uses. This pattern reads the partition list of the database you want to move from an external control table, so you need to tell the template where that control table is. Select an existing connection or create a new one, and provide the required details.

6. After filling in the form, click Use this template.

The template then guides you through the properties this pipeline needs in order to run. Check the settings and parameters: the template parameterizes everything so that you can get started quickly.
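As we understand Microsoft's description of the bulk-copy template, the pipeline it generates is a Lookup activity that reads the partition list from the control table, a ForEach activity that iterates over that list, and a Copy activity that copies each partition. The sketch below builds a trimmed JSON version of that structure to show how parameters and expressions tie it together. It is not the template's exact definition: the activity names, dataset names (`ControlTableDataset`, `SourceDataset`, `SinkDataset`), the `Control_Table_Name` parameter and the `FilterQuery` column are hypothetical, it assumes an Azure SQL source and sink, and a deployable pipeline needs more properties.

```python
import json


def expr(value: str) -> dict:
    """Wrap an ADF expression the way dynamic content is serialized in pipeline JSON."""
    return {"value": value, "type": "Expression"}


def dataset_ref(name: str) -> dict:
    """Reference a dataset by name (dataset names here are hypothetical)."""
    return {"referenceName": name, "type": "DatasetReference"}


pipeline = {
    "name": "BulkCopyFromDatabase",
    "properties": {
        # Pipeline parameter: the control table is supplied at run time.
        "parameters": {"Control_Table_Name": {"type": "String"}},
        "activities": [
            {
                "name": "LookupPartitionList",
                "type": "Lookup",
                "typeProperties": {
                    "source": {
                        "type": "AzureSqlSource",
                        "sqlReaderQuery": expr(
                            "@concat('SELECT * FROM ', pipeline().parameters.Control_Table_Name)"
                        ),
                    },
                    "dataset": dataset_ref("ControlTableDataset"),
                    "firstRowOnly": False,  # return every partition row, not just the first
                },
            },
            {
                "name": "ForEachPartition",
                "type": "ForEach",
                "dependsOn": [
                    {"activity": "LookupPartitionList", "dependencyConditions": ["Succeeded"]}
                ],
                "typeProperties": {
                    "items": expr("@activity('LookupPartitionList').output.value"),
                    "activities": [
                        {
                            "name": "CopyOnePartition",
                            "type": "Copy",
                            "inputs": [dataset_ref("SourceDataset")],
                            "outputs": [dataset_ref("SinkDataset")],
                            "typeProperties": {
                                "source": {
                                    "type": "AzureSqlSource",
                                    # Each control-table row supplies its own filter query.
                                    "sqlReaderQuery": expr("@item().FilterQuery"),
                                },
                                "sink": {"type": "AzureSqlSink"},
                            },
                        }
                    ],
                },
            },
        ],
    },
}

print(json.dumps(pipeline, indent=2))
```

Because the control table name is a parameter and each copy reads its query from `@item()`, the same pipeline can move a different database, or a different partitioning, without editing any activity.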

My Template

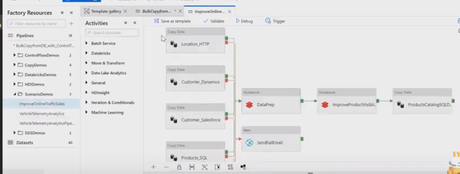

Customers often want to save their existing pipelines as templates as well. This matters in an enterprise: a pipeline developed by one business unit may be useful to another business unit with a different configuration. So you can save your own pipeline as a template.

Here is how My templates works:

- To save a pipeline as a template, click Save as template on the pipeline canvas. A pop-up appears, because you must enable Git integration before you can save a pipeline as a template.

- Git integration supports both Azure DevOps Git and GitHub.

- In this example, we choose GitHub and provide the GitHub account. Data Factory then lists all the repositories you have access to. Fill in the remaining fields and click Save.

- After you click Save, the code representation of everything in your data factory is saved to the GitHub repository.

- To save your pipeline as a template, enter the template name, a description, and the Git location where it should be saved.

If you check the Git repository, you will find the template folder that was created.

So we are not reinventing the wheel here; we are simply using the Git repository as the backend store for our templates.

SSIS template - This covers a common SSIS pattern: if you want to schedule the SSIS integration runtime to execute SSIS packages, there is a template for that too.
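One way to schedule the Azure-SSIS integration runtime is to start it just before the packages run and stop it afterwards by calling the Azure Resource Manager REST API, for example from Web activities in a pipeline. As a minimal sketch, assuming the `2018-06-01` Data Factory API version, the helper below builds the URL such a start or stop POST request targets. The helper name and every resource name are placeholders, and the real request also needs an Azure AD access token, which is not shown.

```python
from urllib.parse import quote

ARM_ENDPOINT = "https://management.azure.com"
API_VERSION = "2018-06-01"  # assumed Data Factory REST API version


def ir_action_url(subscription_id: str, resource_group: str, factory: str,
                  runtime: str, action: str) -> str:
    """Build the ARM URL that starts or stops an integration runtime (send it as a POST)."""
    if action not in ("start", "stop"):
        raise ValueError("action must be 'start' or 'stop'")
    path = (
        f"/subscriptions/{quote(subscription_id)}"
        f"/resourceGroups/{quote(resource_group)}"
        "/providers/Microsoft.DataFactory"
        f"/factories/{quote(factory)}"
        f"/integrationRuntimes/{quote(runtime)}/{action}"
    )
    return f"{ARM_ENDPOINT}{path}?api-version={API_VERSION}"


print(ir_action_url("00000000-0000-0000-0000-000000000000",
                    "my-rg", "my-factory", "my-ssis-ir", "start"))
```

Starting the runtime only when packages need it, and stopping it afterwards, is the same pay-for-what-you-use idea as the electricity example at the start of this article.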

Transformation templates - These templates perform ETL with Databricks or transform data using on-demand HDInsight. For example, you can use one of them to create a simple word-count PySpark application that counts the number of words in the input documents and writes the counts to a text file.
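The template's word-count job runs as PySpark on a cluster. To show only the logic without a Spark cluster, here is a plain-Python stand-in, not the template's code: split each document into words (Spark's `flatMap`), pair each word with a count of 1 and sum the counts per word (`map` plus `reduceByKey`), then write one `word<TAB>count` line per word to a text file. The tokenizing rule, output path and file format are assumptions.

```python
import re
import tempfile
from collections import Counter
from pathlib import Path


def word_count(documents):
    """Count words across all documents, case-insensitively."""
    counts = Counter()
    for doc in documents:
        # Same effect as flatMap(split) followed by map(word -> 1) and reduceByKey(add).
        counts.update(re.findall(r"[a-z0-9']+", doc.lower()))
    return counts


def write_counts(counts, path):
    """Write one 'word<TAB>count' line per word, most frequent first."""
    lines = [f"{word}\t{n}" for word, n in counts.most_common()]
    Path(path).write_text("\n".join(lines) + "\n", encoding="utf-8")


docs = ["Data Factory moves data.", "Data pipelines move data too."]
counts = word_count(docs)
out_path = Path(tempfile.gettempdir()) / "wordcount.txt"
write_counts(counts, out_path)
print(counts["data"])  # 4
```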

Conclusion: Templates offer a lot of power, and they are improving steadily. Microsoft will probably keep adding features that make Data Factory tools easier to use. Start building pipelines quickly and efficiently with Azure Data Factory.