…continuing on…more thinking out loud…Some years ago I published a long theory-and-methodology piece, Literary Morphology: Nine Propositions in a Naturalist Theory of Form. In it I proposed that literary form is fundamentally computational. One of my reviewers asked: What’s computation?

Good question. It wasn’t clear to me just how to answer it, but whatever I said must have been sufficient, as the paper got published. And I still don’t have an answer to the question. Yes, I studied computational linguistics, I know what THAT computation was about. But computation itself? That’s still under investigation, no?

Yet, if anything is computation, surely arithmetic is computation. And here things get tricky and interesting. While the so-called cognitive revolution in the human sciences is what happened when the idea of computation began percolating through in the 1950s, it certainly wasn’t arithmetic that was in the driver’s seat. It was digital computing. And yet, arithmetic is important in the abstract theory of computation, as axiomatised by Peano and employed by Kurt Gödel in his incompleteness theorems.

Counting

Let’s set that aside.

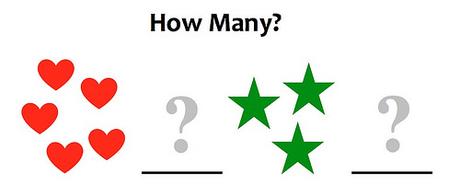

I want to think about arithmetic. We know that many preliterate cultures lack developed number systems. Roughly speaking, they’ll have the equivalent of one, two, three, and many, but that’s it. We know as well that many animals have a basic sense of numerosity that allows them to distinguish small quantities (generally fewer than a half-dozen or so). It seems that the basic human move is to attach number terms to the percepts given by the numerosity sense.

Going much beyond that requires writing. Sorta’. We get tally marks on wood, stone, or bone and the use of clay tokens to enumerate herd animals. The written representation of language comes later. That is, it would appear that written language is the child of written number.

But it is one thing to write numbers down. It is another thing to compute with them. Computing with Roman numerals, to give the most obvious example, is difficult. And it took a while for the Hindu-Arabic system to make its way to Europe and be adopted there.

The exact history of these matters is beside the point. The point, rather, is that there IS a history and it is long and complex. Plain ordinary arithmetic, the kind we teach to schoolchildren, is a cultural accomplishment of considerable sophistication.

Calculating

And yet using it is difficult, not rocket-scientist difficult, but it-takes-a-lot-of-practice difficult. And still we make mistakes. It’s just not “natural” to us.

Why? I’m not sure, but think about it for a minute. First we learn to count. On the one hand we have to memorize a sequence of number names. The sequence has to be exactly correct. That’s tricky. But we must also learn how to use that sequence in counting objects. We are taught to talk our way through the series while ‘marking’ or ‘noting’ individual objects in some way. We might be counting out physical blocks or counters, moving them from one place to another as we count them. Or we’ll be given worksheets where a number of objects are represented on a page and we have to write the correct number.
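To make the two halves of counting concrete, here is a minimal sketch, not a model of what children actually do. It assumes the memorized sequence is a fixed list and that 'marking' an object means moving it to a separate pile; the names `NUMBER_NAMES` and `count_objects` are made up for illustration.

```python
# Hypothetical illustration: counting as reciting a memorized sequence
# while marking exactly one object per number name.
NUMBER_NAMES = ["one", "two", "three", "four", "five",
                "six", "seven", "eight", "nine", "ten"]


def count_objects(objects):
    """Recite the names in order, marking one object per name.

    Returns the last name recited, or None if there was nothing to count.
    """
    counted = []       # pile of objects already marked
    last_name = None   # nothing recited yet
    for name, obj in zip(NUMBER_NAMES, objects):
        counted.append(obj)  # 'mark' the object by moving it to the pile
        last_name = name     # the last name said is the count
    if len(counted) < len(objects):
        # The memorized sequence ran out before the objects did.
        raise ValueError("ran out of memorized number names")
    return last_name


print(count_objects(["block", "block", "block"]))
```

Both requirements from the paragraph show up here: the list has to be in exactly the right order, and each object has to be paired with exactly one name.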

Once we’ve got that mastered, then we have to learn the atomic facts of addition, subtraction, multiplication, and division. These atomic facts take the form:

number1 operation number2 equals number3

Thus:

2 + 7 = 9

8 – 6 = 2

4 * 3 = 12

81 / 9 = 9

And then we have to learn procedures for using these atomic facts to calculate with larger numbers, and fractions, and decimal fractions. This takes time and practice, hours and hours over a half-dozen or more years in childhood. And it has to be done exactly right for the calculations to be correct.
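As a rough sketch of what such a procedure looks like, here is schoolbook column addition built on a table of single-digit facts. The names `ADDITION_FACTS` and `add_by_columns` are made up for illustration, and the table is generated with Python's own `+` as a stand-in for memorization.

```python
# Atomic facts: every single-digit sum, held in a 'memorized' table.
# (Building it with + is a shortcut; a child learns these by rote.)
ADDITION_FACTS = {(a, b): a + b for a in range(10) for b in range(10)}


def add_by_columns(x: str, y: str) -> str:
    """Add two non-negative decimal numerals given as digit strings."""
    width = max(len(x), len(y))
    x, y = x.zfill(width), y.zfill(width)  # pad the shorter numeral with zeros
    carry = 0
    digits = []
    # Work from the rightmost column to the leftmost.
    for dx, dy in zip(reversed(x), reversed(y)):
        column_sum = ADDITION_FACTS[(int(dx), int(dy))]  # look up one atomic fact
        # Adding the carry is itself another small fact; + is used for brevity.
        carry, digit = divmod(column_sum + carry, 10)
        digits.append(str(digit))
    if carry:
        digits.append(str(carry))  # a final carry becomes a new leading digit
    return "".join(reversed(digits))


print(add_by_columns("478", "64"))
```

The procedure itself is only a loop and a running carry. Everything else is table lookup, which is why one misremembered fact or one dropped carry spoils the whole result.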

It’s maddening. I can see at least two difficulties. 1) To a first approximation, these numerical symbols look very much alike, so it’s easy to confuse them. If you are confused about 6 and 9, then you’re going to make mistakes in using a good many atomic facts, and there are several hundred to be memorized. 2) Working with largish to large numbers requires you to string together long sequences of atomic operations and keep track of your running value as you do so. The process is unforgiving in a way that ordinary language is not.

Algorithms

Now, what happens when, having mastered this process, you now abstract over it? (Here I’m thinking of Piaget’s notion of reflective abstraction.) That is, you treat the process itself as an object of contemplation.

What do you get? You get the notion of an algorithm and the beginnings of the abstract theory of computation. And that, I assume, is what Alan Turing did. And it’s Alan Turing’s conceptualization of computation which, when implemented in digital hardware, gave rise to the so-called cognitive revolution.

Hmmmm, we thought, so the mind’s executing algorithms. That’s what thought is. Well, maybe it is, maybe it isn’t. And yet surely when doing arithmetic, the mind really is executing algorithms, if often ineffectively. The question is whether or not more primitive processes are also algorithmic. I suspect not, but that’s another story.

Conversation, again

But I want to go back to where this line of thinking began, with the idea that ordinary conversation is the seed of (a certain kind of) computation, thus making language a computational process. What I want is that, when ordinary conversation is internalized and becomes inner speech (Vygotsky), we end up with the mental machinery necessary for, well, language, but also computation.

I suppose what I’m after is some notion of mental registers such that internalizing a conversational other gives you more working registers or allows you to work more effectively with the registers you’ve got. Something like that. Back and forth conversation is one thing. But what’s going on when someone generates a long string of uninterrupted speech, or inner thought, or writing? What’s that internalized other doing that makes this possible?

Arithmetic computation often requires us to string together long sequences of elementary operations. This is quite explicit and can be readily verbalized. In fact, don’t we sometimes vocalize or subvocalize when calculating?

I’ll conclude with one last thought: modus ponens. It’s a rule of inference. On the one hand I know “P implies Q.” On the other hand, you assert “P is true”; from which I conclude, “Q is true.”

Isn’t that how those atomic arithmetic facts are used?

(1) number1 operation number2 equals number3

(2) we are given: ‘number1 operation number2’

(3) thus, by modus ponens, we may conclude: ‘number3’

More later.
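To make that last scheme concrete, here is a minimal sketch under a simplifying assumption: each atomic fact is stored as an implication from a premise (‘number1 operation number2’) to a conclusion (‘number3’). The names `ATOMIC_FACTS` and `modus_ponens` are made up for illustration, and the table holds only the four example facts given earlier.

```python
# Each atomic fact is stored as 'P implies Q':
# the premise is (number1, operation, number2), the conclusion is number3.
ATOMIC_FACTS = {
    (2, "+", 7): 9,
    (8, "-", 6): 2,
    (4, "*", 3): 12,
    (81, "/", 9): 9,
}


def modus_ponens(rules, premise):
    """Given rules of the form 'P implies Q' and an asserted P, return Q.

    Returns None when no memorized rule has the premise as its antecedent.
    """
    return rules.get(premise)


# (2) we are given '4 * 3'; (3) by modus ponens we conclude 12.
print(modus_ponens(ATOMIC_FACTS, (4, "*", 3)))
```

On this reading, using an atomic fact is retrieval rather than calculation: if the premise isn't in the memorized table, nothing follows from it.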