New working paper posted. Title above, links, summary (abstract), TOC, and introduction below.

- Academia.edu: https://www.academia.edu/126773246/What_Miriam_Yevick_Saw_The_Nature_of_Intelligence_and_the_Prospects_for_A_I_A_Dialog_with_Claude_3_5_Sonnet

- SSRN: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=5081013

- ResearchGate: https://www.researchgate.net/publication/387694422_What_Miriam_Yevick_Saw_The_Nature_of_Intelligence_and_the_Prospects_for_AI_A_Dialog_with_Claude_35_Sonnet

Claude’s Summary

1. Miriam Yevick's 1975 work proposed a fundamental distinction between two types of computing: A) Holographic/parallel processing suited for pattern recognition, and B) Sequential/symbolic processing for logical operations. Crucially, she explicitly connected these computational approaches to different types of objects in the world.

2. This connects to the current debate about neural vs. symbolic AI approaches: A) The dominant view suggests brain-inspired (neural) approaches are sufficient. B) Others argue for neuro-symbolic approaches combining both paradigms, and C) Systems like AlphaZero demonstrate the value of hybrid approaches (Monte Carlo tree search for game space exploration, neural networks for position evaluation).

3. The 1988 Benzon/Hays paper formalized “Yevick's Law” showing: A) Simple objects are best represented symbolically, B) Complex objects are best represented holographically, and C) Many real-world problems require both approaches.

4. This framework helps explain: A) Why Chain of Thought prompting works well for math/programming (neural system navigating symbolic space), B) Why AlphaZero works well for chess (symbolic system navigating game tree, neural evaluation of positions), and C) The complementary relationship between these approaches.

5. These insights led to a new definition of intelligence: “The capacity to assign computational capacity to propositional (symbolic) and/or holographic (neural) processes as the nature of the problem requires.”

6. This definition has implications for super-intelligence: A) Current LLMs' breadth of knowledge doesn't constitute true super-intelligence, B) Real super-intelligence would require superior ability to switch between computational modes, and C) This suggests the need for fundamental architectural innovations, not just scaling up existing approaches.

The conversation highlighted how Yevick's prescient insights about the relationship between object types and computational approaches remain highly relevant to current AI development and our understanding of intelligence itself.

CONTENTS

Introduction: Speculative Engineering 2

Yevick’s Idea 2

Why Hasn’t Yevick’s Work Been Cited? 4

From Claude to Speculative Engineering 5

What’s in the Dialog 6

Attribution 7

From Holographic Logic 1975 to Natural Intelligence 1988 8

Yevick’s Holographic Logic 8

Natural Intelligence 13

Chain of Thought 16

Chain of Thought Is the Inverse of the AlphaZero Architecture 17

Define Intelligence 18

Implications of My Proposed Definition of Intelligence 20

Summary 22

Claude Reviews Yevick’s Full 1975 Text 25

Generalizing Yevick’s Results 26

Explaining Yevick to Non-Experts 28

Uzbeki Peasants Have Trouble With Simple Geometric Objects 31

Why Yevick’s Work Has Been Neglected 33

Introduction: Speculative Engineering

In the course of the dialog with Anthropic’s Claude 3.5 about artificial intelligence I propose a novel definition:

Intelligence is the capacity to assign computational capacity to propositional (symbolic) and/or holographic (neural) processes as the nature of the problem requires.

Compare this with a version of the conventional definition:

Intelligence is the ability to learn and perform a range of strategies and techniques to solve problems and achieve goals.

Those definitions are very different. My proposal suggests specific mechanisms while the conventional definition does not. While my proposal must be considered speculative, it can be used to guide research (that’s what speculation is for). In fact it can guide two research programs: 1) a scientific program about the mechanisms of human intelligence, and 2) an engineering program about the construction of A.I. systems. The conventional definition is probably correct, in some sense. But it provides very little guidance for any research. Its role is primarily rhetorical, suited to general or philosophical discussions of artificial intelligence.

Which is preferable, a definition that is speculative and useful in guiding research, or one that is (weakly) correct but of little value in guiding research?

This is the issue that hovers over the dialog I have constructed with Claude.

I will get around to explaining why I’ve consulted Claude soon enough. But first I want to talk about where that definition of intelligence came from. The words are mine, but the basic idea is not.

Yevick’s idea

The idea belongs to a mathematician, the late Miriam Yevick. Consider this remark that Yevick published in 1978:

If we consider that both of these modes of identification enter into our mental processes, we might speculate that there is a constant movement (a shifting across boundaries) from one mode to the other: the compacting into one unit of the description of a scene, event, and so forth that has become familiar to us, and the analysis of such into its parts by description. Mastery, skill and holistic grasp of some aspect of the world are attained when this object becomes identifiable as one whole complex unit; new rational knowledge is derived when the arbitrary complex object apprehended is analytically described.

She thought of one of those computational modes as holographic and the other as logical or sequential. She regarded both as essential to human mentation. Yevick analyzed those two modes in mathematical detail in a 1975 article that I used to begin my discourse with Claude (p. 8 below). David Hays and I employed those modes in the 1988 article that I quote from later in the dialog (p. 13) and in a more informal article (about metaphor) that we published at roughly the same time. Though they use different terms, Bengio, LeCun, and Hinton recognize the same distinction in their Turing Award paper.

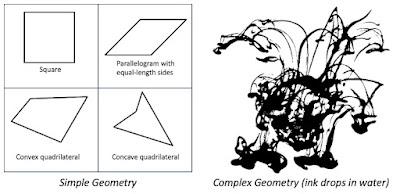

But Yevick’s distinction entails something that Bengio, LeCun, and Hinton do not talk about. She regards the holographic mode as best suited for dealing with one kind of object, one that is geometrically complex, and the logical mode as best suited for a different kind of object, one that is geometrically simple. Now, in the passage I’ve quoted above Yevick has generalized from the visual mode, which she treated in her 1975 paper, to mental processes in general. Hays and I do so in our 1988 article as well. As far as I know, the mathematics for that generalization has yet to be done, which is one reason I am trying to bring attention to Yevick’s work.
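The contrast between the two modes can be made concrete with a toy sketch. It is not Yevick’s mathematics: the patterns, the names (`texture_a`, `texture_b`, `is_horizontal_bar`), and the rule are all hypothetical, chosen only for illustration. An irregular pattern is identified in one global step by correlating it against stored wholes, while a geometrically simple object is identified by a short explicit rule.

```python
def correlate(a, b):
    """Inner product of two equal-length patterns: a single global comparison."""
    return sum(x * y for x, y in zip(a, b))

def identify_holistically(probe, stored):
    """Holistic-style identification: pick the stored pattern that
    correlates most strongly with the probe, with no analysis into parts."""
    return max(stored, key=lambda name: correlate(probe, stored[name]))

def is_horizontal_bar(grid):
    """Descriptive-style identification: a short rule stated over parts.
    True when exactly one row is fully on and every other row is fully off."""
    full_rows = sum(1 for row in grid if all(cell == 1 for cell in row))
    empty_rows = sum(1 for row in grid if all(cell == 0 for cell in row))
    return full_rows == 1 and full_rows + empty_rows == len(grid)

# Two irregular +1/-1 patterns standing in for geometrically complex objects
# (hypothetical data, not taken from any paper).
stored = {
    "texture_a": [1, -1, -1, 1, 1, 1, -1, -1, -1, 1, -1, 1, 1, -1, 1, 1],
    "texture_b": [-1, -1, 1, 1, 1, -1, 1, -1, 1, 1, -1, -1, -1, 1, 1, -1],
}

# A degraded copy of texture_a: two of its sixteen elements are flipped.
probe = list(stored["texture_a"])
probe[0] = -probe[0]
probe[5] = -probe[5]
match = identify_holistically(probe, stored)

# A geometrically simple object, and a near miss that breaks the rule.
bar = [[0, 0, 0, 0], [1, 1, 1, 1], [0, 0, 0, 0]]
not_bar = [[0, 1, 0, 0], [1, 1, 1, 1], [0, 0, 0, 0]]
bar_found = is_horizontal_bar(bar)
near_miss_found = is_horizontal_bar(not_bar)
```

Here `match` is `"texture_a"` despite the damage to the probe, while the rule accepts `bar` and rejects `not_bar`. Writing an explicit rule for the irregular textures would take far more than three lines, which is the intuition behind pairing each mode with a different kind of object.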

If Yevick is correct, then the current debate we are having about how to proceed is ill-posed. As far as I can tell, the majority view is that scaling up machine learning procedures will prove sufficient for achieving human-scale intelligence (not to mention super-intelligence). Gary Marcus, Subbarao Kambhampati, and various others have been arguing that, no, we also need symbolic (logical) mechanisms. Yevick’s work comes down on this side of the debate as well, and contributes to it by specifying that the two modes are each suited to a different aspect of the world. That would naturally lead to a discussion about the nature of the world, but that is beyond the scope of this document.

In view of the importance of this debate, such a discussion does not seem unreasonable. It is not only that billions of dollars are currently being wagered (and that is what it is, gambling), but that they are being wagered on technology that may well bring about fundamental changes in the way we live. Can we not take a step back and think about what we are doing?

Why hasn’t Yevick’s work been cited?

For the past few years, at least since the release of GPT-3, I have been looking for references to Yevick’s work in the current literature. I’ve not found any. Why not?

I have a specific reason to expect that her research would be cited. AlexNet’s success in the 2012 ImageNet Large Scale Visual Recognition Challenge is generally regarded as the breakthrough moment when artificial neural networks came of age. That’s when people knew neural nets held great promise. AlexNet uses a convolutional neural net (CNN) architecture and thus shares its mathematical underpinnings with Yevick’s work, Fourier analysis. Given that connection, why doesn’t anyone seem to know about her work?

Fourier analysis, of course, is quite common. That in itself is no reason to refer to Yevick’s work. But her work dealt with the fundamental nature of visual pattern recognition, of perception and cognition, that makes it relevant. Yet, if the research has advanced without reference to her ideas, why would I think them at all relevant? I began this document with an answer to that question: Her ideas give us a way to formulate an explicit definition of what intelligence is.
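The link between convolution and Fourier analysis can be checked numerically. The sketch below illustrates the textbook convolution theorem (circular convolution equals a pointwise product in the Fourier domain); it is not drawn from AlexNet or from Yevick’s paper, and the signal and kernel values are arbitrary assumptions. Note that CNN layers typically compute cross-correlation with learned kernels, a closely related operation.

```python
import cmath

def dft(x):
    """Naive discrete Fourier transform (O(n^2), for illustration only)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(X):
    """Inverse of dft above."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]

def circular_convolve(signal, kernel):
    """Direct circular convolution of two equal-length sequences."""
    n = len(signal)
    return [sum(signal[(t - s) % n] * kernel[s] for s in range(n))
            for t in range(n)]

def convolve_via_fourier(signal, kernel):
    """Same result by multiplying the two spectra and transforming back."""
    product = [a * b for a, b in zip(dft(signal), dft(kernel))]
    return [value.real for value in idft(product)]

# Arbitrary example data; the kernel is a small smoothing filter, zero-padded.
signal = [1.0, 2.0, 0.0, -1.0, 3.0, 0.5, 0.0, 2.0]
kernel = [0.5, 0.25, 0.0, 0.0, 0.0, 0.0, 0.0, 0.25]

direct = circular_convolve(signal, kernel)
fourier = convolve_via_fourier(signal, kernel)
max_difference = max(abs(a - b) for a, b in zip(direct, fourier))
```

The two routes agree up to floating-point rounding, so `max_difference` is vanishingly small. In practice one would use a fast Fourier transform rather than this naive version.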

How long can we go without such an understanding? Alas, I fear we can go on, and on, and on. How many times will we pass the same tree before we figure out that we’re traveling in circles?

Let’s set that aside for the moment. It’s not simply that researchers in machine learning don’t seem to know about her work. No one does, not even those with a more abstract and theoretical intellectual program.

I can think of three reasons why her work is unknown: 1) David Hays and I have over-valued her work. What I’ve said in the opening two sections of this document is somewhere between misleading and wrong. 2) Her work is valuable, but everyone else is blind. While it is tempting to give some credence to that explanation, I don’t see that it is a very productive way to think. Nor can I bring myself to believe it. I think there is a more plausible account: 3) Her work simply got lost.

Since I explore this possibility with Claude 3.5 at the very end of this document (pp. 33 ff.), I won’t say much about it here. The basic story is simple. On the one hand, she published her crucial paper in 1975, which was the peak of symbolic AI; that’s the year Newell and Simon won the Turing Award. Very few people would have been prepared to listen to it. Furthermore, she couched her ideas in terms of an optical medium, holography, that was fairly new at the time and, though it had given rise to quite a bit of speculation, was of no practical use in AI. Finally, work in artificial neural nets was still in its infancy. It wasn’t until the early 1980s that backpropagation would be developed in a practical way.

From Claude to speculative engineering

I can’t continue her work myself. While I have fairly sophisticated mathematical intuitions, I don’t have the technical skill to do that. When I was working with David Hays, he supplied the mathematical skill that I lacked. Hays is no longer available to me, nor, for that matter, are Walter Freeman or Tim Perper, my other mathematical colleagues. They have all died.

That leaves me with Claude, and Claude does have, in some sense, more mathematical skill than I’ve got. Moreover, Claude has read (for some nontrivial sense of that word) far more than I or any one person ever has or ever will. Claude thus brings a broad range of concepts and ideas to the discussion. Claude, it seems to me, can help define the issues and lay out a map, pointing out where the dragons lie in wait.

Such an enterprise must necessarily be speculative. The ideas must be held in epistemological suspension. In the preface to my book on music, Beethoven’s Anvil (2001), I characterized my method as speculative engineering:

Engineering is about design and construction: How does the nervous system design and construct music? It is speculative because it must be. The purpose of speculation is to clarify thought. If the speculation itself is clear and well-founded, it will achieve its end even when it is wrong, and many of my speculations must surely be wrong. If I then ask you to consider them, not knowing how to separate the prescient speculations from the mistaken ones, it is because I am confident that we have the means to sort these matters out empirically.

It is in the spirit of speculative engineering that I undertook a series of dialogs with Claude, seven sessions over a period of six days in December (22-27). All of the sessions have been linked into the same context, making it one long discussion.

What’s in the dialog

I begin the discussion with Yevick’s 1975 paper, “Holographic or Fourier logic,” and ask Claude to compare her ideas with current approaches. Then I introduce the Turing Award paper that Bengio, LeCun, and Hinton published in 2021. After discussing the relationships between the two papers I introduce AlphaZero as an example. At that point I bring in a paper on natural intelligence that David Hays and I published in 1988. We were particularly concerned about objects that seemed to fall between the two modes, such as natural language. A bit later I bring up Chain of Thought, which Claude observed was quasi-symbolic (my term). One issue that gets woven into the discourse at various points is sheer computational capacity and how it affects the two computational modes.

It is only then that I introduce my (tentative) definition of intelligence and compare it with the more-or-less standard definition. We conclude by veering off into super-intelligence. I then ask Claude to summarize the discussion up to this point. I’ve presented that summary on the first page of this document as the abstract.

There are, however, three more sections in the dialog. Once I’d realized that Claude had been working without a full text of Yevick’s 1975 paper, I uploaded it and asked Claude to review the previous discussion. Claude seemed particularly impressed with Yevick’s use of Gödel patterns, but was satisfied that our main discussion still held up.

The last two sections are of a somewhat different nature. In the first I’m asking Claude for help in explaining Yevick’s ideas to people with even less mathematical intuition than I’ve got. This naturally serves as a check on my own thinking. In the last section I bring up a study that A. R. Luria had made of the cognitive capacities of Uzbeki peasants in the 1930s. One of the things he found is that they had difficulty in identifying and working with simple geometric shapes. Claude suggested that was because holographic computation has trouble dealing with simple geometric objects.

Attribution

There is one final issue, which is about the nature of this document, not what it says. Since Claude has more words in this document than I do, why am I the sole author? There is an obvious answer: Claude is a computer while I am a human being. I’m not sure how much longer such an answer will serve. Claude’s role in this document is, however, considerably more substantial than the role played by word-processing software. As a practical matter, however, I can take intellectual responsibility for this document in a way that Claude cannot. That’s why I am the sole author.

Surely the day will come when matters aren’t so simple. I would like to think that Miriam Yevick’s ideas will play a role in bringing that day about. When that happens, Claude’s successors will be able to take full responsibility for intellectual work.