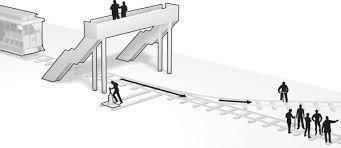

You are a bystander seeing a runaway trolley, about to hit and kill five people. You can grab a switch and reroute it to a different track where it will kill only one person. Should you? Most people say yes. But suppose you’re on a bridge, and can save the five lives only by pushing a fat man off the bridge into the trolley’s path. Should you? Most say no. Or suppose you’re a doctor with five patients about to die from different organ failures. Should you save them by grabbing someone off the street and harvesting his organs? Aren’t all three cases morally identical?

Our intuitive moral brain treats them differently. Pushing the man off the bridge, or harvesting organs, seems to contravene an ethical taboo against personal violence that the impersonal act of flipping the switch does not.* (This refutes the common idea that humans have a propensity for violence. Ironically, those who believe it may do so because their own built-in anti-violence brain module is set on high.)

Greene argues for a version of utilitarianism (he calls it deep pragmatism). Now, utilitarianism has a bad rep in philosophy circles. Its precept of “the greatest good for the greatest number” is seen as excluding other valid moral considerations; e.g., in the trolley and doctor situations, the rights of the one person sacrificed, and Kant’s dictum that people should always be ends, never means.

Greene’s line of argument (identical to mine in The Case for Rational Optimism) starts with what he deems the key question: what really matters? You can posit a whole array of “goods” but upon analysis they all actually resolve down to one thing: the feelings of beings capable of experiencing feelings. Or, in a word, happiness.

But in any case, nothing ultimately matters except the feelings of feeling beings, and every other value you could name has meaning only insofar as it affects such feelings. Thus the supreme goal (if not the only goal) of moral philosophy should be to maximize good feelings (or happiness, or pleasure, or satisfaction) and minimize bad ones (pain and suffering).

A common misunderstanding is that such utilitarianism is about maximizing wealth. But while, all else equal, more wealth does confer more happiness, all else is never equal, and happiness versus suffering is much more complex. Some beggars are happier than some billionaires. The “utility” that utilitarianism targets is not wealth; money is only a means to an end, and the end is feelings.

This is what “the greatest good for the greatest number” is about. Jeremy Bentham, utilitarianism’s founding thinker, imagined assigning a point value to every experience. This is not intended literally; but if you could quantify good versus bad feelings, then the higher the score, the greater the “utility” achieved, and the better the world.

Meantime, if X is willing to sacrifice himself for what he thinks is the greater good, that’s fine; but if X is willing to sacrifice Y for what X thinks is the greater good, that’s not fine at all.

Thus, a true real-world utilitarianism incorporates the kind of inviolable human rights that protect people from being exploited for the supposed good of others – because that truly does maximize happiness, pleasure, and human flourishing, while minimizing pain and suffering.

* “Trolleyology” is big in moral philosophy precincts. For another slant on it, see an article in The Economist’s latest issue.