“There is little chance that there would be a corona patient in our country if such measures are taken …”

We are still surprised by the lack of drive shown by governments and health authorities, and by the nonchalance of the population, after the coronavirus broke out in China. Our hypothesis is that a lack of thinking skills, shown by governments, politicians, health institutions, and the population in nearly every country in Europe and in the United States, contributed seriously to the spread of the coronavirus.

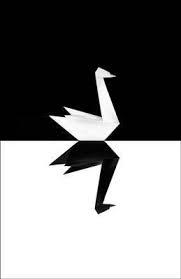

In this post, we substantiate our hypothesis using the concept of Black Swan events, as introduced in Nassim Nicholas Taleb's book The Black Swan (second edition, 2010). In it, he discusses opacity, luck, uncertainty, probability, human error, risk, and decision-making in a world we don't understand. Taleb is a mathematical statistician and risk analyst.

For a long time, it was believed that black swans did not exist, because none had ever been seen. By a Black Swan event, we mean an event that has never happened before and whose impact, seriousness, and scale we cannot foresee. As a result, we have no ready-made responses based on past experience.

We believe that if governments, politicians, health care authorities, and the media had known about the Black Swan concept, their approach to tackling the outbreak would have been much more effective.

If we consider the spread of the virus a Black Swan phenomenon, what does that say about the robustness of the health system, the logistics chains, and the suppliers? Could knowledge of Black Swan phenomena have led to an alternative approach?

The Black Swan theory

A “Black Swan” is an outlier: it lies outside the realm of regular expectations, because nothing in the past can convincingly point to its possibility. It has three characteristics:

- The event is a surprise (to the observer).

- The event has a major effect.

- After the first recorded instance of the event, it is rationalized by hindsight, as if it could have been expected; that is, the relevant data were available but unaccounted for in risk mitigation programs. The same is true for personal perception by individuals.

The COVID-19 crisis: an “expected” Black Swan?

The coronavirus has had an extreme impact in terms of human lives, social dislocation, and economic losses. But is the emergence of such a dangerous virus really an unpredictable outlier that suddenly swooped in from outside our “regular expectations”?

Security services warned as early as 2016 that a pandemic would cause serious shortages of intensive care beds.

Many people could easily cite several dangerous global outbreaks from the past two decades: SARS in 2002-2003, H1N1 in 2009, and the West African Ebola outbreak of 2014-2016.

Contagion, a film released in 2011, depicts a fictional pandemic of a virus called MEV-1, which kills between 25 and 30 percent of those it infects. The origin of the virus in the film even echoes what many scientists think happened with the coronavirus.

Microsoft co-founder Bill Gates said he had raised the issue of a “large and lethal” pandemic back in 2018. In the same year, the head of the World Health Organization warned: “A devastating epidemic could start in any country at any time and kill millions of people because we are still not prepared.”

“The pandemic was both predictable and predicted. Yet it took most of the world by surprise. Governments and businesses alike were caught underprepared – both for the health crisis and the resulting social and economic fallout.” WBCSD, 2020

Many people went into voluntary quarantine weeks before the government ordered it, because they anticipated the spread of the outbreak from China and judged that the institutions were not responding adequately. They intuitively expected a Black Swan; governments, health care organizations, politicians, and the media did not.

In the weeks before the major outbreak in Europe and the US, there was still time to stock up on protective equipment, to scale up laboratory capacity, to expand the purchase and production of test materials, and to prepare for collecting antibody-rich serum from recovered patients. None of this happened.

In some circles, then, the coronavirus was by no means unexpected, yet measures consistent with the character of a Black Swan event were not taken. In such a situation, relying on data and verified scientific models is impossible by definition. It therefore seems better to take so-called robust measures: measures that acknowledge fundamental uncertainty and reduce vulnerability, or fragility, to it.

Robustness in complex systems

In Economyths: How the Science of Complex Systems Is Transforming Economic Thought (2010), David Orrell proposed several principles for making a complex system more robust:

- Modularity: the degree to which a system’s components may be separated and recombined.

- Redundancy: the duplication of critical components or functions of a system with the intention of increasing reliability of the system, usually in the case of a backup or fail-safe.

- Diversity: a degree of diversity in a system can help it adapt to change.

- Controlled shut-down: if entities are damaged beyond repair, they must be separated from the system before the damage spreads to the whole.

Space shuttles, nuclear plants, railway networks, and electric power distribution networks are all designed for robustness. When a calamity occurs, subsystems are shut off to isolate them and to prevent errors from rippling through the whole system. These systems are modular and often triple-redundant.
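To make the effect of redundancy concrete, here is a minimal Python sketch. It assumes, hypothetically, that each copy of a critical component fails independently with the same probability `p`, and that the function keeps working as long as at least one copy works. Under that assumption, the function is lost only when all copies fail, which happens with probability p to the power of the number of copies. The name `failure_probability` and the value `p = 0.01` are illustrative choices, not taken from any real system, and the sketch deliberately ignores common-mode failures (one cause knocking out every copy at once), which in practice limit what redundancy can achieve.

```python
def failure_probability(p: float, copies: int) -> float:
    """Probability that all `copies` redundant units fail.

    Hypothetical model: each unit fails independently with probability p,
    and the system keeps working while at least one unit still works.
    Common-mode failures (one cause disabling every copy) are ignored.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability between 0 and 1")
    if copies < 1:
        raise ValueError("at least one unit is needed")
    return p ** copies


if __name__ == "__main__":
    p = 0.01  # assumed failure probability of a single unit
    for n in (1, 2, 3):
        print(f"{n} unit(s): system failure probability {failure_probability(p, n):.0e}")
```

With the assumed `p = 0.01`, going from one unit to three lowers the failure probability from 1 in 100 to roughly 1 in a million. That is the intuition behind triple redundancy, bought at the price of keeping three units where an efficiency-minded design would keep only one.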

Why, then, are other critical systems, such as socio-economic ones, not designed for robustness?

Robustness in the Health Care System

Pandemics are clearly unpredictable in their severity, yet a robust health service has to be prepared for different types of emergencies. Could it have been prepared for a virus that spreads as fast as the coronavirus and causes such a broad range of symptoms, from almost none to severe breathing problems?

Panic and adapting

Many health services and care providers are clearly organized to the limits of their capabilities. Lack of funding has meant that few resources have been spent on building robustness against calamities such as a pandemic.

When information is lacking, as it is about a new virus, it is wise to build some redundancy into the system. During the coronavirus emergency, however, the opposite approach was taken. This may not have been a conscious decision: people in leading positions panicked and adopted an approach of surviving one day at a time, with little thinking or planning for the next day or week.

Efficiency, not robustness

Balancing care, health, and well-being against the wish to spend as little money as possible is tricky. Lack of funding has been a problem in many countries. Long A&E (emergency department) waiting times and intensive care units struggling to admit critically ill patients show that services were already running high risks with respect to any unexpected event. Years of funding cuts for health services and care homes meant that little money was spent on preparing workers for a severe crisis such as a pandemic. The lack of a back-up plan for the coronavirus outbreak, together with a lack of advice and support from the government, meant that hospitals, already understaffed, lacked the capacity to deal with the increased demand.

The crisis exposes the design errors that have crept into our systems. One example is excessive efficiency thinking, in which we try to optimize everything at the lowest possible cost. As a result, we have no stocks or buffers to absorb a shock like this, while supply chains grow ever longer and carry ever more risk.

Vendor and country lock-in

Many laboratories work with machines of a particular brand and therefore depend on the test materials supplied by that pharmaceutical company. When those materials run short, the shortage is not easy to solve because of so-called vendor lock-in: whoever uses the company's machines must also use its materials.

Face masks, respirators, aprons, test materials, even IV supplies: the list of medical equipment in short supply is long. We depend on countries such as China and India for many of these products, and because of globalization there are now far too few of them available. Only hesitant attempts have been made to set up domestic production of critical goods.

Robustness in tackling the corona outbreak

Diversity, controlled shut-down, and modularization. There is not just one ‘scientific’ approach to dealing with COVID-19. Different countries are responding in different ways. Singapore, Hong Kong, South Korea, Germany, and New Zealand each provide different examples of how to limit the initial spread of the virus, with different policy mixes. That is the best way to learn quickly in times of huge uncertainty, when explanatory models and reliable data about the effects of the measures taken are lacking.

This is at odds with the widely held view that, for example, Europe should have adopted a unified approach.

Modularization and lock-down. By compartmentalizing a system into subsystems (province, city, district, home) and completely isolating them from the larger system, a crisis (a fire, an infection, an electricity failure, a burst water pipe) can be contained. In some countries, compartmentalization as a response to the coronavirus has been considered only half-heartedly.

Differentiate by time, place, and age. Measures are often applied to a whole country and to the entire population. But if research shows that young people are least at risk, cafés and restaurants could reopen for young people first. Or gyms could reopen, admitting only young people at busy times. It is also possible to look much more regionally: the situation in one area may differ from that in another, and measures can be relaxed (or tightened) accordingly.

Differentiating by time, place, and age would also reveal whether, for example, increases in infections are related to special circumstances in a particular location or sector, which could provide valuable insights.

Diversity and redundancy. Across the world, scientists have created epidemiological models based on the little we know about COVID-19. Small changes in the assumptions made by the modelers can have large effects on their estimates and implications. By letting models compete, we can find out which assumptions are debatable. That argues for more diversity and redundancy in models, as well as in national and international health organizations. The crisis has harshly demonstrated that those institutions can be dead wrong in their scientific opinions.
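The claim that small changes in assumptions can have large effects can be illustrated with the simplest textbook epidemic model, the SIR (susceptible, infected, recovered) model. This is not one of the models referred to above; it is a hypothetical, minimal Python sketch whose parameter values (population size, recovery rate `gamma`, initial number of infections, the two transmission rates `beta`) are made up purely for illustration and not fitted to COVID-19. It integrates the SIR equations with simple Euler steps and compares the epidemic peak for two assumed transmission rates that differ by 20 percent.

```python
def sir_peak(beta: float, gamma: float = 0.1, population: int = 1_000_000,
             initial_infected: int = 10, days: int = 365, dt: float = 0.1):
    """Return (peak number infected, day of the peak) for a basic SIR model.

    All parameter values are illustrative assumptions, not fitted to COVID-19.
    beta: transmission rate per day; gamma: recovery rate per day.
    """
    s = float(population - initial_infected)  # susceptible
    i = float(initial_infected)               # currently infected
    peak, peak_day = i, 0.0
    for step in range(1, int(days / dt) + 1):
        # Forward Euler step of ds/dt = -beta*s*i/N and di/dt = beta*s*i/N - gamma*i
        new_infections = beta * s * i / population * dt
        new_recoveries = gamma * i * dt
        s -= new_infections
        i += new_infections - new_recoveries
        if i > peak:
            peak, peak_day = i, step * dt
    return peak, peak_day


if __name__ == "__main__":
    # Two assumptions that differ by 20%: R0 = beta / gamma = 2.5 versus 3.0.
    for beta in (0.25, 0.30):
        peak, day = sir_peak(beta)
        print(f"beta={beta:.2f}: peak of {peak:,.0f} infected around day {day:.0f}")
```

For these assumed values, the standard SIR peak formula, 1 - (1 + ln R0) / R0 as a fraction of the population, gives about 23 percent infected at the same time for R0 = 2.5 and about 30 percent for R0 = 3.0, and the simulated peak also arrives earlier for the higher value. A 20 percent change in one assumed parameter thus shifts both the size and the timing of the expected burden on hospitals. Running several models side by side, with different structures and assumptions, is one way to expose which assumptions drive the conclusions.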

Robustness and scientific advice

claim many governments. In doing so, they deny that political choices have to be made, and they avoid responsibility.

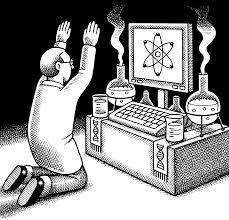

We started this series by arguing that the semi-scientific approach most governments adopted is better described as scientism: a cosmetic application of science to situations that are not amenable to the scientific method or similar scientific standards.

Read the following statements by government health officials critically and relate them to the concept of robustness (our opinion is in italics).

- It has not been demonstrated that transmission by aerosols plays a role in the spread of SARS-CoV-2. Therefore, based on current insights, adjustments to ventilation systems are not necessary… *In our opinion, this is typical scientific reasoning: if a hypothesis cannot be verified or falsified, it is treated as untrue. However, there can be many reasons why a hypothesis can be neither proven nor rejected. Under this uncertainty, a robust measure, such as increasing ventilation or raising the air temperature, would be more appropriate. At the same time, start rigorous research into the relation between ventilation and COVID-19 outbreaks, rather than waiting for the truth to be established.*

- The risk of infection at school is smaller than that of a traffic accident outside school… *Why should you, as a parent, take an extra risk with possibly deadly consequences? Primitive cultures would never accept low-probability, high-consequence risks.*

- In retrospect, we see that the virus behaves differently than we knew back then… *The virus does not behave differently; the models did not reflect its behavior correctly. This is what is called “rationalization by hindsight.”*

- Although more transmission is expected among primary school children and their parents, this probably does not lead to many additional ICU admissions… *Typical statistical reasoning, but why take the risk instead of taking a robust measure?*

- The National Health Organization simply says that it does not have enough data to determine whether this measure is effective, so it does not prescribe it… *This is typical scientific reasoning, not aimed at creating antifragility. Test the measure extensively, since hospitals have already introduced it on the basis of practical experience and common sense. The measure may itself be a Black Swan: never seen before and therefore not yet proven, but nevertheless effective.*

To conclude

For Black Swan-like events, it seems more sensible to design robust measures than to rely on scientific models that are inherently based on previous events and therefore do not apply. Worse, such models give a misplaced feeling of being in control.

Next post: Groupthink

We assume that a lack of basic thinking skills underlies the initial lax attitude of the authorities and what we believe to be an inappropriate approach thereafter.

Has there never been constructive criticism, or even opposition, that might have led to a faster, more practical policy? Or has there been pressure for harmony or conformity in the state's decision-making and advisory committees that resulted in irrational or dysfunctional decisions? Have policymakers ever taken measures to prevent group conformity and group obedience? And what has been the role of the media?

Related posts

- Black Swan Robustness. How to cope with improbable and potentially devastating events, not through forecasting, prediction, and reliance on statistical data, but by adhering to the concept of ‘robustness’ in the face of potentially devastating systemic fragility. Systemic fragility refers to the risks imposed by interlinkages and interdependencies in a system, where the failure of a single entity or cluster of entities can cause a cascading failure that could bring down the entire system.

- Mainstream Thinking about Designing Systems. Many technical systems, as well as economic and organizational systems, are organized to the limits of their capabilities. There are no redundancies; all components are trimmed to the bare bones. There is no back-up or fail-safe. It is efficient. This is mainstream design thinking. It is based on the idea that we know exactly what the system does in all circumstances. With complex systems, however, this is seldom the case.

- Robustness in Networks. To make the economy more robust, David Orrell proposes in his book Economyths: How the Science of Complex Systems Is Transforming Economic Thought four principles: modularity, redundancy, diversity, and controlled shut-down.

- Training in Economics is a Serious Handicap. Economics gains its credibility from its association with hard sciences like physics and mathematics. Fundamentally, it is a mechanistic approach to modelling complex systems, dating back to Newton's theories about the behavior of independent particles in timeless and unchanging conditions. In the 19th century, when neoclassical economics was invented, the assumption of stability was required because the equations could not have been solved with the mathematical tools then available.