There are three doors. Behind one is a big prize, a car, and behind the two others are goats. The contestant first picks one door without opening it. Then the game show host tells you that he will open one of the other two doors behind which there is a goat. It is assumed (but often untold) that the host knows where the car is, and so deliberately opens a door with a goat behind it. It is also assumed that the contestant wants to win the car, but let me tell you already that if you really want a goat, you can be sure to get one. Then the contestant is given the choice of choosing again. Since there are now two closed doors, the choices are to stay on the door first chosen or to switch to the only other closed door. The question is what the best strategy is, switching or staying. Is the probability of getting the car 50% for staying and switching? No, it turns out that switching is the best strategy, giving the contestant a 2 in 3 chance of winning the car. Why? Well, there are many ways to realize this. My favorite is this. Once the contestant has chosen one door, we know that the chance the car is behind it is 1/3. Thus, the chance it’s behind one of the two others is 2/3. Because the host opens one of the two other doors, switching is equivalent to actually choosing two doors, giving a 2 in 3 chance of getting the car. There we go. Another way to arrive at this (correct) result is two actually do it many times over. Pick three cards, a red (the car) and two black (the goats), and have someone play the host. Do this say 100 times, and you’ll se that if you switch every time, you’l get in the neighborhood of 67 red car(d)s. However, I give it a pretty good chance that if you do this, then after playing a handful of times you’ll anyway realize that the solution of course is 2/3 for switching.

I once many years ago set out to write a code to do the experiment. I would write the code, run it a kadoolian times and expect to see the switching strategy win out two thirds of a kadoolian times. However, much to my surprise, when I started writing the code, if-statements and all, the solution became to glaringly obvious that I gave up doing it. It just seemed so futile at that point. I suspect that my hankering for using simulations to investigate problems was strongly influenced by this experience.

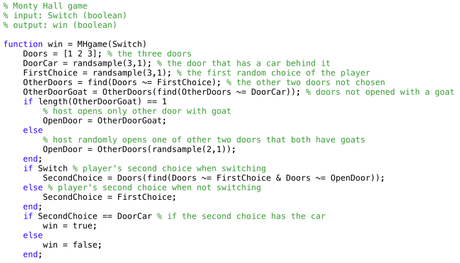

Recently I have introduced several people to the Monty Hall problem - always a great party trick. And again, almost everyone instantly arrives at 50/50, and are as adamant that this is the correct solution as the next guy. So I decided to finally write the code to do it, but made two stipulations: 1) the code must mirror the way the problem is told as closely as possible. This will not be the most efficient code to write or the fastest to execute, but will hopefully be easy for readers to see through, so to speak. And 2) rather than just playing the game many times, I will let a population of individuals play it and compete with each other for a chance to reproduce (aka evolution). For full disclosure, the code is included below.

The Monty Hall code

The evolutionary dynamics

The objective of the organisms is to come up with the best strategy for playing the Monty Hall game. Each organisms has just one “gene”, called MH01, and this gene determines the probability that when playing the game the organism chooses to stay or to switch. The value of MH01 is a number between 0 and 1. If the number of 0, the organism never switches door, and if it is 1, then the organism always switches, and if the value is, say, 0.4825, then there is a 45.25 percent chance that the organism switches door. Easy-peasy.

We do two experiments: 1) comparing two strategies (50% switching vs. 50% staying, or MH01=0 vs. MH01 = 1) without mutations, and 2) starting all individuals with MH01=0 and adding mutations to all offspring.

We start with a population of N=100 individual organisms. One organism is chosen at random among the N individuals to play the game. If it wins it gets to reproduce. In that case a copy of it now replaces one of the N organisms chosen at random (including its parent). Nothing happens if they lose the game. This results in a constant population size and overlapping generations. When an offspring replaces a hapless individual, the MH01 gene can mutate (in experiment 2). This results in MH01 increasing or decreasing by a small uniformly random value. And that’s it.

The evolved solution

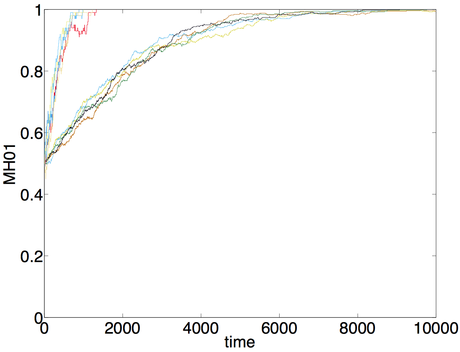

Figure 1: Starting with 50% switchers (MH01=1) and 50% stayers (MH01=0) all ten simulation runs result in the switchers outcompeting the stayers. Switchers win the Monty Hall game twice as often, and the population frequency of MH01=1 thus stochastically increases until it reaches 100% (fixation). Population size is N=100 (the five runs that reach a frequency of 1 after about 1,000 plays) and N=500 (five runs that take about 10,000 plays to go to fixation).

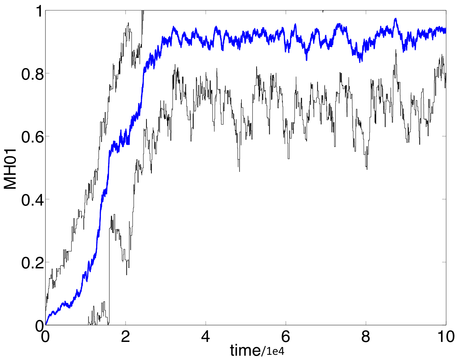

Figure 1: Starting with 50% switchers (MH01=1) and 50% stayers (MH01=0) all ten simulation runs result in the switchers outcompeting the stayers. Switchers win the Monty Hall game twice as often, and the population frequency of MH01=1 thus stochastically increases until it reaches 100% (fixation). Population size is N=100 (the five runs that reach a frequency of 1 after about 1,000 plays) and N=500 (five runs that take about 10,000 plays to go to fixation). Figure 2: Average population value of MH01 with large-effect mutations. Starting with 100% stayers (MH0=0). MH01 increases relatively fast, reaching the largest value after around 30,000 plays. The effect of mutations is uniformly distributed on the interval ±0.05 centered on the current value. Population size is N=100. The black lines are minimum (bottom) and maximum (top) values of MH01 in the population.

Figure 2: Average population value of MH01 with large-effect mutations. Starting with 100% stayers (MH0=0). MH01 increases relatively fast, reaching the largest value after around 30,000 plays. The effect of mutations is uniformly distributed on the interval ±0.05 centered on the current value. Population size is N=100. The black lines are minimum (bottom) and maximum (top) values of MH01 in the population.

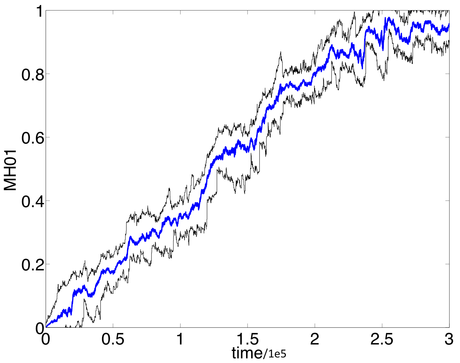

Figure 3: Average population value of MH01 with small-effect mutations. Starting with 100% stayers (MH0=0). MH01 increases relatively slowly, reaching the largest value after around 250,000 plays. The effect of mutations is uniformly distributed on the interval ±0.01 centered on the current value. Population size is N=100. The black lines are minimum (bottom) and maximum (top) values of MH01 in the population. There is less variation in MH01 in this case because the effect of mutations is lower than in the runs shown in figure 2.

Discussion

What a relief! When running simulations like this there is always the risk that for some unseen reason the evolved solution is different from what you anticipated. Thankfully that is not the case here: evolution favors the fittest organisms, and the fittest organisms are those that tend to switch when they play the game. Success!

Why does MH01 not go to fixation? In other words, why does the average population value of MH01 (blue lines) not become 1? The answer is that there is a mutational load (Wikipedia) caused by the effect of mutations. Offspring of a parent with MH01=1 has a 50% chance of having a lower value of MH01, so there will always be some individuals with a suboptimal value of MH01. What is increased in evolutionary dynamics is not fitness but free fitness. Free fitness is the sum of the logarithm of fitness and an entropy term that is non-zero when there is either mutation and/or drift. The term was introduced by Yoh Iwasa in 1988: Free fitness that always increases in evolution.

Let me reiterate that the answer of 2/3 chance to get the car when switching relies on the game host knowing where the car is, and deliberately opening a door that the contestant has not chosen and where there is a goat behind. If the host opens another door at random and there happens to be a goat behind it, then the probability of getting the car is only 50% for switching (and for staying). The probability is thus dependent on the knowledge that the contestant has about the knowledge and intent of the host. Only if the contestant knows that the host knows what he is doing does the chance increase by switching.

You can play the Monty Hall game here, where you can also play the version where the host does not know which door has the car.