Prior to World War II literary criticism centered on philology, shading over to editorial work in one direction and literary history in another. After the war criticism became more and more important, and by the 1960s it had become the focus of the discipline. But it was also becoming problematic. As more critics published about more texts it became clear that interpretations diverged.

What, if anything, are we to make of that? And so we have a couple decades of debate about intentionality, the author, multiple interpretations, and so on. That’s the context in which Johns Hopkins hosted the (in)famous structuralism conference in 1966. At the same time the cognitive science movement was becoming more and more visible, with linguistics, psycholinguistics and AI in the lead [1]. The 1960s also saw Mosteller & Wallace on the authorship of The Federalist Papers. And scholars in literature departments were actually curious about this stuff. I remember a brief conversation I had with the late Don Howard in my senior year at Hopkins. He was urging me to apply to the department for graduate study and, knowing of my interest in computers, mentioned computational stylometrics as something I might be interested in.

So when Jonathan Culler published Structuralist Poetics in 1975 he advocated a notion of literary competence based on Chomsky’s notion of linguistic competence. When Stanley Fish came up with the stillborn notion of affective stylistics (in a famous 1970 essay of that name) he called for “a method, a machine if you will, which in its operation makes observable, or at least accessible, what goes on below the level of self-conscious response.” Though he didn’t talk of computers that surely is what he had in mind. A bit later he would write explicitly, albeit critically, about computational stylometrics; but he was reading the stuff, as though it was important.

That was the mid-1970s context in which I went across the aisle at SUNY Buffalo and hung out with David Hays’s research group in computational linguistics – not merely linguistics, but computational linguistics. The computer! No one in the English Department thought that was at all strange; no one found computation threatening. Sure, why not, see what you can find!

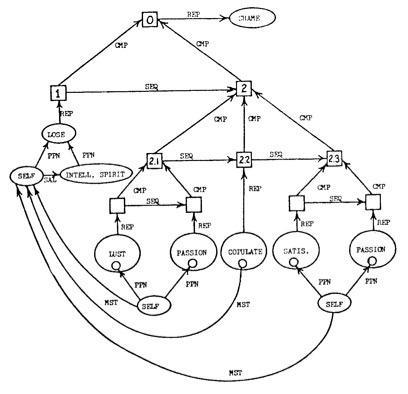

That’s when I published an essay in MLN that had technical diagrams like this:

Was it a bit strange and unusual? Of course. But no one was worried that I was threatening the integrity of humanistic discourse by thinking in those terms. It was all part of the big exploration.

And it’s not as though no one was skeptical about computing in those days. Hubert Dreyfus had published What Computers Can’t Do in 1972. Joseph Weizenbaum, who’d created the (in)famous ELIZA, published Computer Power and Human Reason in 1976. The end of the decade would see John Searle publish his well-known Chinese room argument. Skepticism, yes. But a threat to humanities discourse, not really.

And yet, as I’ve argued in 3 Quarks Daily, that’s also when things began to shut down. That’s when Geoffrey Hartman rejected “modern ‘rithmatics’—semiotics, linguistics, and technical structuralism.” That’s when, in “Lévi-Strauss and the Protocols of Distance” (Diacritics vol. 5, no. 3), Eugenio Donato ignored Lévi-Strauss’s analysis of myth in favor of meta-commentary on the relation between author and text. Things were in flux; contradictory currents were whirling about.

And when the decade was over, the profession rejected its dalliances with these other (more technical) disciplines and consolidated its conceptual forces around interpretation, all but accepting the inevitability, perhaps even the necessity, of multiple interpretations. Interpretation itself was no longer problematic. It had become merely a tool, ready to hand for whatever needed hammering. Linguistics no longer beckoned. Structuralism was now safely in the past. Literary criticism was well on its way to becoming Theory, and Theory to becoming Critique, a kind of transcendental discourse in a secular world.

And that, I think, is where we are today. Yes, digital criticism seems to be all over the place, but its use of computers is circumscribed. No one is talking of computation as a model for literary mentation, as I did back in the 1970s. At the same time, of course, digital criticism is also deeply problematic, hence the LARB hatchet-job last spring. And that’s why the uncomprehending reviewer of my essay at NLH could dismiss my “combination of diagrams and pattern tracing” as being “reminiscent of old school structuralism,” and everyone knows, don’t they? that that’s over and done with.

But old school structuralism isn’t the issue. The issue is how do we think about literature, about texts, minds, groups of people, and how they interact with one another. Is interpretive discourse all we need [2]? I don’t think so.

To recap: After World War II literary criticism devoted more energy to interpreting texts until that became the central focus of the discipline. As that happened, interpretation became problematic and the discipline became deeply self-conscious about methods and theories. In that context it was willing to look outward, not only toward other disciplines, but toward disciplines with a distinctly different, more obviously technical, intellectual style. That centrifugal movement reached its apogee in the 1970s even as a countervailing centripetal movement developed. The countervailing movement won and literary criticism became a continent unto itself.

* * * * *

[1] You might want to look at a brief chronology where I run cognitive science and literary theory in parallel, For the Historical Record: Cog Sci and Lit Theory, A Chronology, May 17, 2016, URL: http://new-savanna.blogspot.com/2011/03/for-historical-record-cog-sci-and-lit.html

[2] On that last question, see my recent post, Why is Literary Criticism done almost exclusively in discursive prose?, January 2, 2017, URL: http://new-savanna.blogspot.com/2017/01/why-is-literary-criticism-done-almost.html

This is an issue I’ve explored in a working paper, Prospects: The Limits of Discursive Thinking and the Future of Literary Criticism, November 2015, URL: https://www.academia.edu/17168348/Prospects_The_Limits_of_Discursive_Thinking_and_the_Future_of_Literary_Criticism