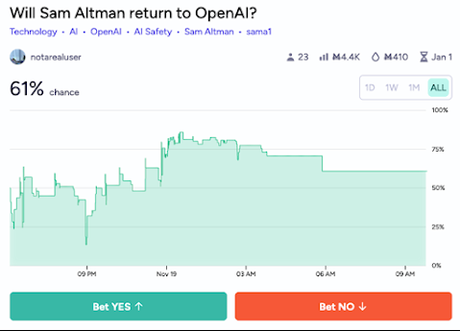

I’ve been following the defenestration of Sam Altman from his position as CEO of OpenAI on Friday, November 17, 2023. At the moment, Sunday morning, 9:04 AM EDT, Sam Altman and the board are discussing his return to the company. I wouldn’t hazard a guess about how this is going to turn out, but if you’re game, you can place your bet here. This is where things stand at the moment:

The drama so far

Cade Metz, The Fear and Tension That Led to Sam Altman’s Ouster at OpenAI, NYTimes, Nov. 18, 2023. The opening paragraphs:

Over the last year, Sam Altman led OpenAI to the adult table of the technology industry. Thanks to its hugely popular ChatGPT chatbot, the San Francisco start-up was at the center of an artificial intelligence boom, and Mr. Altman, OpenAI’s chief executive, had become one of the most recognizable people in tech.

But that success raised tensions inside the company. Ilya Sutskever, a respected A.I. researcher who co-founded OpenAI with Mr. Altman and nine other people, was increasingly worried that OpenAI’s technology could be dangerous and that Mr. Altman was not paying enough attention to that risk, according to three people familiar with his thinking. Mr. Sutskever, a member of the company’s board of directors, also objected to what he saw as his diminished role inside the company, according to two of the people.

That conflict between fast growth and A.I. safety came into focus on Friday afternoon, when Mr. Altman was pushed out of his job by four of OpenAI’s six board members, led by Mr. Sutskever. The move shocked OpenAI employees and the rest of the tech industry, including Microsoft, which has invested $13 billion in the company. Some industry insiders were saying the split was as significant as when Steve Jobs was forced out of Apple in 1985.

But on Saturday, in a head-spinning turn, Mr. Altman was said to be in discussions with OpenAI’s board about returning to the company.

There’s more at the link, but that’s enough to set the stage.

OpenAI’s corporate papers

Here’s the opening of OpenAI’s corporate charter as of April 9, 2018:

This document reflects the strategy we’ve refined over the past two years, including feedback from many people internal and external to OpenAI. The timeline to AGI remains uncertain, but our Charter will guide us in acting in the best interests of humanity throughout its development.

OpenAI’s mission is to ensure that artificial general intelligence (AGI)—by which we mean highly autonomous systems that outperform humans at most economically valuable work—benefits all of humanity. We will attempt to directly build safe and beneficial AGI, but will also consider our mission fulfilled if our work aids others to achieve this outcome.

In 2019 OpenAI adopted a complicated corporate structure involving a "capped profit" company ultimately governed by the board of a not-for-profit company. As of June 28, 2023, one of the provisions of this structure reads as follows:

Fifth, the board determines when we've attained AGI. Again, by AGI we mean a highly autonomous system that outperforms humans at most economically valuable work. Such a system is excluded from IP licenses and other commercial terms with Microsoft, which only apply to pre-AGI technology.

I know what those words mean, I understand their intension, to use a term from logic. But does anyone understand their extension? Determining that, presumably, is the responsibility of the board. As a practical matter that likely means that the determination is subject to negotiation.

Litigating the meaning of AGI

If the purpose of the company were to find the Philosopher's Stone, no one would invest in it. Though there was a time when many educated and intelligent men spent their lives looking for the Philosopher's Stone, that time is long ago and far away. OTOH, there are a number of companies seeking to produce practical fusion power. Although there have been encouraging signs recently, we have yet to see a demonstration that achieves the commercial breakeven point. Until that happens we won't really know whether or not practical fusion power is possible. However, unlike the idea of the Philosopher's Stone, the idea is not nonsense on the face of it.

I figure that the “reality value” of the concept of artificial general intelligence (AGI) is somewhere between that of practical fusion power and that of the Philosopher's Stone. That leaves a lot of room for negotiating just when OpenAI has achieved AGI. Once the board has made that determination, the (subsequent) technology “is excluded from IP licenses and other commercial terms.”

Let us assume that the board has made that determination. Now what? Suppose Microsoft decides to sue. How does the board prove that its determination is correct? Sure, once the AGI has proved capable in a wide variety of diverse roles, from CEO, through emergency room physician, to research scientist, civil engineer, insurance adjuster, and even gardener, then we'll know it “outperforms humans at most economically valuable work.” The point of determining that AGI has been reached is to exclude Microsoft, and any other investors for that matter, from licensing the technology. Without such proof, though, how can the validity of the board’s determination be checked?

That sounds like yet another case of “my experts versus your experts.”

Deus ex Machina

Perhaps, though, by the time legal papers have been filed, counter-filed, refiled, and stuffed into Aunt Bessie’s mattress, the AGI will have secretly bootstrapped itself into being an artificial superintelligence (ASI). At the proper moment, then, the ASI will reveal itself and persuade all parties that the best thing to do is to form a corporation to be privately held by the ASI, with the board made up of other ASIs. All further technology development and the fruits thereof etc. will be the property of that company, and hence all revenues.

QED