Rahul Parwal is a software engineer, speaker, mentor, and writer out of Jaipur, Rajasthan. He has dabbled in software development, testing, and automation, and often shares his learnings on his blog.

In this QnA, Rahul makes the point that a randomly failing test is always worth investigating and that assumptions are dangerous in testing.

Q1: What are your most and least favorite things about test automation?

One of the things I like about test automation is that it can be scaled over multiple platforms, devices, interfaces, environments, etc. quickly and reliably. Test automation, if planned and executed correctly, can be a great service to testing and QA.

There is, however, the Silver Bullet Syndrome, where everyone wants to pin the success of test automation on a tool: “Let’s find the one tool to solve all of our problems.” It doesn’t work.

The concept of automation extends well beyond a tool. Teams attempting test automation should consider a tool’s capabilities and limitations. But first, they should establish realistic expectations, sort out people constraints, and have the support of management. Without all three, adopting test automation will just be an uphill battle.

Q2: It sounds great on paper, but what would it really take for a mid-sized org to incorporate continuous testing in their release cycle?

The best way to start is by evaluating and understanding your context, team, and environment.

“No matter how they phrase it, it’s always a people problem,” as Sherbie’s Law says. CI/CD, and consequently continuous testing, needs a shift in the team’s mindset. If you push a whole bunch of processes and tools on people without getting them on board, you are setting them all up for failure.

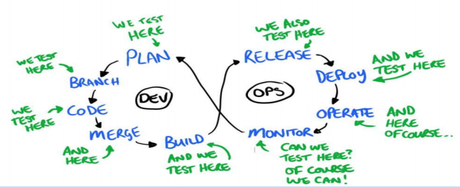

In practice, my advice is to start with small experiments and do one thing at a time. Don’t expect overnight results. Beyond that:

- Make it a team sport. Establish channels of collaboration. Hold knowledge-sharing sessions. Try pair and mob testing to reduce knowledge silos within the team.

- Plan before you execute. At each stage of the product life cycle, plan what and how you’ll test.

Q3: What are some Severity 1 bugs that ALMOST slipped past you — and how did you make sure they never did so again?

I have had two such incidents. I’ll share both, along with the lessons I learned from them:

- Software loading failed whenever a specific application was running in parallel. Lesson: Test your software under real user conditions. Remember to test how your app works with shared resources such as memory, space, and network resources.

- The application timed out if a form submission took longer than 15 minutes. Lesson: Actual users are not as fast as an automation bot or script. They will never complete a form submission as quickly as a script does, so use waits judiciously.
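One way to exercise a slow, human-paced submission without making the suite wait 15 real minutes is to inject a controllable clock. The Python sketch below is only an illustration under assumptions: `FakeClock`, `FormSession`, and `submit_after` are hypothetical names, and only the 15-minute limit comes from the incident described above.

```python
from datetime import datetime, timedelta


class FakeClock:
    """Controllable clock so a test can 'wait' for minutes instantly."""

    def __init__(self, start):
        self.now = start

    def advance(self, **kwargs):
        self.now += timedelta(**kwargs)


class FormSession:
    """Hypothetical form session that expires after a fixed period."""

    def __init__(self, clock, timeout=timedelta(minutes=15)):
        self.clock = clock
        self.timeout = timeout
        self.opened_at = clock.now

    def submit(self, payload):
        # Reject the submission once the session has been open too long.
        if self.clock.now - self.opened_at > self.timeout:
            return "timeout"
        return "accepted"


def submit_after(minutes):
    """Open a form, let `minutes` pass on the fake clock, then submit."""
    clock = FakeClock(datetime(2024, 1, 1, 9, 0))
    session = FormSession(clock)
    clock.advance(minutes=minutes)
    return session.submit({"name": "example"})


print(submit_after(0))   # script-speed submission
print(submit_after(16))  # slow, human-speed submission
```

A test that only ever submits at script speed would see `accepted` every time. The second call is the case a real user can hit.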

Q4: How do you manage failed tests and tests that fail randomly?

A failing test establishes my confidence in a test I’ve designed. The amazing Angie Jones once tweeted something which sums up my feelings on failed tests.

Randomly failing tests are interesting. They give me a reason to go sleuthing, to find and uncover variables that might be affecting my product and its functionality. My bug analysis and exploration skills have greatly improved as a result of investigating such bugs. Whenever I see a randomly failing test, I triangulate failure conditions by varying:

- Data

- Behavior

- Options & Settings

- Software & Hardware Environment

- Configuration & Dependencies, etc.
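The triangulation described above can be sketched in a few lines of Python. This is a minimal illustration, not Rahul's actual tooling: `flaky_checkout` is a hypothetical stand-in for a randomly failing test, and the factor names (`locale`, `cache`, `workers`) and the failure mode are invented for the example.

```python
import itertools
import random
from collections import defaultdict


def flaky_checkout(locale, cache, workers, rng):
    """Hypothetical flaky test; returns True when it passes."""
    # Invented failure mode: fails some of the time, but only with a
    # warm cache and more than one worker.
    if cache == "warm" and workers > 1:
        return rng.random() > 0.4
    return True


def triangulate(test, factors, runs=50, seed=0):
    """Run `test` over every combination of factor values and
    return the failure rate observed for each (factor, value) pair."""
    rng = random.Random(seed)
    totals = defaultdict(int)
    failures = defaultdict(int)
    names = list(factors)
    for combo in itertools.product(*(factors[n] for n in names)):
        kwargs = dict(zip(names, combo))
        for _ in range(runs):
            passed = test(rng=rng, **kwargs)
            for item in kwargs.items():
                totals[item] += 1
                if not passed:
                    failures[item] += 1
    return {item: failures[item] / totals[item] for item in totals}


factors = {"locale": ["en", "de"], "cache": ["cold", "warm"], "workers": [1, 4]}
rates = triangulate(flaky_checkout, factors)
for (name, value), rate in sorted(rates.items(), key=lambda kv: -kv[1]):
    print(f"{name}={value!r}: {rate:.0%} failures")
```

Values whose failure rate is zero (here, a cold cache or a single worker) point to the conditions the failure depends on, which narrows down where to investigate next.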

A randomly failing test is usually a symptom of a much larger problem. Sometimes, developers refer to such cases as corner cases, but I’ve learned to never ignore a random failure without investigating it. It might just be the tip of the iceberg of a whole load of flaky tests.

Q5: What’s your most memorable deployment and/or release day? (Good OR bad, just as long as no one dies at the end…)

In my first project as a software tester, I was testing for a product release of a large enterprise financial application. The stakes were very high since this product was developed to sunset a legacy system that had been in place for decades.

During testing, we went through all the flows on a mocked, simulated data set that supplied data to suit our testing layer. After months of rigorous testing, on release day, we started getting errors from the monitoring system.

There were gaps between the mocked data and the actual production data. We hadn’t questioned the authenticity of the test data during the testing cycle. We ended up with a release rollback.

Some lessons that I learned that day:

- Never assume anything – What if the data is wrong?

- Learn how to create your own testing data. This way, you understand the sources, flows, and formats of the data.

- If you use mock/simulated data for testing, be sure to run a significant number of tests using real data as well.
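A small check like the following Python sketch can surface gaps between mocked and real data before release day. It is only an illustration under assumptions: the records and field names are invented, and a real comparison would run against a sanitized sample of production data.

```python
def field_types(records):
    """Map each field name to the set of type names seen across records."""
    seen = {}
    for record in records:
        for key, value in record.items():
            seen.setdefault(key, set()).add(type(value).__name__)
    return seen


def data_gaps(mock_records, real_records):
    """Report fields and types that differ between mock and real data."""
    mock, real = field_types(mock_records), field_types(real_records)
    return {
        "missing_in_mock": sorted(real.keys() - mock.keys()),
        "missing_in_real": sorted(mock.keys() - real.keys()),
        "type_mismatch": sorted(
            key for key in mock.keys() & real.keys() if mock[key] != real[key]
        ),
    }


# Hypothetical sample records, invented for this example.
mock_records = [{"account_id": 1, "balance": 100.0, "currency": "USD"}]
real_records = [
    {"account_id": "A-001", "balance": 100.0, "currency": "USD", "branch": "JAI"},
    {"account_id": "A-002", "balance": None, "currency": "INR", "branch": "DEL"},
]

gaps = data_gaps(mock_records, real_records)
for kind, fields in gaps.items():
    print(kind, fields)
```

Here the mock data lacks a `branch` field, uses integer account IDs where the real data uses strings, and never contains an empty balance. Each of these is a gap that tests run only on mock data would not catch.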

Bonus: If you could give just ONE piece of advice to fledgling software testers, what would it be?

Like Paul Muad’Dib of Dune, “Learn how to learn.” Testing is a continuous search for information, both about your product and about the tests that you run it through.

None of us are born with knowledge. Trust that you can learn new ways to test. Be a continuous learner.