Solving differential equations numerically is a very mature field with multiple algorithms carefully explained in multiple textbooks. However, when it comes down to actually solving them in practice by nonspecialists, a lot of people, myself included, will often resort to the Euler method. There are two main reasons for this. The first is that it is trivial to remember and code and the second is that Moore’s law has increased the speed of computing sufficiently that we can get away with it. However, I think it’s time to get out of the eighteenth century and at least move into the nineteenth.

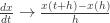

Suppose you have a differential equation

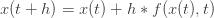

so that

Now what could be simpler than that? There are two problems with this algorithm. The first is that it is only accurate to order

The second most popular algorithm is probably (4th order) Runge-Kutta, which can generally use larger time steps for the same accuracy. However, a higher order algorithm is not necessarily faster because what really slows down a numerical scheme is the number of function evaluations it needs to make and 4th order Runge-Kutta needs to make a lot of function evaluations. Runge-Kutta is also plagued by the same stability problems as Euler and that can also limit the maximum allowed step size.

Now, these problems have been known for decades if not centuries and work-arounds do exist. In fact, the most sophisticated solvers such as CVODE tend to use adaptive multi-step methods, like Adams-Bashforth (AB) or it’s implicit cousin Adams-Moulton and this is what we should all be implementing in our own codes. Below I will give simple pseudo-code to show how a 2nd order Adams-Bashforth scheme is not much more complicated to code than Euler and uses the same number of function evaluations. In short, with not much effort, you can speed your code immensely by simply switching to AB.

The cleverness of AB is that it uses previous steps to refine the estimate for the next step. In this way, you get a higher order scheme without having to re-evaluate the right hand side of your ODE. You simply reuse your computations. It’s the ultimate green scheme. For the same ODE example above 2nd order AB is simply

You just need to store the previous two evaluations to compute the next step. To start the algorithm, you can just use an Euler algorithm to get the first step.

Here is the 2nd order AB algorithm written in pseudo Julia code for a one dimensional version of the ODE above.

# make x a two dimensional vector for the two time steps

# Set initial condition

x[1]=0;

# Store evaluation of function f(x,t) for later use

fstore[1]= f(x[1],h)

# Take Euler step

x[2] = x[1] + h*fstore[1]

# store function at time = 2h

fstore[2] = f(x[2],2*h)

# Store computed values for output

xout[1]=x[1]

xout[2]=x[2]

# Take AB steps

for t = 3:Tfinal

# set update indices for x based on parity of t

index2 = t%2+1

index1 = (t-1)%2+1

# 2nd order AB step

x[index1] = x[index2]+h*(1.5*fstore[2]-0.5*fstore[1]))

# update stored function

fstore[index1] = f(x[index1],t*h)

xout[t]=x[index1]

end

return xout