Chris Kim and I recently published a paper in eLife:

Learning recurrent dynamics in spiking networks.

Spiking activity of neurons engaged in learning and performing a task show complex spatiotemporal dynamics. While the output of recurrent network models can learn to perform various tasks, the possible range of recurrent dynamics that emerge after learning remains unknown. Here we show that modifying the recurrent connectivity with a recursive least squares algorithm provides sufficient flexibility for synaptic and spiking rate dynamics of spiking networks to produce a wide range of spatiotemporal activity. We apply the training method to learn arbitrary firing patterns, stabilize irregular spiking activity in a network of excitatory and inhibitory neurons respecting Dale's law, and reproduce the heterogeneous spiking rate patterns of cortical neurons engaged in motor planning and movement. We identify sufficient conditions for successful learning, characterize two types of learning errors, and assess the network capacity. Our findings show that synaptically-coupled recurrent spiking networks possess a vast computational capability that can support the diverse activity patterns in the brain.

The ideas that eventually led to this paper were seeded by two events. The first was about five years ago when I heard Dean Buonomano talk about his work with Rodrigo Laje on how to tame chaos in a network of rate neurons. Dean and Rodrigo expanded on the work by Larry Abbott and David Sussillo. The guiding idea from these two influential works stems from the "the echo state machine" or "reservoir computing". Basically, this idea exploits the inherent chaotic dynamics of a recurrent neural network to project inputs onto diverse trajectories from which a simple learning rule can be deployed to extract a desired output.

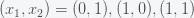

To explain the details of this idea and our work, I need to go back to Minsky and Papert and their iconic 1969 book on feedforward neural networks (called perceptrons), who divided learning problems into two types. The first type is linearly separable, which means that if you want to learn a classifier on some inputs, then a single linear plane can be drawn to separate the two input classes on the space of all inputs. The classic example is the OR function. When given inputs

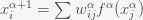

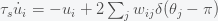

Mathematically, we can write a perceptron as

The idea of reservoir computing is to make a linearly inseparable problem separable by processing the inputs. The antecedent is the support vector machine or kernel method, which projects the data to a higher dimension such that an inseparable problem is separable. In the XOR example, if we can add a dimension and map (0,0) and (1,1) to (0,0,0) and (1,1,0) and map (1,0) and (0,1) to (1,0,1) and (0,1,1) then the problem is separable. The hard part is finding the mapping or kernels to do this. Reservoir computing uses the orbit of a chaotic system as a kernel. Chaos, by definition, causes initial conditions to diverge exponentially and by following a trajectory for as long as you want you can make as high dimensional a space as you want; in high enough dimensions all points are linearly separable if they are far enough apart. However, the defining feature of chaos is also a bug because any slight error in your input will also diverge exponentially and thus the kernel is inherently unstable. The Sussillo and Abbott breakthrough was that they showed you could have your cake and eat it too. They stabilized the chaos using feedback and/or learning while still preserving the separating property. This then allowed training of the output layer to work extremely efficiently. Laje and Bunomano took this one step further by showing that you could directly train the recurrent network to stabilize chaos. My thought at that time was why are chaotic patterns so special? Why can't you learn any pattern?

The second pivotal event came in a conversation with the ever so insightful Kechen Zhang when I gave a talk at Hopkins. In that conversation, we discussed how perhaps it was possible that any internal neuron mechanism, such as nonlinear dendrites, could be reproduced by adding more neurons to the network and thus from an operational point of view it didn't matter if you had the biology correct. There would always exist a recurrent network that could do your job. The problem was to find the properties that make a network "universal" in that it could reproduce the dynamics of any other network or any dynamical system. After this conversation, I was certain that this was true and began spouting this idea to anyone who would listen.

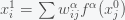

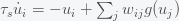

One of the people I mentioned this to was Chris Kim when he contacted me for a job in my lab in 2015. Later Chris told me that he thought my idea was crazy or impossible to prove but he took the job anyway because he wanted to be in Maryland where his family lived. So, upon his arrival in the fall of 2016, I tasked him with training a recurrent neural network to follow arbitrary patterns. I also told him that we should do it on a network of spiking neurons. I thought that doing this on a set of rate neurons would be too easy or already done so we should move to spiking neurons. Michael Buice and I had just recently published our paper on computing finite size corrections to a spiking network of coupled theta neurons with linear synapses. Since we had good control of the dynamics of this network, I thought it would be the ideal system. The network has the form

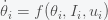

Whenever neuron

In our paper, we show that if the synapses are fast enough, i.e.

where

Addendum: The dates of events may not all be correct. I think my conversation with Kechen came before Dean's paper but my recollection is foggy. Memories are not reliable.