From Science of Doom, which is like Skeptical Science but hard core:

---------------------

Upwards Longwave Radiation

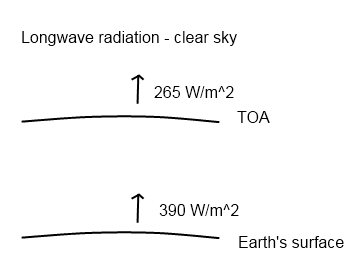

So let’s try and look at it again and see if it starts to make sense. Here is the earth’s longwave energy budget – considering first the energy radiated up:

Of course, the earth’s radiation from the surface depends on the actual temperature. This is the average upwards flux. And it also depends slightly on the factor called “emissivity” but that doesn’t have a big effect.

The value at the top of atmosphere (TOA) is what we measure by satellite – again that is the average for a clear sky. Cloudy skies produce a different (lower) number.

These values alone should be enough to tell us that something significant is happening to the longwave radiation. Where is it going? It is being absorbed and re-radiated. Some upwards – so it continues on its journey to the top of the atmosphere and out into space – and some back downwards to the earth’s surface. This downwards component adds to the shortwave radiation from the sun and helps to increase the surface temperature.

As a result the longwave radiation upwards from the earth’s surface is higher than the upwards value at the top of the atmosphere.

---------------------

To make this easy for beginners, I have put their sleight of hand in bold.

1. We are agreed that overall average emissions from Earth out to space ≈ 239 W/m2.

2. I am prepared to accept their readings of 390 W/m2 and 265 W/m2 for clear skies, which are assumed to be one-third of the surface.

3. Therefore, emissions to space from clouds, two-thirds of the surface, must ≈ 226 W/m2 to get the overall 239 W/m2 average (3 x 239 = 265 + 226 + 226).

4. Clouds are high up and cold. Let's say 6 km up, where it's 249 K. If clouds were perfect blackbodies (100% emissivity) they would be emitting about 218 W/m2 (249^4/10^8 x 5.67), so more gets to space than they actually emit. Nobody's sure what their overall average emissivity is, so let's call it 75% (the middle of the various estimated ranges). So actually, they are emitting about 163 W/m2, and a lot more gets to space than they actually emit (OK, this does not make sense any more, but that's what happens when you follow their logic).

5. So sure, there is 125 W/m2 'missing' with clear skies (390 - 265). But for cloudy areas, there is 63 W/m2 more going to space than clouds actually emit in the first place (226 - 163), and there is twice as much cloud as clear sky. How do they explain the 'extra' radiation? They don't of course, because that would give the game away*.

6. Now go back and read the bits in bold!
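For anyone who wants to check the arithmetic in steps 2 to 5, here is a minimal Python sketch. It only reproduces the sums, using the figures quoted above; the 249 K cloud temperature, the 75% emissivity and the one-third clear-sky fraction are the assumptions already stated in the steps, and the function and variable names are made up for illustration.

```python
# Stefan-Boltzmann constant in W m^-2 K^-4
SIGMA = 5.67e-8


def blackbody_flux(temp_k, emissivity=1.0):
    """Flux in W/m2 emitted by a body at temp_k kelvin (Stefan-Boltzmann law)."""
    return emissivity * SIGMA * temp_k ** 4


# Figures quoted in the steps above (all in W/m2 unless noted)
overall_toa = 239.0        # step 1: overall average emission to space
clear_surface = 390.0      # step 2: clear-sky upward flux at the surface
clear_toa = 265.0          # step 2: clear-sky flux at top of atmosphere
clear_fraction = 1.0 / 3   # step 2: assumed share of clear sky
cloud_temp_k = 249.0       # step 4: assumed cloud temperature at 6 km
cloud_emissivity = 0.75    # step 4: assumed cloud emissivity

# Step 3: cloudy-sky flux to space needed to give the overall average
cloudy_toa = (overall_toa - clear_fraction * clear_toa) / (1 - clear_fraction)

# Step 4: what the clouds would emit as blackbodies and at 75% emissivity
cloud_blackbody = blackbody_flux(cloud_temp_k)
cloud_emitted = blackbody_flux(cloud_temp_k, cloud_emissivity)

# Step 5: the clear-sky 'missing' flux and the cloudy-sky 'extra' flux
clear_gap = clear_surface - clear_toa
cloudy_excess = cloudy_toa - cloud_emitted

if __name__ == "__main__":
    print(f"cloudy-sky flux to space:  {cloudy_toa:.0f} W/m2")
    print(f"cloud blackbody emission:  {cloud_blackbody:.0f} W/m2")
    print(f"cloud emission at 75%:     {cloud_emitted:.0f} W/m2")
    print(f"clear-sky 'missing' flux:  {clear_gap:.0f} W/m2")
    print(f"cloudy-sky 'extra' flux:   {cloudy_excess:.0f} W/m2")
```

Changing `cloud_temp_k` or `cloud_emissivity` shows how sensitive the 'extra' figure in step 5 is to those two guesses.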

* The actual reason for the apparent discrepancy is as follows: the atmosphere is warmer at sea level than at the tropopause because of the gravito-thermal effect. All warm atoms and molecules emit radiation, be they land, ocean, water vapour, water droplets, CO2, whatever. So there is more being emitted at sea level than from higher up. If you measure from space, you are (indirectly) just measuring the temperature higher up and not the temperature at ground level/cloud level. Or maybe you are measuring some mix of the temperature at low and high altitudes - if you light a campfire in the Arctic at night and measure it from a distance with an IR thermometer, you get a lower reading than if you light a campfire in the Sahara desert by day and measure it from the same distance. Simples!