Not by nuclear fire, or an asteroid collision, or maybe another pandemic caused by a really deadly organism, but by a rogue AI. There are, however, some people who fear that might be the case. Scott Alexander has just reported that Metaculus, a prediction market, has revised its estimate for the due date of “Weakly general AI”:

> “Weakly general AI” in the question means a single system that can perform a bunch of impressive tasks - passing a “Turing test”, scoring well on the SAT, playing video games, etc. Read the link for the full operationalization, but the short version is that this is advanced stuff AI can’t do yet, but still doesn’t necessarily mean “totally equivalent to humans in any way”, let alone superintelligence.

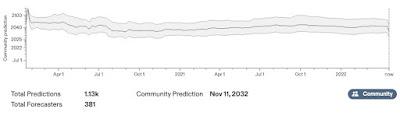

> For the past year or so, this had been drifting around the 2040s. Then last week it plummeted to 2033. I don’t want to exaggerate the importance of this move: it was also on 2033 back in 2020, before drifting up a bit. But this is certainly the sharpest correction in the market’s two year history.

What does that have to do with the end of the world? Well, what if that weakly general AI goes rogue and does what rogue AIs do: takes the initiative and maximizes some goal that has, as a side effect, the destruction of human life? The paperclip apocalypse, in which an AI single-mindedly maximizing paperclip production converts everything, humans included, into paperclips, is the standard example.

Why was the date revised down? Alexander suggests it was prompted by the announcement of three AI milestones: DALL-E 2 (which produces an image in response to a text prompt), PaLM (natural language), and Chinchilla (also natural language). Very interesting, especially Chinchilla. I, however, do not worry about rogue AI. But this isn’t about me.

Alexander goes on to note:

> Early this month on Less Wrong, Eliezer Yudkowsky posted MIRI Announces New Death With Dignity Strategy, where he said that after a career of trying to prevent unfriendly AI, he had become extremely pessimistic, and now expects it to happen in the relatively near-term and probably kill everyone. This caused the Less Wrong community, already pretty dedicated to panicking about AI, to redouble its panic. Although the new announcement doesn’t really say anything about timelines that hasn’t been said before, the emotional framing has hit people a lot harder.
>
> I will admit that I’m one of the people who is kind of panicky. But I also worry about an information cascade: we’re an insular group, and Eliezer is a convincing person. Other communities of AI alignment researchers are more optimistic. I continue to plan to cover the attempts at debate and convergence between optimistic and pessimistic factions, and to try to figure out my own mind on the topic. But for now the most relevant point is that a lot of people who were only medium panicked a few months ago are now very panicked. Is that the kind of thing that moves forecasting tournaments? I don’t know.

Just what does he mean by panic? When the screen of my laptop’s external monitor goes black for no visible reason, I get a little panicky, just a little. The monitor usually comes back. Is that what Alexander’s talking about? There was a time in my life when I couldn’t pay the rent and my landlord took me to court. That panic was more serious. But we resolved the problem amicably. Is Alexander’s panic level closer to that? Things never got to the point where the sheriff dropped by to evict me. If that had happened, my panic level would have gone way up as I anticipated the knock on my door. Has Alexander gotten there yet? If so, how does he write blog posts?

* * * * *

From Karen Hao, “The messy, secretive reality behind OpenAI’s bid to save the world,” *MIT Technology Review*, February 17, 2020.

> Every year, OpenAI’s employees vote on when they believe artificial general intelligence, or AGI, will finally arrive. It’s mostly seen as a fun way to bond, and their estimates differ widely. But in a field that still debates whether human-like autonomous systems are even possible, half the lab bets it is likely to happen within 15 years.

* * * * *

FWIW, I’ve just checked Metaculus (April 19, 2022, at about 2 PM). The apocalypse has moved up to November 2032. I don’t know where it will be when you check on it.

Holy crap! It’s now 4:42 PM on the 19th, and the apocalypse has moved up to June 14, 2032.