Learn how to create a custom robots.txt for your Blogger-hosted site.

To view or verify your live custom robots.txt file, type www.yoursite.blogspot.com/robots.txt into your browser's address bar (you need an active internet connection).
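If you would rather check the live file from a script than from the browser, here is a minimal Python sketch. The function names are hypothetical, and the `http://` scheme without the `www` prefix is an assumption that follows the sitemap URLs used later in this post.

```python
from urllib.request import urlopen


def robots_url(site):
    """Build the robots.txt address for a blogspot site name (e.g. 'yoursite')."""
    return f"http://{site}.blogspot.com/robots.txt"


def fetch_robots(site, timeout=10):
    """Download the live robots.txt and return it as text (needs internet access)."""
    with urlopen(robots_url(site), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


# Example usage (makes a real network request):
#   print(fetch_robots("yoursite"))
```

Replace `yoursite` with your own site name before running it.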

Creating a custom robots.txt in Blogger helps crawlers index more of your files, so that all of your pages can be indexed by search engines. With the default settings, only your latest 26 pages get indexed, so most of your older pages stay hidden from search engines. A customized robots.txt file lets the crawler index more pages, which makes your site more visible in search results.

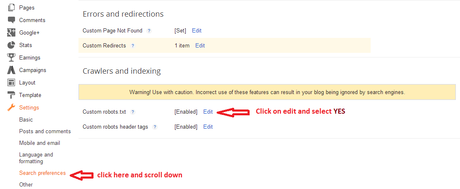

To do this, go to Settings > click Search preferences > scroll down and click the EDIT link > click Yes, then paste in the following lines.

NEW ROBOTS.TXT should look like this:

# Blogger Sitemap generated on 2014.07.28

# The following user agent is used by Google AdSense to display advertisements (it will appear if you are using AdSense). Do not remove this user-agent, or your ad unit will show an error message.

User-agent: Mediapartners-Google

Disallow:

# The following user agent is used by Microsoft Research to display advertisements, in case you are using Microsoft advertising. Do not remove this user-agent, or your ad unit will show an error message.

User-agent: MSR-ISRCCrawler

Disallow:

# The following user agent is used by the Amazon Affiliate Program to display advertisements, in case you are using Amazon affiliate ads. Do not remove this user-agent, or your ad unit will show an error message.

User-agent: AMZNKAssocBot

Disallow:

UPDATE: Add Disallow: /p/about.html under the Mediapartners-Google user agent to stop AdSense, which crawls your site about once a week, from crawling your about-us page. Apply the same method to any other page you don't want AdSense to crawl.

You can also use Allow: /Publishing Year/Month/post-url.html to allow a specific page to be crawled by AdSense.

# The following user agent (*) allows any bot (any user agent) to access any part of your site except yoursite/search.

User-agent: *

Disallow: /search

Allow: /

(UPDATE [SEO Tips])

User-agent: Googlebot

# Disallow all files ending with these extensions to prevent Googlebot from crawling them

Disallow: /*.php$

Disallow: /*.js$

Disallow: /*.inc$

Disallow: /*.css$

Disallow: /*.gz$

Disallow: /*.wmv$

Disallow: /*.cgi$

Disallow: /*.xhtml$

# Sitemap: http://yoursite.blogspot.com/feeds/posts/default?orderby=UPDATED

Sitemap: http://yoursite.blogspot.com/atom.xml?redirect=false&start-index=1&max-results=500

(UPDATE [SEO Tips]: it is advised to keep each sitemap within 100 links per page, i.e. you can set max-results to 99. This is recommended after Google's latest update.)

Sitemap: http://yoursite.blogspot.com/atom.xml?redirect=false&start-index=501&max-results=500
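To see which paths the extension rules in the Googlebot group would catch, here is a rough Python sketch. It assumes the wildcard semantics Google documents for Googlebot: `*` matches any run of characters and a trailing `$` anchors the end of the URL. The helper names are hypothetical, and this is an illustration, not Googlebot's actual matcher.

```python
import re

# The extension patterns from the Googlebot group in the listing above.
PATTERNS = ["/*.php$", "/*.js$", "/*.inc$", "/*.css$",
            "/*.gz$", "/*.wmv$", "/*.cgi$", "/*.xhtml$"]


def pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regular expression."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape the literal pieces and let each '*' match any run of characters.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))


def is_blocked(path, patterns=PATTERNS):
    """Return True if any Disallow pattern matches the given URL path."""
    return any(pattern_to_regex(p).match(path) for p in patterns)


print(is_blocked("/static/app.js"))          # a script file
print(is_blocked("/2014/07/post-url.html"))  # a normal post
```

Because of the `$` anchor, a URL such as `/static/app.js?v=2` does not end in `.js` and so is not matched by these rules.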

Note: Replace yoursite with your own site's address. If your site has more than 500 pages, add a new line with the same text but replace start-index=501 with start-index=1001. Keep adding a new line for every 500 pages, setting start-index to 1501, 2001, 2501, 3001 and so on, until all of your pages are covered. (UPDATE: make sure that your sitemap has fewer than 100 links per page.)

Note: If you wish to disallow a particular post, use the following:

Disallow: /Publishing Year/Month/post-url.html

Or you can simply copy and paste the post's URL without the site name. You can also block a particular page with "/p/page-url.html".

OLD ROBOTS.TXT

User-agent: Mediapartners-Google

Disallow:

User-agent: *

Disallow: /search

Allow: /

Sitemap: http://yoursite.blogspot.com/feeds/posts/default?orderby=UPDATED
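The start-index pattern from the note on Sitemap lines (1, 501, 1001, ...) is easy to get wrong by hand, so here is a small Python sketch that prints the lines for you. The function name and its parameters are hypothetical; the URL format is copied from the Sitemap lines in this post.

```python
def sitemap_lines(site, total_posts, page_size=500):
    """Return one Sitemap line per block of `page_size` posts."""
    lines = []
    # start-index is 1-based: 1, 501, 1001, ... for a page size of 500.
    for start in range(1, total_posts + 1, page_size):
        lines.append(
            f"Sitemap: http://{site}.blogspot.com/atom.xml"
            f"?redirect=false&start-index={start}&max-results={page_size}"
        )
    return lines


# Example: a blog with 1200 posts needs three Sitemap lines.
for line in sitemap_lines("yoursite", 1200):
    print(line)
```

If you follow the updated advice and keep each sitemap under 100 links, call it with `page_size=99` instead.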

See the image below on "How to write a custom robots.txt file for your Blogger site".

You can use Google Webmaster Tools to verify whether your custom robots.txt contains an error or works the way you set it up. To do this, log in to www.google.com/webmastertools >> select your site (register it first if you have not set it up yet) >> click Manage site >> expand Crawl >> click robots.txt Tester, or use the following link: https://www.google.com/webmasters/tools/robots-testing-tool?hl=en&siteUrl=[Your URL]

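For a quick local check before you paste anything into Blogger, Python's standard `urllib.robotparser` module can evaluate simple rules. This is only a rough sketch: the rules string repeats the basic groups from this post, `SomeBot` is a made-up crawler name, and the Googlebot extension rules are left out because, as far as I know, this module treats paths as plain prefixes and does not interpret the `*` and `$` wildcards.

```python
from urllib.robotparser import RobotFileParser

# The basic groups from the robots.txt in this post (wildcard rules omitted).
RULES = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /
"""

BASE = "http://yoursite.blogspot.com"


def build_parser(text):
    """Parse robots.txt text from a string instead of fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


parser = build_parser(RULES)

# An ordinary crawler may read posts but not the /search pages.
print(parser.can_fetch("SomeBot", BASE + "/2014/07/post-url.html"))
print(parser.can_fetch("SomeBot", BASE + "/search/label/seo"))

# The AdSense crawler has an empty Disallow, so nothing is blocked for it.
print(parser.can_fetch("Mediapartners-Google", BASE + "/search/label/seo"))
```

The robots.txt Tester remains the better check for how Google itself reads the file; this only catches obvious mistakes in the simple rules.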