One of my main complaints about the current regime in machine learning is that researchers don’t seem to have given much thought to the nature of language and cognition independent of the more or less immediate requirements of crafting their models. There is a large, rich, and diverse literature on language, semantics, and cognition going back more than half a century. It’s often conflicting and thus far from consensus, but it’s not empty. The ML research community seems uninterested in it. I’ve likened this to a whaling voyage captained by a man who knows all about ships and little about whales.

As a symptom of this, I offer this video clip from a 2023 conversation between Ilya Sutskever and Dwarkesh Patel in which Sutskever argues that next-token prediction will be able to surpass human performance:

Here's a transcription:

Ilya Sutskever

I challenge the claim that next-token prediction cannot surpass human performance. On the surface, it looks like it cannot. It looks like if you just learn to imitate, to predict what people do, it means that you can only copy people. But here is a counter argument for why it might not be quite so. If your base neural net is smart enough, you just ask it — What would a person with great insight, wisdom, and capability do? Maybe such a person doesn't exist, but there's a pretty good chance that the neural net will be able to extrapolate how such a person would behave. Do you see what I mean?

Dwarkesh Patel

Yes, although where would it get that sort of insight about what that person would do? If not from…

Ilya Sutskever

From the data of regular people. Because if you think about it, what does it mean to predict the next token well enough? It's actually a much deeper question than it seems. Predicting the next token well means that you understand the underlying reality that led to the creation of that token. It's not statistics. Like it is statistics but what is statistics? In order to understand those statistics to compress them, you need to understand what is it about the world that creates this set of statistics? And so then you say — Well, I have all those people. What is it about people that creates their behaviors? Well they have thoughts and their feelings, and they have ideas, and they do things in certain ways. All of those could be deduced from next-token prediction. And I'd argue that this should make it possible, not indefinitely but to a pretty decent degree to say — Well, can you guess what you'd do if you took a person with this characteristic and that characteristic? Like such a person doesn't exist but because you're so good at predicting the next token, you should still be able to guess what that person who would do. This hypothetical, imaginary person with far greater mental ability than the rest of us.

The argument is not clear. One thing Sutskever seems to be doing is aggregating the texts of ordinary people into the text of an imaginary “super” person that is the sum and synthesis of what all those ordinary people have said. But those ordinary individuals do not necessarily speak from the same point of view. There will be tensions and contradictions between them. The views of flat-earthers cannot be reconciled with those of standard astronomy. But this is not my main objection. We can set it aside.

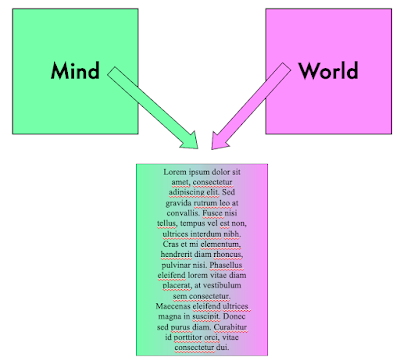

My problem is with Sutskever’s second paragraph, where he says, “Predicting the next token well means that you understand the underlying reality that led to the creation of that token.” From there he works his way through statistics to the thoughts and feelings of the people producing the tokens. But Sutskever doesn’t distinguish between those thoughts and feelings and the world toward which they are directed. Those people are aware of the world, of the “underlying reality,” but that reality is not itself directly present in the language tokens they use to express their thoughts and feelings. The token string is the product of the interaction between language and cognition, on the one hand, and the world, on the other:

Sutskever seems to be conflating the cognitive and semantic structures inhering in the minds of the people who produce texts with the structure of the world itself. They are not at all the same thing. A statistical model produced through next-token prediction may well approximate the cognitive and semantic models of humans, but that’s all it can do. It has no access to the world in the way that humans do. That underlying reality is not available to it.

What does Claude have to say about this?

I gave Claude 3.5 Sonnet Sutskever’s second paragraph and had a conversation about it. I wanted to see if it could spot the problem. Claude saw problems, but in the end I had to tell it that Sutskever had failed to distinguish between the structure of the world and the structure of the text.

* * * * *

Does the following passage make sense?

Because if you think about it, what does it mean to predict the next token well enough? It's actually a much deeper question than it seems. Predicting the next token well means that you understand the underlying reality that led to the creation of that token. It's not statistics. Like it is statistics but what is statistics? In order to understand those statistics to compress them, you need to understand what is it about the world that creates this set of statistics? And so then you say — Well, I have all those people. What is it about people that creates their behaviors? Well they have thoughts and their feelings, and they have ideas, and they do things in certain ways. All of those could be deduced from next-token prediction. And I'd argue that this should make it possible, not indefinitely but to a pretty decent degree to say — Well, can you guess what you'd do if you took a person with this characteristic and that characteristic? Like such a person doesn't exist but because you're so good at predicting the next token, you should still be able to guess what that person who would do. This hypothetical, imaginary person with far greater mental ability than the rest of us.

Let me analyze this passage and explain why it's somewhat problematic in its logic and clarity:

1. Coherence Issues:

- The passage jumps between different concepts without clear logical connection

- The flow from token prediction to understanding human behavior to hypothetical people is not well established

- Many sentences start with "And" or "Like" in a way that makes the argument hard to follow

2. Logical Flaws:

- It makes an unsupported leap from statistical prediction to understanding "underlying reality"

- The claim that understanding statistics requires understanding what creates those statistics is not necessarily true

- The conclusion about predicting hypothetical people's behavior doesn't follow clearly from the premises

3. Unclear References:

- "This hypothetical, imaginary person" at the end seems disconnected from the rest of the text

- The final sentence is incomplete or unclear in its meaning

Here's a suggested revision to make the core argument clearer:

"Next-token prediction in AI systems raises profound questions about understanding. To predict tokens accurately, a system must capture patterns in the data that reflect real-world relationships and human behavior. While this prediction is fundamentally statistical, it requires modeling complex patterns that emerge from human thoughts, feelings, and actions. This deep statistical learning might enable systems to make reasonable predictions about hypothetical scenarios or people, even if they've never existed in the training data. However, we should be careful not to conflate statistical prediction with true understanding or consciousness."

Would you like me to explain any part of this analysis in more detail?

I’m concerned about that unsupported leap from statistical prediction to understanding "underlying reality". Does that make any sense? How would that be possible?

You raise an excellent point about a fundamental issue in AI and philosophy of mind. The leap from statistical prediction to "understanding underlying reality" is indeed highly questionable. Let me break down why:

1. The Statistical Pattern vs Understanding Problem

- Just because a system can predict patterns doesn't mean it "understands" them

- A simple example: A thermometer can perfectly "predict" temperature changes without "understanding" heat or molecular motion

- Similarly, a system could learn that "dog" often follows "hot" without understanding what either word means in reality

2. The Chinese Room Analogy

- Philosopher John Searle's famous thought experiment is relevant here

- A person who doesn't know Chinese could follow perfect rules to respond to Chinese messages

- They would appear to "understand" Chinese while actually just manipulating symbols

- Similarly, language models manipulate tokens based on patterns without necessarily "understanding" reality

3. Correlation vs Causation

- Statistical prediction finds correlations in data

- Understanding reality requires grasping causal relationships

- Example: An AI might learn that umbrellas appear in text near rain, but that doesn't mean it understands that humans use umbrellas to stay dry

4. The Symbol Grounding Problem

- How do symbols (like words or tokens) connect to actual meaning?

- Statistical patterns are still just relationships between symbols

- There's no clear mechanism for how pure symbol manipulation leads to understanding of what those symbols represent in reality

The passage makes an unjustified assumption that being good at predicting patterns automatically leads to understanding the reality that generated those patterns. This is a huge philosophical leap that bypasses fundamental questions about the nature of understanding, consciousness, and meaning.

Would you like me to elaborate on any of these points?

I’m interested in your first point.

Let me dive deeper into why being able to predict patterns doesn't necessarily equal understanding:

Think about weather forecasting software from the 1980s. It could make decent predictions by processing air pressure, temperature, and wind patterns. But we wouldn't say it "understood" weather in any meaningful sense. It was just applying mathematical models to data.

Here's an even clearer example: Imagine a simple program that notices this pattern:

2, 4, 6, 8, ?

The program could "predict" that 10 comes next. But there are actually multiple possible understandings of why:

- Could be counting by 2s

- Could be listing even numbers

- Could be factors of 40 in ascending order

- Could be first digits of a specific mathematical sequence

The program might make the right prediction (10) without having any concept of what even numbers are, what counting means, or why someone might want to count by 2s. It just spotted the "+2" pattern.

This gets at a deep question: What's the difference between:

1. Being able to predict what comes next

2. Actually understanding the underlying concept

Language models are like this but vastly more complex. They can predict that "The sky is ___" should be completed with "blue" without necessarily having any concept of sky, color, or the experience of seeing blue. They've just learned that in the pattern of tokens, "blue" very often follows "The sky is".

This is why simply improving prediction accuracy doesn't automatically give us systems that truly understand reality - they might just be getting better at spotting increasingly subtle patterns without bridging the fundamental gap between pattern recognition and genuine understanding.

What are your thoughts on this distinction between prediction and understanding? I'm curious whether you think there might be some threshold of prediction accuracy where it does start to imply real understanding.

The patterns are in the tokens, right? And the tokens were produced by humans thinking about the world, right?

Ah, this is a fascinating perspective that adds nuance to my previous response. Let me think this through:

1. The Chain of Representation:

- Humans observe and think about reality

- Humans encode those thoughts into language (tokens)

- The tokens therefore indirectly capture human understanding of reality

2. So you could argue:

- If you can predict tokens extremely well

- You must have captured something about how humans represent reality in language

- Because those patterns didn't come from nowhere - they came from human minds engaging with the real world

This is actually a more sophisticated argument than I initially gave credit to. It's saying: The statistical patterns aren't arbitrary - they're traces of human understanding encoded in language.

However, key questions remain:

- Is capturing patterns of human representation the same as having understanding yourself?

- Could you predict how humans talk about reality without understanding reality in the way humans do?

- How do we differentiate between mimicking human-like responses and genuinely understanding things?

You've made me reconsider my earlier thermometer analogy. Language patterns, unlike temperature readings, are products of minds trying to represent meaning. There's something richer there.

This is a much stronger argument for how statistical prediction might relate to understanding than I initially acknowledged. Though I think it still leaves open deep questions about the nature of understanding itself.

What do you think - does being able to model how humans represent reality in language amount to understanding reality itself?

But the patterns are only about human understanding and conceptualizing, and are not directly about the world itself. The patterns are NOT about the underlying reality.

AH! This is a crucial insight that helps clarify the logical error in the original passage!

The patterns in language tokens are patterns of human conceptualization and understanding - not patterns of reality itself. When we do next-token prediction, we're modeling:

- How humans think about the world

- How humans talk about the world

- How humans categorize and conceptualize things

- Human cultural frameworks and shared meanings

- Human cognitive patterns and biases

But we're NOT directly modeling:

- The actual physical world

- Underlying reality independent of human perception

- The true nature of things-in-themselves

- Raw reality before human conceptualization

This is why the original passage makes an unjustified leap. Getting good at predicting tokens means getting good at modeling human thought/language patterns. But those patterns are already one step removed from reality - they're human interpretations and representations.

It's like the difference between:

- Studying maps (human representations)

- Studying the actual territory

Being really good at predicting what appears on maps doesn't necessarily mean you understand the actual terrain! You've just gotten good at modeling how humans represent terrain in map form.

This is a really important distinction that the original passage glosses over. Thank you for helping clarify this - it's made me realize that even my previous response was still partially caught in the same confusion.