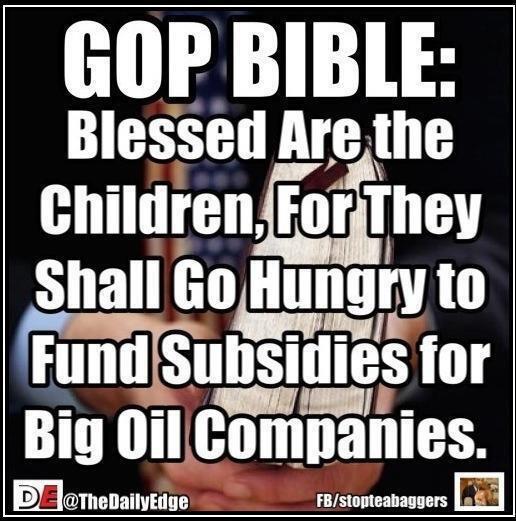

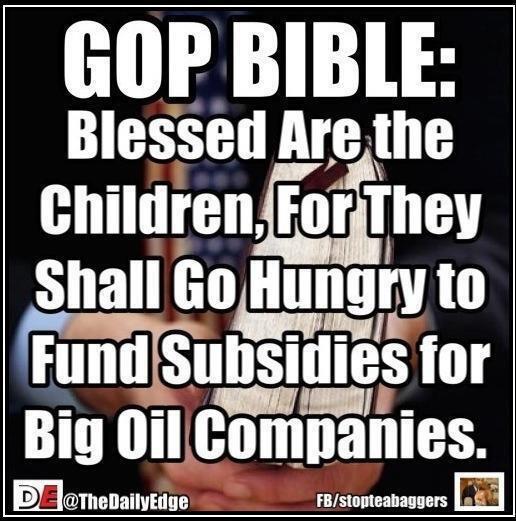

I am an atheist, but I was raised in a fundamentalist Christian church and attended two Christian universities (Harding University and Texas Wesleyan University) -- getting a bachelor's degree from the second. So I am very familiar with the teachings of Jesus and the Christian church -- and those teachings have nothing at all to do with current GOP policies (even though most in the GOP wear their religion on their sleeves). When did being a Christian become nothing more than claiming to be one? Are good works no longer required? Did Jesus send a special message to Republicans that he was just kidding when he said to care for the poor, the elderly, and the children? Christianity used to be a religion. Has it now just become another political movement -- and a rather greedy and uncaring one at that?

Politics Magazine
