One of the things that I’ve often wondered about is whether making the effort to spread your scientific article’s message as far and wide as possible on social media actually brings you more citations.

While there’s more than enough justification to promote your work widely for non-academic purposes, there is some doubt as to whether the effort reaps academic rewards as well.

Back in 2011 (the Pleistocene of social media in science), Gunther Eysenbach examined 286 articles in the obscure Journal of Medical Internet Research, finding that yes, highly cited papers did indeed have more tweets. But he concluded:

> Social media activity either increases citations or reflects the underlying qualities of the article that also predict citations …

Subsequent work has established similar positive relationships between social-media exposure and citation rates (e.g., for 208739 PubMed articles; > 10000 blog posts of articles published in > 20 journals), weak relationships (e.g., using 27856 PLoS One articles; based on 1380143 articles from PubMed in 2013), or none at all (e.g., for 130 papers in International Journal of Public Health).

While the research available suggests that, on average, the more social-media exposure a paper gets, the more likely it is to be cited, the potential confounding problem raised by Eysenbach remains — are interesting papers that command a lot of social-media attention also those that would garner scientific interest anyway? In other words, are popular papers just popular in both realms, meaning that such papers are going to achieve high citation rates anyway?

The obvious question that arises from this query is — from a purely academic perspective — should we even bother promoting our papers on social media?

As someone who has spent probably far too much of my career engaged in social and traditional media to get my messages out, I should be satisfied that the exposure outside of academia is a good thing in its own right. Yes, I’ve had some policy successes from my work, and I would like to think that I’ve changed a few minds in the right direction.

However, given that my academic bread and butter is still citations, how can we determine whether social media actually brings more citations to your papers than they would otherwise get?

Considering that this is a blog post and not a paper, I have only done the least amount of work possible to ‘investigate’ this problem. That means I’ve only looked at data from my own publications because I had them on hand.

My reasoning was this: if social and traditional media bring more citations to a paper than it would otherwise have received, then there should be an additive effect of the journal’s citation potential (in this case, the 2018 Impact Factor; but see here) and the paper’s social-media hype (Altmetrics score) on article citation rate (hypothesis 1).

I also hypothesised that if a paper received a high Altmetrics score, it would inflate the paper’s citation rate beyond what would be expected from its journal’s Impact Factor alone (i.e., an interaction effect; hypothesis 2).

So, taking all of my papers with an Altmetrics score > 20 (arbitrary, but this did remove some of the older papers that first came out when Altmetrics wasn’t yet a thing — i.e., I’m old now), I recorded the paper’s latest Altmetrics score, its citation rate (total Google citations ÷ number of years since first published), and the 2018 Impact Factor of the journal in which it was published. I also removed all papers published in 2019 because I thought it might skew the citation-rate data (i.e., not enough time elapsed to obtain many citations). There were 68 papers included in the final sample.
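The selection rules and the citation-rate calculation described above can be sketched roughly as follows. This is a minimal illustration, not the script used for the post: the `Paper` class, its field names, the helper functions and the three records are all made up, and the collection year of 2019 is an assumption.

```python
from dataclasses import dataclass


@dataclass
class Paper:
    """One publication record; all field names are hypothetical."""
    title: str
    year_published: int
    total_citations: int
    altmetric_score: float
    impact_factor_2018: float


def citation_rate(paper: Paper, current_year: int) -> float:
    """Total citations divided by the number of years since first publication."""
    years = current_year - paper.year_published
    if years <= 0:
        raise ValueError("paper is too recent to have a citation rate")
    return paper.total_citations / years


def select_sample(papers, min_altmetric=20.0, excluded_year=2019):
    """Keep papers with an Altmetrics score > 20 that were not published in 2019."""
    return [
        p for p in papers
        if p.altmetric_score > min_altmetric and p.year_published != excluded_year
    ]


# Made-up records for illustration only (not the data behind the post).
papers = [
    Paper("Hypothetical paper A", 2015, 80, 45.0, 4.0),
    Paper("Hypothetical paper B", 2019, 3, 300.0, 10.0),  # dropped: published in 2019
    Paper("Hypothetical paper C", 2012, 70, 12.0, 3.0),   # dropped: Altmetrics <= 20
]

sample = select_sample(papers)
for p in sample:
    # Assumes the data were collected in 2019.
    print(p.title, citation_rate(p, current_year=2019))
```

Filtering out the 2019 papers before computing the rate also avoids dividing by zero years.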

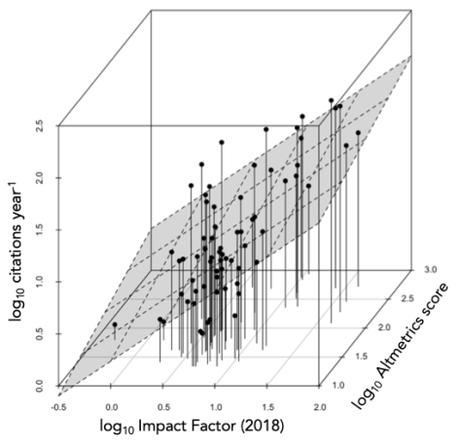

The relationships were highly skewed and a bit of a mess (see 3-D plot below), so I was forced to treat all relationships as power laws (log-log relationships). So keep that in mind for the interpretation of the estimated slopes.
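Written out, a log-log (power-law) version of the additive model looks like the sketch below. The symbols $a$, $b_{\mathrm{IF}}$ and $b_{\mathrm{ALTM}}$ are labels chosen here for the intercept and the two slopes, CITES, IF and ALTM stand for citation rate, Impact Factor and Altmetrics score, natural logarithms are assumed, and the error term is left out for brevity.

$$
\log(\mathrm{CITES}) = a + b_{\mathrm{IF}} \log(\mathrm{IF}) + b_{\mathrm{ALTM}} \log(\mathrm{ALTM})
\quad\Longleftrightarrow\quad
\mathrm{CITES} = e^{a}\,\mathrm{IF}^{\,b_{\mathrm{IF}}}\,\mathrm{ALTM}^{\,b_{\mathrm{ALTM}}}
$$

Under this form a slope is an exponent: it describes the proportional change in citation rate for a proportional change in the predictor, not an absolute change per unit.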

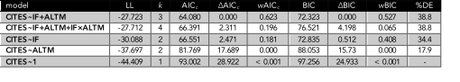

Running six general linear models, and comparing them using information criteria, I get the following results from my sample:

CITES = citation rate; IF = 2018 Impact Factor; ALTM = Altmetrics score; LL = log-likelihood; k = number of model parameters; AICc = Akaike’s information criterion (raw, Δ = difference to top model, and w = weight); BIC = Bayesian information criterion (raw, Δ = difference to top model, and w = weight); %DE = % deviance explained.
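For readers unfamiliar with these quantities, here is a rough sketch of how AICc, BIC, their Δ values and their weights are computed from a log-likelihood. The formulas are the standard ones, but the log-likelihoods and parameter counts below are invented for illustration and are not the values from the table; only the sample size of 68 comes from the post.

```python
import math


def aicc(log_lik: float, k: int, n: int) -> float:
    """Akaike's information criterion with the small-sample correction."""
    aic = -2.0 * log_lik + 2.0 * k
    return aic + (2.0 * k * (k + 1)) / (n - k - 1)


def bic(log_lik: float, k: int, n: int) -> float:
    """Bayesian information criterion."""
    return -2.0 * log_lik + k * math.log(n)


def deltas_and_weights(scores):
    """Differences to the best (lowest) score, and the resulting model weights."""
    best = min(scores)
    deltas = [s - best for s in scores]
    relative = [math.exp(-0.5 * d) for d in deltas]
    total = sum(relative)
    return deltas, [r / total for r in relative]


N = 68  # number of papers in the sample

# Hypothetical (log-likelihood, k) pairs; k is assumed to count the intercept,
# the slopes and the residual variance.
models = {
    "CITES ~ IF + ALTM": (-60.0, 4),
    "CITES ~ IF": (-63.0, 3),
    "CITES ~ 1": (-77.0, 2),
}

aicc_scores = [aicc(ll, k, N) for ll, k in models.values()]
bic_scores = [bic(ll, k, N) for ll, k in models.values()]
aicc_deltas, aicc_weights = deltas_and_weights(aicc_scores)
bic_deltas, bic_weights = deltas_and_weights(bic_scores)

for name, d, w in zip(models, aicc_deltas, aicc_weights):
    print(f"{name}: dAICc = {d:.2f}, wAICc = {w:.3f}")
```

Because BIC penalises each extra parameter by log(n) rather than 2, it tends to give more complex models less weight than AICc does once n exceeds about 7.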

The slope estimates for the top-ranked model were — Impact Factor: 0.665 (± 0.141) and Altmetrics: 0.248 (± 0.114).
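Because the model is log-log, these slopes act as exponents. The short sketch below converts them into the multiplicative change in citation rate expected when one predictor is doubled and the other is held fixed. The helper name `fold_change` is made up for this example, and the calculation uses only the point estimates, ignoring their uncertainty.

```python
# Point estimates reported for the top-ranked model.
SLOPE_IF = 0.665
SLOPE_ALTM = 0.248


def fold_change(slope: float, multiplier: float) -> float:
    """Multiplicative change in citation rate when a predictor is multiplied
    by `multiplier`, assuming a power-law (log-log) relationship."""
    return multiplier ** slope


for name, slope in [("Impact Factor", SLOPE_IF), ("Altmetrics", SLOPE_ALTM)]:
    print(f"Doubling {name}: citation rate x {fold_change(slope, 2.0):.2f}")
```

On these estimates, doubling a journal's Impact Factor goes with roughly a 59% higher citation rate, whereas doubling the Altmetrics score goes with roughly a 19% higher one.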

So, based on both Akaike’s information criterion (corrected for sample size) and the Bayesian information criterion, we can safely say that there is indeed an additive effect of Impact Factor and Altmetrics on citation rate (hypothesis 1), with the top-ranked model explaining 38.8% of the deviance. This model has a lot more weight than the Impact Factor-only model. You can see the effect graphically in the slight tilt of the fitted plane toward the viewer in the figure above. But if we look closely, most of the deviance explained is from Impact Factor (34.4%), meaning that only about 4.4% is additionally explained by Altmetrics.
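For reference, and assuming the usual definition of percentage deviance explained (the post does not spell it out), the comparison comes down to the following, where $D$ is a model's residual deviance and $D_{\mathrm{null}}$ is that of the intercept-only model:

$$
\%\mathrm{DE} = 100 \times \left(1 - \frac{D}{D_{\mathrm{null}}}\right),
\qquad
\%\mathrm{DE}_{\mathrm{IF+ALTM}} - \%\mathrm{DE}_{\mathrm{IF}} = 38.8 - 34.4 = 4.4
$$

For a Gaussian linear model the deviance is the residual sum of squares, so under that assumption %DE is equivalent to $R^2$ expressed as a percentage.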

Looking at hypothesis 2, it might appear that there’s quite a bit of support for the interaction model (the second-ranked model, with 6.5% and 19.6% of the BIC and AICc weight, respectively); however, its deviance explained is almost exactly the same as that of the top-ranked model. The extra interaction term therefore adds essentially no explanatory power, so we can safely say that there’s little support for an interaction effect, thus rejecting hypothesis 2.

What can I conclude from this extremely restricted sample? Well, it appears that higher social/traditional media exposure does impart a modest citation effect beyond what would be expected anyway, but it’s fairly weak. And there’s no support for Altmetrics inflating the citation potential of journals with an already high citation history.

Of course, it would be good to do this with a much larger sample of non-Corey papers to see if the relationships hold, but it’s nearly holiday time now and I can’t be bothered.

CJA Bradshaw