Over the past decade, data scraping has become a must-have for companies and individuals that work with, or simply need, large amounts of data. In the past, scraping was mostly done by hand, partly because there wasn’t that much data on the internet and partly because the early scraping services were very expensive.

Today, it is a different story. You have tons of scraper services to choose from, which can often cause confusion: which is the best, which is the cheapest, and so on. The bigger problem is proxies. Most scrapers rely on proxy addresses to avoid getting banned, but not all proxies work as advertised. As many web scrapers as there are, there are even more proxy providers. So which one should you go for?

To help you with your choice, today we are going to review Scrapinghub’s Crawlera so that you can decide whether it is a good service for your needs.

Official Website: Crawlera.io

Our Expert Rating and Test Results

Overall - 8.8/10

- Scraping Performance - 9.1/10

- Anonymity - 8.6/10

- Locations - 8.4/10

- Success Rate - 9.2/10

History

The story of Crawlera started way back in 2007, when founders Shane Evans and Pablo Hoffman got the idea to develop a crawler at a time when companies needed one. A year later, the first version was released under the name Scrapy, a fully open-source, Python-based framework designed for scraping and crawling.

Scrapinghub, as a company, was born two years later, in 2010. Since then, they have developed and released several tools offering a wide variety of services for a lot of companies. On their website, they claim to serve over 2,000 companies today, including many Fortune 500 companies, which says a lot.

Crawlera, as we know it today, was released in 2012. The service was advertised as a proxy solution for web scrapers: a proxy management layer that provides the reliability people were looking for from proxy services. In simple terms, it can grab data from websites and manage proxies at the same time.

Features

One of the main features that Crawlera is happy to promote is the simulation of human behavior. In practice, it simulates various browsers and browser versions for each request so that the website’s server doesn’t get suspicious.

Another great feature is the ability to detect banned proxy addresses and exclude them from the pool for the request that is currently running. To keep your IP addresses from getting banned in the first place, the service uses intelligent IP rotation and management. Combined, the two keep the number of banned IP addresses to a minimum and keep the banned ones out of the way.
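To make the idea of rotation with ban exclusion more concrete, here is a toy sketch in Python. It is not Crawlera’s implementation, which is not public in this review; the `ProxyPool` class, its method names, and the example addresses are all invented for illustration, and real ban detection is far more involved than a manual `mark_banned` call.

```python
from collections import deque


class ProxyPool:
    """Toy round-robin pool that stops handing out banned addresses."""

    def __init__(self, addresses):
        self._active = deque(addresses)  # proxies still in rotation
        self.banned = set()              # proxies taken out of rotation

    def next_proxy(self):
        """Return the next active proxy and move it to the back of the queue."""
        if not self._active:
            raise RuntimeError("no usable proxies left in the pool")
        proxy = self._active[0]
        self._active.rotate(-1)
        return proxy

    def mark_banned(self, proxy):
        """Exclude a proxy from all future rotations."""
        if proxy in self._active:
            self._active.remove(proxy)
            self.banned.add(proxy)
```

A scraper built this way would call `next_proxy()` before each request and `mark_banned()` whenever a response looks like a ban. The appeal of a managed service is that this bookkeeping happens on their side.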

The last of the main features is scalability. It doesn’t matter whether you need to scrape something off a single homepage or you need thousands of records; Crawlera can adapt to whatever requirements you have.

Subscription Prices

The pricing plans that Crawlera offers are pretty straightforward. At the time of writing, they have five plans: C10, C50, C100, C200, and Enterprise. The first four are similar, with a few differences. The main ones are the number of concurrent requests and the number of monthly requests.

C10 offers 10 concurrent requests, C50 offers 50, C100 has 100, and C200 has 200. The monthly request limits are as follows: C10 – 150k monthly requests, C50 – 1 million, C100 – 3 million, and C200.

Regarding the other features, all four packages are similar, offering 24/5 (yes, five days a week) support, unlimited bandwidth, and custom user agents.

The biggest and boldest package is Enterprise, where everything is included and everything is unlimited. You can make as many monthly and concurrent requests as you want. On top of that, you also get 24/7 priority support, dedicated IP addresses, the ability to use residential IPs, geo-targeting, and personalized onboarding.

As you can see, the packages are separated in a way so that you can choose the one that suits your needs the best. This includes everything from small scraping projects for personal use or small companies to big data harvesting projects for enterprise companies.

Crawlera offers a 7-day trial on the Enterprise package only. As for the other packages, you can get a 7-day money-back guarantee, but you would still need to go through the process of payment.

How to use Crawlera

Unlike some of its competitors, Crawlera does not offer a visual interface for scraping. For example, import.io has an interface where you can visually select the data that you want to grab. Crawlera works only with commands and code.

Once you choose the plan you want to use and set everything up, you will need to log in to the dashboard where you can grab the API key that you will be using for the scraping.

Since Crawlera can be used from various programming languages, and each one has several different parameters that can be changed, we will not go into great detail. Instead, we will cover the basics and the parameters that you can change.

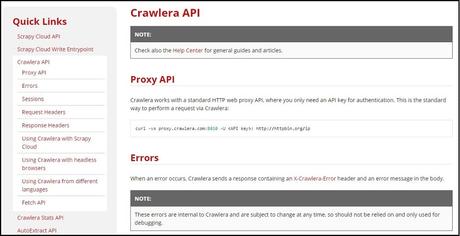

The most basic usage is the following:

curl -vx proxy.crawlera.com:8010 -U <API key>: http://httpbin.org/ip

This request fetches http://httpbin.org/ip through Crawlera, and all you need to edit is the API key. It is only good for testing whether your account is active and the API key is valid.
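For readers who prefer Python over the command line, the same check can be sketched with the standard library alone. This is a minimal sketch rather than an official client. The function names are made up for illustration, the proxy host, port, and target URL are taken from the curl command above, and the API key is sent as the proxy username with an empty password, which is what `-U <API key>:` does.

```python
import base64
import urllib.request

# Proxy host and port as they appear in the curl example.
CRAWLERA_PROXY = "proxy.crawlera.com:8010"


def proxy_auth_header(api_key):
    """Build the Basic auth value for 'API key as username, empty password'."""
    credentials = f"{api_key}:".encode("ascii")
    return "Basic " + base64.b64encode(credentials).decode("ascii")


def build_request(url, api_key):
    """Prepare a request that is routed through the proxy (nothing is sent yet)."""
    request = urllib.request.Request(url)
    request.set_proxy(CRAWLERA_PROXY, "http")
    request.add_header("Proxy-Authorization", proxy_auth_header(api_key))
    return request


def fetch(url, api_key, timeout=30.0):
    """Send the prepared request and return the response body as text."""
    request = build_request(url, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

Calling `fetch("http://httpbin.org/ip", "<API key>")` with a valid key performs the same test as the curl command. The sketch only has plain `http://` targets in mind, like the one in that example.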

Once you are past the basics, you get a few more options that you can add or modify. For starters, you can set up sessions, which make Crawlera use the same slave for every request for up to 30 minutes.
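On the client side, working with sessions mostly means remembering a session ID and knowing when the 30-minute window has passed. The sketch below shows one way to track that in Python. It rests on several assumptions that should be checked against the official documentation: that sending `create` in the `X-Crawlera-Session` header asks for a new session, that the service hands back an ID to reuse, and that counting 30 minutes from the moment the ID is stored is a safe, conservative reading of the limit. The `SessionTracker` class itself is hypothetical.

```python
import time

SESSION_LIFETIME_SECONDS = 30 * 60  # the 30-minute window mentioned above


class SessionTracker:
    """Remember one session ID and ask for a new one once it is too old."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock          # injectable so the timing can be tested
        self._session_id = None
        self._stored_at = None

    def remember(self, session_id):
        """Store the ID returned by the service and note when we got it."""
        self._session_id = session_id
        self._stored_at = self._clock()

    def header_value(self):
        """Value to send in X-Crawlera-Session on the next request."""
        if self._session_id is None or self._expired():
            self._session_id = None
            return "create"  # assumption: requests a fresh session
        return self._session_id

    def _expired(self):
        return self._clock() - self._stored_at > SESSION_LIFETIME_SECONDS
```

A scraper would send `tracker.header_value()` with every request and call `tracker.remember(...)` whenever the service returns a new session ID.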

You can also use request headers to modify the behavior of the crawler and make it more human-like. There are multiple parameters that can be used here:

- X-Crawlera-UA (User-Agent Behavior)

- X-Crawlera-Profile (Create profiles for crawling that you can use repeatedly)

- X-Crawlera-No-Bancheck (Disable ban checks)

- X-Crawlera-Cookies (Enable or disable cookies)

- X-Crawlera-Timeout (Modify timeout time)

- X-Crawlera-Session (Create, modify or delete sessions)

- X-Crawlera-JobId (Identifications for different crawling processes)

- X-Crawlera-Max-Retries (Modify the number of maximum retries)

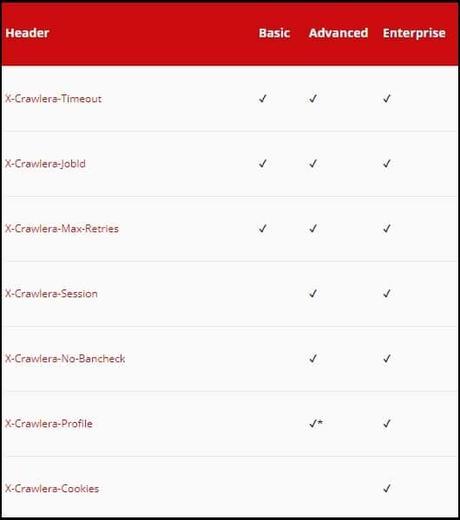

Each one has specific settings that you can review in greater detail in the documentation. Bear in mind that not all of these parameters are available for all packages.
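Because these are ordinary request headers, a small helper can catch typos in their names before a request goes out. The Python sketch below checks names against the list in this review and nothing more. The helper name `crawlera_headers` is invented, and the example values at the bottom are placeholders rather than documented settings, so the accepted values for each header still need to be looked up in the documentation.

```python
# Header names exactly as listed in this review.
KNOWN_HEADERS = (
    "X-Crawlera-UA",
    "X-Crawlera-Profile",
    "X-Crawlera-No-Bancheck",
    "X-Crawlera-Cookies",
    "X-Crawlera-Timeout",
    "X-Crawlera-Session",
    "X-Crawlera-JobId",
    "X-Crawlera-Max-Retries",
)


def crawlera_headers(options):
    """Return a headers dict with canonical names, rejecting unknown ones."""
    canonical = {name.lower(): name for name in KNOWN_HEADERS}
    headers = {}
    for name, value in options.items():
        key = canonical.get(name.lower())
        if key is None:
            raise ValueError(f"not a known Crawlera header: {name}")
        headers[key] = str(value)  # header values are always sent as text
    return headers


# Placeholder values for illustration only; check the docs for real ones.
example = crawlera_headers({"x-crawlera-timeout": 40000, "X-Crawlera-Max-Retries": 1})
print(example)
```

The resulting dictionary can be passed to whichever HTTP client you use, alongside the proxy settings.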

Speaking of headers, you can also customize the return headers on Crawlera and bypass the default settings and parameters.

Crawlera can be used with different programming languages, and those are:

- Python

- PHP

- Ruby

- Node.js

- Java

- C#

Ease of use

The main question: is Crawlera easy to use? To be honest, no. Unlike some of its competitors, such as the previously mentioned import.io, this is a service that will take some time to learn. If you are a beginner, you might have a hard time getting to grips with it, and you might wonder whether it’s worth spending so much time on learning it or whether you should go for a competitor.

Customer Support

This is where Crawlera shines. For starters, you get 24/5 support for the first four packages. Some people might be put off by this, but we believe that it wouldn’t be a problem, simply because most people work from Monday to Friday, so no fuss there. The most expensive package, Enterprise, offers 24/7 priority support, and they do their best to help you out if you have a problem or guide you if you are not sure what to do.

Human support aside, their FAQ and Documentation sections have all the information, examples, and explanations that you need to get started. They only cover simple examples, but the explanations of the parameters are more than enough for you to see how everything works.

Our verdict – Is Crawlera recommended?

So, is Crawlera any good? The answer is a bit complicated. Crawlera is good and not so good at the same time.

On the one hand, you have an excellent scraper with a built-in proxy management service that is pretty smart, does its best to avoid bans, and manages everything automatically so that you don’t have to.

On the other hand, you have a service that you need to set up yourself, which can get slightly technical. The documentation will help, and the support staff are there as additional backup, but at the end of the day, there is a lot of typing involved.

To summarize, if you are scraping for the first time and haven’t written a single line of code or a single command, you are better off looking for another scraper. But if you don’t mind getting your hands dirty and playing around with the commands and parameters, then you should give it a try. If it doesn’t suit your needs or is too complicated, you have seven days to get your money back.