Most augmented reality applications, which enhance the world around us by overlaying digital content on images displayed on smartphone, tablet or computer screens, are aimed squarely at sighted users. A team from the Fluid Interfaces Group at MIT’s Media Lab has developed a chunky finger-worn device called EyeRing that translates images of objects captured through a camera lens into aural feedback to aid the blind.

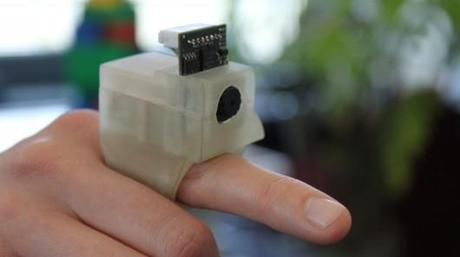

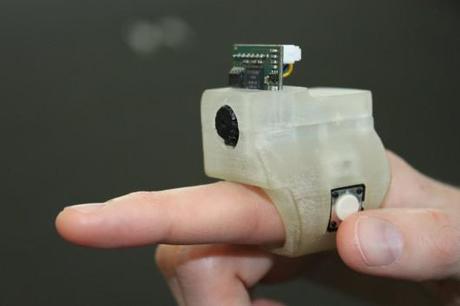

The EyeRing system was created by Suranga Nanayakkara (who currently directs the Augmented Senses Research Group at Singapore University of Technology and Design), PhD student Roy Shilkrot, and Pattie Maes, associate professor and founder of the Fluid Interfaces Group. It features a 3D-printed ABS nylon outer housing containing a small VGA camera unit, a 16 MHz AVR processor, a Bluetooth radio module and a 3.7V Li-ion battery. There’s also a mini-USB port for charging the battery and reprogramming the unit, a power on/off switch and a thumb-activated button for confirming commands.

The user points the ring’s camera at an object, tells the system what kind of information is needed via a microphone on the earphone cord and then clicks the button on the side of the unit. The camera snaps a photo and sends it to a Bluetooth-linked smartphone. A specially developed Android app processes the image using computer-vision algorithms according to the preset mode selected by the user, then uses a text-to-speech module to announce the results through earphones plugged into the smartphone.

The proof-of-concept EyeRing system can currently identify currency, text, pricing information on tags, and colors. The preset mode can be changed by double-clicking the button and speaking a different command into the microphone. The results are also displayed in text form on the screen of the smartphone.
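
For readers who think in code, the click-and-speak interaction described above could be modeled roughly as follows. This is only a minimal sketch, not the team's Android app: the `EyeRingSession` class, the four handler functions, the mode names and the default mode are all hypothetical placeholders, and the handlers return fixed strings where the real system would run its computer-vision algorithms.

```python
from typing import Callable, Dict


# Hypothetical placeholders: the real app runs computer-vision algorithms here,
# and the article does not describe how they work.
def identify_currency(image: bytes) -> str:
    return "currency: (recognition result)"


def read_text(image: bytes) -> str:
    return "text: (recognition result)"


def read_price_tag(image: bytes) -> str:
    return "price: (recognition result)"


def identify_color(image: bytes) -> str:
    return "color: (recognition result)"


class EyeRingSession:
    """Tracks the preset mode and routes each photo to the matching handler."""

    def __init__(self, mode: str = "currency") -> None:
        self.handlers: Dict[str, Callable[[bytes], str]] = {
            "currency": identify_currency,
            "text": read_text,
            "price": read_price_tag,
            "color": identify_color,
        }
        self.mode = mode  # assumed default; the article does not name one

    def double_click(self, spoken_command: str) -> str:
        """Double-click plus a spoken command switches the preset mode."""
        command = spoken_command.strip().lower()
        if command in self.handlers:
            self.mode = command  # unrecognized commands leave the mode as-is
        return self.mode

    def single_click(self, image: bytes) -> str:
        """Single click: process the photo and return the text to be spoken."""
        return self.handlers[self.mode](image)


if __name__ == "__main__":
    session = EyeRingSession()
    session.double_click("color")
    print(session.single_click(b"raw image bytes"))
```

In this sketch the string returned by `single_click` stands in for what would be handed to the text-to-speech module and shown on the phone's screen.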

Other applications for the system include cane-free navigation. The current version determines the distance from objects by comparing two images taken by the camera, and can even create a 3D map of the surrounding environment. Future implementations might include the ability to shoot live video and provide all sorts of useful information to the wearer.
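
The article does not say how the two images are compared. One common way to estimate distance from a pair of views is pinhole-camera triangulation, where distance equals focal length times the baseline (how far the camera moved between shots) divided by the disparity (how far the object shifted between the two images). The sketch below assumes that textbook model; the function name and the example numbers are illustrative and are not taken from the EyeRing project.

```python
def distance_from_disparity(focal_length_px: float,
                            baseline_m: float,
                            disparity_px: float) -> float:
    """Pinhole triangulation: distance = focal length * baseline / disparity.

    focal_length_px: camera focal length, in pixels
    baseline_m:      distance between the two camera positions, in meters
    disparity_px:    horizontal shift of the object between images, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero means no measurable shift")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Illustrative values only: 500 px focal length, 5 cm of camera movement,
    # and a 10 px shift of the object between the two photos.
    print(distance_from_disparity(500.0, 0.05, 10.0), "meters")
```

With these made-up numbers the object would be about 2.5 meters away. Nearby objects shift more between the two views than distant ones, which is why a larger disparity yields a shorter distance.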

The EyeRing project is still very much a work in progress, with Maes confirming that any moves toward commercialization are likely at least two years away. The group is already working on an iOS flavor, though, and size and shape refinements are certain to be made.

Single-user tests with a volunteer have confirmed the existing system’s assistance potential, but the addition of more sensors (such as an infrared light source or a laser module, a second camera, a depth sensor or inertial sensors) to the finger-worn device could open it up to whole new levels of usefulness.

Source: MIT