Eye-hand coordination

But before I get to that I want to acknowledge what seems to me some remarkable work by researchers at OpenAI, a robotic hand that solves Rubik’s Cube. The cube-solving algorithm is (old school) symbolic (as Gary Marcus points out), but visual perception and manipulation are achieved by (new school) neural networks. I think the visuo-manipulative work is wonderful.

But does it display intelligence? I don’t much care. I think it’s wonderful. And perhaps the most wonderful aspect is the interaction between visual perception on the one hand and hapsis (touch) and movement on the other. My instinct, my intuition, is to say that THERE, in that intermodal coordination, is where you’re going to get “intelligence”, whatever that is. Why? Because that interaction is just a bit more abstract than either perception or movement alone; it must take place in an abstract space that encompasses both but isn’t OF either.

Of course, back in the old school world of symbolic intelligence, the sensorimotor world of manipulation was peripheral to intelligence, which was about things like theorem proving, chess, and expert systems of scientific and technical knowledge. That began to change, I believe, in the 1970s. For one thing, work on natural language forced researchers to integrate perception (of the auditory signal) with cognition (semantic meaning). And that’s when things began to collapse.

But we needn’t go into that here.

So what?

I remember reading Searle’s (in)famous Chinese Room argument when it came out in Behavioral and Brain Sciences in, I believe, 1980, and being both puzzled and unimpressed. By that time I’d been reading quite widely in computational linguistics and had written a dissertation in which I made use of computational semantics in analyzing a Shakespeare sonnet and in discussing narrative order. Searle’s argument simply did not connect with any of the many concepts and techniques used in modeling thought. Understanding Searle’s argument wasn’t going to help us develop better computer models, nor, for that matter, would it be of much use to psychologists and neuroscientists.

Just what was it good for?

I suspect the same is true for the older arguments of Hubert Dreyfus, which I’ve never read in full. Of course, where Searle was arguing within the Anglo-American analytic philosophical tradition, Dreyfus was a Heideggerian arguing within the Continental tradition. The intellectual style is different, but the result is the same.

At the moment I’ve been looking at an article Dreyfus published in 2007, “Why Heideggerian AI failed and how fixing it would require making it more Heideggerian” (Artificial Intelligence 171, 2007, 1137-1160). He reviews a half century of work in AI, including the line of research pioneered by Rodney Brooks in the 1980s, which he finds akin to an idea advanced by Merleau-Ponty, “that intelligence is founded on and presupposes the more basic way of coping we share with animals” (p. 1141), an idea that requires giving up on the notion of internal symbolic representations of the world. That, as far as I can tell, is what he means by Heideggerian AI. He goes on to critique other varieties of “pseudo-Heideggerian AI” until he arrives at Walter Freeman’s “Merleau-Pontian” neurodynamics (pp. 1150 ff.).

Of which he approves. Nor am I surprised at this. I’d read quite a bit of Freeman when I was working on my book about music, Beethoven’s Anvil, and adopted his neurodynamics as the basic framework in which to understand music as a medium of group interaction. I had quite a bit of correspondence with him and knew he was talking to Dreyfus and, for that matter, knew he was interested in Continental philosophy. But, I wonder, how much did he actually owe to Continental philosophy?

I don’t know. Freeman’s neurodynamic approach was pretty mature by the time he had these conversations with Dreyfus. It’s possible that he’d been influenced by Continental thought early in his career, as I had been, but I don’t actually know that. As far as I know, Freeman did all the work: laboratory observation, mathematical analysis, and computational modeling. Continental philosophy came late to his game and perhaps more as a vehicle for presenting his ideas to a wider intellectual community, and in opposition to AI, than as a fundamental source of technical insight.

For that is what is required, technical insight. Did he get technical insight from early-career reading of Continental thought? I don’t know.

A bit about how I got here

While I have never really thought the brain/mind was computational top-to-bottom (at least I don’t think I did), I have long been fascinated by the insight computation affords us into the mind, and I currently believe that some aspects of the mind are irreducibly computational. But not the whole shebang, top-to-bottom.

And I was certainly influenced by Continental thought in my undergraduate years, Merleau-Ponty in particular. I studied his Phenomenology of Perception, not for any course, but on my own, underlining passages in two or three colors, making marginal annotations, and indexing key passages on the end pages. Perhaps that “inoculated” me against going whole-hog for a computational view of the mind.

I was also strongly influenced by Lévi-Strauss, also, of course, a Continental thinker, but of a somewhat different style. As I was interested in literature, it was his thinking on symbolic systems and, above all, mythology, that captured my attention. I liked his tables, diagrams, and pseudo-mathematical expressions. It seemed to me that if THAT’s what was going on in myth, then we needed more of it. And that, in turn, led me to the cognitive sciences (by way of “Kubla Khan” [1]).

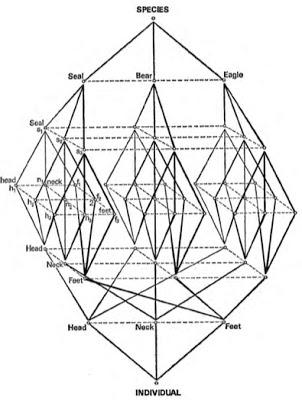

When I read The Savage Mind I was particular struck by what he called the totemic operator:

There’s no need to explain it. I just liked it.

That would have been in, say, 1966 or 1967. In 1969 Karl Pribram published an article in Scientific American in which he argued that the hologram provided a good model for memory in the nervous system. I read the article carefully, no doubt several times, examined the illustrations, and concluded, hmmm, that’s a bit like Lévi-Strauss’ totemic operator. Just why I concluded that would be complicated and tiresome to explain. It is sufficient for my present purposes that I reached that conclusion. And I went on to read all the Pribram I could find. Wouldn’t you know, Walter Freeman had worked with Pribram.

And what I got from Pribram is certainly one of the things that kept me from accepting the mind-as-computer wholesale. Something else was going on, and holograms were a better metaphor than computers. And so, for that matter, are analog control systems, which David Hays and I incorporated into our thinking by adopting some ideas from William Powers, Behavior: The Control of Perception (1973).

Again I ask, so what?

I suppose that, to some extent, I’m being driven by how those anti-computation arguments – by Searle, Dreyfus, and others – are used. The philosophers can think whatever they want. I’m particularly concerned about students of literature. For example, form is an important concept, but the profession (of academic literary scholars) does not have a consensus view of it. I believe that without adopting the idea of computation, in appropriate measure, we cannot formulate a coherent account of literary form, something I argued at length in 2006, though not quite in those terms [2].

In THIS intellectual context, Dreyfus/Searle kinds of arguments can be used to defend the border between “true humanities” and the depredations of compu-think. It’s all well and good for Dreyfus to endorse Freeman, but humanist readers of his arguments are unlikely to actually read Freeman, much less consider his ideas. They may embrace “complexity”, but mostly as a word trailing a mathematical aura, nothing more. There is plenty of THAT to go around, but it’s not worth much.

So where does that leave us? I’m not sure. I guess the question is whether or not those arguments ever lead anyone across the borders or, for that matter, whether they prompt computationalists to take a deeper view. I don’t know.

References

[1] William Benzon, Beyond Lévi-Strauss on Myth: Objectification, Computation, and Cognition, working paper, February 2015, 30 pp. See especially section 4, “Into Lévi-Strauss and Out Through ‘Kubla Khan’”. You will find a downloadable PDF here, https://www.academia.edu/10541585/Beyond_L%C3%A9vi-Strauss_on_Myth_Objectification_Computation_and_Cognition.

[2] William Benzon, Literary Morphology: Nine Propositions in a Naturalist Theory of Form, PsyArt: An Online Journal for the Psychological Study of the Arts, August 2006, Article 060608. You will find a downloadable PDF here, https://www.academia.edu/235110/Literary_Morphology_Nine_Propositions_in_a_Naturalist_Theory_of_Form.