What is an apple?

"It's not even a difficult question. We all know what an apple is," you're screaming at the screen.

But do we all know what an apple is?

So, if we add one Braeburn to one Granny Smith and express the sum in a shared, common brand term, is the answer two BraeSmiths or two GrannyBurns?

Why is this so difficult? Why should adding one to one give us so many problems? Well, 'math' is a derivative or offshoot of The Counting System, where a 'basket' is filled with 'apples' until it's full, and the contents are 'countable'. Right?

Apple is the tacitly agreed term for a fruit of the apple tree that is basically the same as all other fruit of the apple tree, within arbitrary boundaries of size and shape and taste and value. But then what is 'cider', measured in 'apples'? And what is an 'apple pie', taking into account the additional shared terms like flour, sugar, grease?

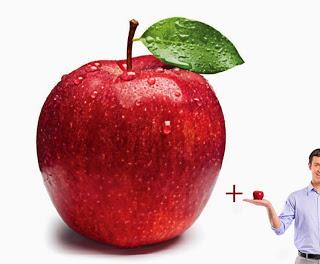

"It's EASY," says math. We just strip it down to its basic component one apple plus one apple = two apples. So what about this random case among millions, "Giant-sized apple plus normal-sized apple?" 1 + 1 =/= 2. How many apples now? What is our true definition of apple? What is our true definition of a 'countable unit'?

My basic contention is this: math is (fundamentally) broken without this 'little lie' we tell ourselves every time we use it. You can't just strip the ONE-ness from Item A and apply it to Item B to count its unit value or worth or one-ness. The one-ness of a snail can't be reapplied to an Aston Martin DB10; there can be no such thing as mathematically-adoptive-oneness.

That is as absurd as saying Zero and Infinity actually exist. If ONE doesn't exist, then all branches of math containing 'one' don't exist, or are imaginary. So, when we 'count' or 'use math' what are we really doing?