I now want to take a look at AI, chess, and language from a Piagetian point of view. While he is best known for his work in developmental psychology, Piaget was also interested in the development of concepts over historical time, which he called genetic epistemology, and more generally, in the construction of mental mechanisms. He was particularly interested in abstraction and in something he called reflective abstraction. The concept is a slippery one. Ernst von Glasersfeld has a useful account (Abstraction, Re-Presentation, and Reflection: An Interpretation of Experience and of Piaget’s Approach) from which I abstract, if you will, perhaps an over-simplified idea, which is that major steps in cognitive evolution involve taking a mechanism that is operative at one level and making it an object which is operated on by mechanisms at a new and higher level.

Let us take chess as an example. We know that all possible chess games can be arranged as a tree, something we examined earlier in “Search! What enables us to entertain the idea that chess is a paradigmatic case of cultural evolution?” But we have only known that since the mathematician Ernst Zermelo published “Über eine Anwendung der Mengenlehre auf die Theorie des Schachspiels” (“On an application of set theory to the theory of chess”) in 1913. Ever since the game emerged, players have been exploring that tree, but without explicitly knowing it. It was only when Zermelo had published the idea that the chess tree became an object that could be explicitly examined and explored as such.

I don’t know when that idea crossed into the chess world. In a quick search I found out that Alexander Kotov used it in a book, Think Like a Grandmaster, which was translated into English in 1971. Kotov wrote of building an “analysis tree.” I assume that chess players became aware of the idea sooner than that, perhaps not long after Zermelo’s paper was published. In any event, for my present purposes, the date is irrelevant. What is important is simply that it happened. The tree structure has been central to all work in computer chess.
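A toy sketch may make concrete what it means for a game tree to become an object one can build and operate on. Chess is far too large to enumerate, so the sketch below assumes a much smaller stand-in game: a Nim variant in which two players alternately take one or two stones from a pile and whoever takes the last stone wins. The names `Node`, `build_tree`, `count_nodes`, and `minimax` are illustrative only and are not drawn from Zermelo, Kotov, or any chess program.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    stones: int    # stones left in the pile (the "position")
    to_move: int   # +1 if the first player is to move, -1 for the second
    children: List["Node"] = field(default_factory=list)


def build_tree(stones: int, to_move: int = 1) -> Node:
    """Build the complete game tree for the toy game (take 1 or 2 stones)."""
    node = Node(stones, to_move)
    for take in (1, 2):
        if take <= stones:
            node.children.append(build_tree(stones - take, -to_move))
    return node


def count_nodes(node: Node) -> int:
    """Count every position in the tree, treating the tree itself as data."""
    return 1 + sum(count_nodes(child) for child in node.children)


def minimax(node: Node) -> int:
    """Return +1 if the first player wins with best play, -1 otherwise."""
    if not node.children:
        # No stones left: the player who just moved took the last stone and won.
        return -node.to_move
    values = [minimax(child) for child in node.children]
    # The first player picks the best outcome for +1, the second for -1.
    return max(values) if node.to_move == 1 else min(values)


if __name__ == "__main__":
    tree = build_tree(4)
    print(count_nodes(tree), minimax(tree))
```

The players in such a game explore the tree whether or not they know it exists. Functions like `count_nodes` and `minimax` only become possible once the tree has been made an explicit object.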

The tree structure is central to the activity of search. But there is more to chess than searching for possible moves. The moves must be evaluated and a strategy has to be executed. Various means have been developed to do those things with the result that computers can now play chess better than any human. Chess is a “solved” problem. And the components of various solutions are objects for explicit examination and design.

Almost.

Unlike earlier chess programs, which were based completely on symbolic technology, some of the most recent chess programs, such as AlphaZero, use neural nets for the evaluation function. What those nets are doing is opaque. We know how to build them, but we don’t know what they do.

And that brings us to language.

Language has been investigated for centuries. More specifically, it has been subject to formal analysis for the last three quarters of a century, but cognitive scientists have come to little agreement about syntax, much less semantics. Nonetheless, large language models are now capable of very impressive language performance. Like all neural network models, however, these models are opaque. But what if we could figure out how they worked internally?

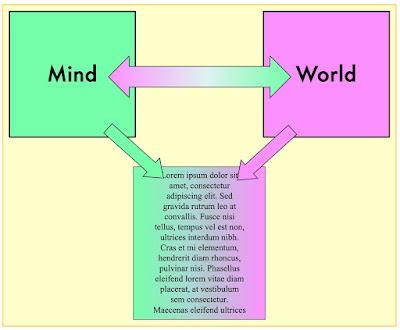

Consider following diagram, which I have commented about in my paper on GPT-3: GPT-3: Waterloo or Rubicon? Here be Dragons:

Texts are one product of the interaction of the human mind and the world. LLMs are trained on large bodies of these texts. It follows that the internal structure of these models must somehow, we don’t know how, reflect the nature of that interaction. If we could understand the internal structure of these models, wouldn’t that be a reflective abstraction over the processes of the human mind in the same way that the chess tree is a reflective abstraction over the human mind as it is engaged in the game of chess?

Yes, the chess tree is not all of chess, but only a part. And we know how to augment that part with evaluation functions. Figuring out how LLMs work would not be equivalent to knowing how the mind works, but might be a start. To know and be able to manipulate the conceptual structure that is latent in LLMs, that would be a major intellectual accomplishment.