A collection of articles for learning the basics of neural networks

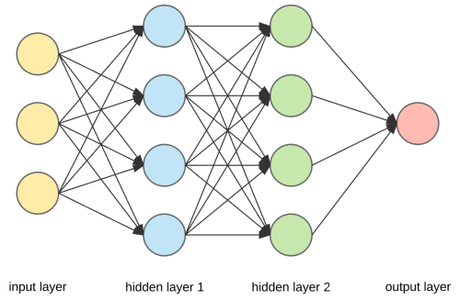

This series introduces the basic concepts of neural networks using a do-it-yourself approach. More specifically, students will learn how a neural network is structured as a collection of perceptrons arranged in multiple layers. These systems can be trained using backpropagation and gradient descent. The neural network examples are implemented in Python without the need for any particular libraries.

https://aaidm.buas.nl/?page_id=87

We define Artificial Intelligence (AI for short) as the theory and development of computer systems able to perform tasks that normally require human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages.

Artificial Intelligence Applications

Perceptrons are the basic building blocks of neural networks. In short, they are algorithms for supervised learning of binary classifiers. A binary classifier is a function that decides whether or not an input, represented by a vector of numbers, belongs to some specific class.

What is a Perceptron
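To make the definition above concrete, here is a minimal sketch of a perceptron in plain Python. It is an illustration under stated assumptions, not necessarily the code used in the linked article: the function names, the step activation with a threshold at zero, the learning rate of 1.0, and the logical AND training set are all choices made for this example.

```python
def predict(weights, bias, inputs):
    """Binary classifier: return 1 if the weighted sum reaches the threshold, else 0."""
    activation = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if activation >= 0 else 0


def train_perceptron(samples, learning_rate=1.0, epochs=20):
    """Learn weights and bias from labelled samples with the perceptron update rule."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            # error is +1, 0 or -1; nothing changes when the prediction is correct
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Assumed toy data set: the logical AND of two binary inputs (linearly separable).
AND_SAMPLES = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]

weights, bias = train_perceptron(AND_SAMPLES)
predictions = [predict(weights, bias, inputs) for inputs, _ in AND_SAMPLES]
print(predictions)
```

A single perceptron can only separate classes with a straight line (or hyperplane), which is why AND works here while a problem such as XOR needs several perceptrons arranged in layers.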

Image recognition is a classic example of where neural networks are used. For instance, a network can learn to identify images that contain dogs by analyzing example images that have been manually labelled as “dog” or “no dog”. Once the system has “learned” the difference, it can be used to identify dogs in other images.

The Basics of a Neural Network

Gradient descent is a first-order iterative optimization algorithm for finding a local minimum of a differentiable function. To find a local minimum of a function using gradient descent, we take steps proportional to the negative of the gradient (or approximate gradient) of the function at the current point.

Gradient Descent and Cost Functions
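The update rule described above (step against the gradient, scaled by a learning rate) can be sketched in a few lines of plain Python. The cost function f(x) = (x - 3)^2, the starting point, the learning rate, and the function names are assumptions chosen for illustration.

```python
def gradient_descent(gradient, start, learning_rate=0.1, steps=100):
    """Repeatedly step in the direction of the negative gradient."""
    x = start
    for _ in range(steps):
        x -= learning_rate * gradient(x)
    return x


def cost(x):
    """Assumed example cost function with its minimum at x = 3."""
    return (x - 3) ** 2


def cost_gradient(x):
    """Derivative of the cost function above."""
    return 2 * (x - 3)


minimum = gradient_descent(cost_gradient, start=0.0)
print(minimum, cost(minimum))
```

With this particular cost function, each step shrinks the distance to the minimum by a constant factor, so the result ends up very close to 3. A learning rate that is too large would make the steps overshoot instead of converge.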

In machine learning, backpropagation is a widely used algorithm for training feedforward neural networks in supervised learning. Generalizations of backpropagation exist for other artificial neural networks and for functions in general; this whole class of algorithms is referred to generically as “backpropagation”.

Back-Propagation
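As a rough sketch of the idea, the snippet below applies backpropagation to the smallest possible feedforward network: one input, one hidden sigmoid neuron, and one sigmoid output neuron. The network shape, the squared-error loss, the initial parameter values, the learning rate, and the single training example are all assumptions made for this illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Forward pass: return the hidden activation and the network output."""
    w1, b1, w2, b2 = params
    hidden = sigmoid(w1 * x + b1)
    output = sigmoid(w2 * hidden + b2)
    return hidden, output


def loss(params, x, target):
    """Squared-error loss for a single training example."""
    _, output = forward(params, x)
    return 0.5 * (output - target) ** 2


def backward(params, x, target):
    """Backward pass: apply the chain rule from the output back to the input."""
    w1, b1, w2, b2 = params
    hidden, output = forward(params, x)
    delta_out = (output - target) * output * (1 - output)   # dL/dz at the output neuron
    delta_hidden = delta_out * w2 * hidden * (1 - hidden)   # dL/dz at the hidden neuron
    # Gradients in the same order as params: w1, b1, w2, b2
    return [delta_hidden * x, delta_hidden, delta_out * hidden, delta_out]


params = [0.5, -0.3, 0.8, 0.1]   # assumed starting values
x, target = 1.0, 0.0             # assumed training example
initial_loss = loss(params, x, target)

for _ in range(200):
    grads = backward(params, x, target)
    params = [p - 0.5 * g for p, g in zip(params, grads)]   # gradient descent step

final_loss = loss(params, x, target)
print(initial_loss, final_loss)
```

The error signal computed at the output (`delta_out`) is reused to compute the error signal of the hidden neuron (`delta_hidden`). That reuse is what lets backpropagation scale to networks with many layers.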

More on Back-propagation and practical aspects of AI

Genetic algorithms are an optimization technique inspired by biological evolution. When we design a neural network, we usually make many assumptions about its characteristics, for example the type of neuron activation, the number of hidden layers, the type of connections, and the way feedback is back-propagated. Since these aspects can be considered features of a particular network, a genetic algorithm could help in searching for the best configuration of these parameters.

Introduction to Genetic Algorithms and more practical aspects of AI
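The sketch below shows the basic loop of a genetic algorithm (selection, crossover, mutation) in plain Python. To stay short it does not train any network. Each genome is a list of bits standing in for a set of on/off configuration choices, and the fitness function simply counts the ones. The fitness function, the population size, the mutation rate, and the use of elitism are all assumptions made for illustration.

```python
import random


def fitness(genome):
    """Assumed toy fitness: the number of 1-bits (a stand-in for model quality)."""
    return sum(genome)


def evolve(genome_length=20, population_size=30, generations=40,
           mutation_rate=0.05, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(genome_length)]
                  for _ in range(population_size)]
    history = []  # best fitness seen in each generation
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        parents = population[: population_size // 2]  # selection: keep the fitter half
        children = [population[0][:]]                 # elitism: the best survives unchanged
        while len(children) < population_size:
            mother, father = rng.sample(parents, 2)
            cut = rng.randint(1, genome_length - 1)   # single-point crossover
            child = mother[:cut] + father[cut:]
            # mutation: flip each bit with a small probability
            child = [1 - gene if rng.random() < mutation_rate else gene
                     for gene in child]
            children.append(child)
        population = children
    best = max(population, key=fitness)
    history.append(fitness(best))
    return best, history


best, history = evolve()
print(fitness(best), history[0])
```

To apply the same loop to a neural network, the genome would encode design choices such as the activation type or the number of hidden layers, and the fitness function would train and evaluate a network built from that genome. That step is far more expensive than the toy fitness used here.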