Some years ago, back in the Jurassic Era, I imagined that one day I would have access to a computer system I could use to "read" literary texts, such as a play by Shakespeare. As such a system would have been based on a simulation of the human mind, I would be able to trace its operations as it read through a text - perhaps not in real time, but it would store a trace of those actions and I could examine that trace after the fact. Alas, such a system has yet to materialize, nor do I expect to see such a marvel in my lifetime. As for whether or not such a system might one day exist, why bother speculating? There's no principled way to do so.

Whyever did I believe such a thing? I was young, new to the research, and tremendously excited by it. And, really, no one knew what was possible back in those days. Speculation abounded, as it still does.

While I'll return to that fantasy a bit later, this post is not specifically about it. That fantasy is just one example of how I have been thinking about the relationship between the human mind and computational approximations to it. As far as I can recall I have never believed that one day it would be possible to construct a human mind in a machine. But I have long been interested in what the attempt to do so has to teach us about the mind. This post is a record of much of my thinking on the issue.

It's a long way through. Sit back, get comfortable.

When I was nine years old I saw Forbidden Planet, which featured a robot named Robby. In the days and weeks afterward I drew pictures of Robby. Whether or not I believed that such a thing would one day exist, I don't remember.

Some years later I read an article, either in Mechanix Illustrated or Popular Mechanics - I read both assiduously - about how Russian technology was inferior to American. The article had a number of photographs, including one of a Sperry Univac computer - or maybe it was just Univac, but it was one of those brands that no longer exists - and another, rather grainy one, of a Russian computer that had been taken from a Russian magazine. The Russian photo looked like a slightly doctored version of the Sperry Univac photo. That's how it was back in the days of electronic brains.

When I went to college at Johns Hopkins in the later 1960s one of the minor curiosities in the freshman dorms was an image of a naked woman ticked out in "X"'s and "O"'s on computer print-out paper. People, me among them, actually went to some guy's dorm room to see the image and to see the deck of punch cards that, when run through the computer, would cause that image to be printed out. Who'd have thought, a picture of a naked woman - well sorta', that particular picture wasn't very exciting, it was the idea of the thing - coming out of a computer. These days, of course, pictures of naked women, men too, circulate through computers around the world.

Two years after that, my junior year, I took a course in computer programming, one of the first in the nation. As things worked out, I never did much programming, though some of my best friends make their living at the craft. I certainly read about computers, information theory, and cybernetics. The introductory accounts I read always mentioned analog computing as well as digital, but that ceased some years later when personal computers became widespread.

I saw 2001: A Space Odyssey when it came out in 1968. It featured a computer, HAL, that ran a spacecraft while on a mission to Jupiter. HAL decided that the human crew endangered the mission and set about destroying them. Did I think that an artificially intelligent computer like HAL would one day be possible? I don't recall. Nor do I recall what I thought about the computers in the original Star Trek television series. Those were simply creatures of fiction. I felt no pressure to form a view about whether or not they would really be possible.

Graduate school (computational semantics)

In 1973 I entered the Ph.D. program in the English Department at the State University of New York at Buffalo. A year later I was studying computational semantics with David Hays in the Linguistics Department. Hays had been a first-generation researcher in machine translation at the RAND Corporation in the 1950s and 1960s and left RAND to found SUNY's Linguistics Department in 1969. I joined his research group and also took a part-time job preparing abstracts of the technical literature for the journal Hays edited, The American Journal of Computational Linguistics (now just Computational Linguistics). That job required that I read widely in computational linguistics, linguistics, cognitive science, and artificial intelligence.

I note, in passing, that computational linguistics and artificial intelligence were different enterprises at the time. They still are, with different interests and professional associations. By the late 1960s and 1970s, however, AI had become interested in language, so there was some overlap between the two communities.

In 1975 Hays was invited to review the literature in computational linguistics for the journal Computers and the Humanities. Since I was up on the technical literature, Hays asked me to coauthor the article:

William Benzon and David Hays, "Computational Linguistics and the Humanist", Computers and the Humanities, Vol. 10, 1976, pp. 265-274, https://www.academia.edu/1334653/Computational_Linguistics_and_the_Humanist.

I should note that all the literature we covered was within what has come to be known as the symbolic approach to language and AI. Connectionism and neural networks did not exist at that time.

Our article had a section devoted to semantics and discourse in which we observed (p. 269):

In the formation of new concepts, two methods have to be distinguished. For a bird, a creature with wings, its body and the added wings are equally concrete or substantial. In other cases something substantial is molded by a pattern. Charity, an abstract concept, is defined by a pattern: Charity exists when, without thought of reward, a person does something nice for someone who needs it. To dissect the wings from the bird is one kind of analysis; to match the pattern of charity to a localized concept of giving is also an analysis, but quite different. Such pattern matching can be repeated recursively, for reward is an abstract concept used in the definition of charity. Understanding, we believe, is in one sense the recursive recognition of patterns in phenomena, until the phenomenon to be understood fits a single pattern.

Hays had explored that concept of abstract concepts in an article on concepts of alienation. One of his students, Brian Phillips, was finishing a computational dissertation in which he used that concept in analyzing stories about drownings. Another student, Mary White, was finishing a dissertation in which she analyzed the metaphysical concepts of a millenarian community. I was about to publish a paper in which I used the concept to analyze a Shakespeare sonnet, "The Expense of Spirit" ("Cognitive Networks and Literary Semantics"). That set the stage for the last section of our article.

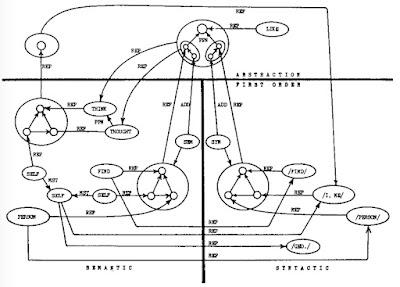

Diagram from "Cognitive Networks and Literary Semantics"

We decided to go beyond the scope of a review article to speculate about what might one day be possible (p. 271):

Let us create a fantasy, a system with a semantics so rich that it can read all of Shakespeare and help in investigating the processes and structure that comprise poetic knowledge. We desire, in short, to reconstruct Shakespeare the poet in a computer. Call the system Prospero.

How would we go about building it? Prospero is certainly well beyond the state of the art. The computers we have are not large enough to do the job and the architecture makes them awkward for our purpose. But we are thinking about Prospero now, and inviting any who will to do the same, because the blueprints have to be made before the machine can be built.

A bit later (pp. 272-273):

The state of the art will support initial efforts in any of these directions. Experience gained there will make the next step clearer. If the work is carefully planned, knowledge will grow in a useful way. [...]

We have no idea how long it will take to reach Prospero. Fifteen years ago one group of investigators claimed that practical automatic translation was just around the corner and another group was promising us a computer that could play a high-quality game of chess. We know more now than we did then and neither of those marvels is just around the current corner. Nor is Prospero. But there is a difference. To sell a translation made by machine, one must first have the machine. Humanistic scholars are not salesmen, and each generation has its own excitement. In some remote future may lie the excitement of using Prospero as a tool, but just at hand is the excitement of using Prospero as a distant beacon. We ourselves and our immediate successors have the opportunity to clarify the mechanisms of artistic creation and interpretation. We may well value that opportunity, which can come but once in intellectual history.

Notice, first of all, that we did not attempt to predict just when Prospero might be built. That was Hays speaking. He had seen the field of machine translation lose its funding in the mid-1960s in part because its researchers had wildly over-promised. Why did they do that? They were young and enthusiastic, the field was new and no one knew anything, and, of course, they wanted funding. He didn't believe there was any point in trying to predict the direction of results in the field.

But I wasn't so experienced. I believed that Prospero would arrive in, say, 20 years - a standard number for such predictions. I was wrong of course, but by the time the mid-1990s rolled around I was thinking about other things and didn't even notice that Prospero had failed to materialize.

Here is the final paragraph of that article (p. 273):

But when, or if, we or our descendants actually possess a Prospero, what exactly will it be? A system capable of writing new plays, perhaps, but what would it take to write a play as good as Shakespeare's worst? We can work on the representation of world views, and we can work also on linguistics and even on poetics, the control of interplay between sound and sense, but no one can say which is harder. Prospero may be an extremely sophisticated research tool; as a model of the human mind its foremost purpose is to help in the creation of a still better model of the mind and its evolution. Those who see a threat to playwright, scientist, or philosopher in the machine that can simulate thought must set a limit on the mind which is not implicit in any system with recursive abstractions. If we ever obtain a machine that can apply patterns of thought to the phenomena of life, we shall most likely ponder on the machine and ourselves for a while, and see then, as we cannot until the machine begins to help us, what a narrow sector, what a shallow stratum of the human essence is captured in the design of the machine.

I point out two key ideas in that paragraph. First, there is the idea of a "system with recursive abstractions." By that, in that particular context, Hays and I meant us, human beings. That was Hays's idea of abstract concepts which I discussed above. We can define abstraction upon abstraction and so forth, and we had explored ways of doing so. We are saying that computers will not outstrip us, something Nick Bostrom, among others, is likely to dispute.

The second key idea is that, in the process of creating a system which would, among other things, itself have some capacity for recursive abstraction, we would come to understand ourselves in a way we could not without having created such a machine. I still believe that, and I note that current systems, such as GPT-3, afford us a similar opportunity, despite the fact that they are based on artificial neural networks that are quite different from the symbolic systems that existed in the 1970s.

Beyond symbolic systems

I completed my degree in 1978 and took a faculty position at the Rensselaer Polytechnic Institute (RPI) in the Department of Language, Literature, and Communication. Though I continued to think about computational semantics, without the intellectual companionship of a work group, it was difficult.

I read John Searle's well-known Chinese Room argument when it came out in Behavioral and Brain Sciences in 1980 and was unimpressed. He didn't address any of the language mechanisms and models discussed in the technical literature. Rather, he argued that any computer system would lack intentionality and without intentionality there can be no meaning, no real thought. At the time "intentionality" struck me as being a word that was a stand-in for a lot of things we didn't understand. And yet I didn't think that, sure, someday computers will think. I just didn't find Searle's argument either convincing or even interesting.

A year later, 1981, I spent the summer with NASA working on a study of how computer science could catalyze a "space program Renaissance." There I read an interesting study entitled, "Replicating Systems Concepts: Self-Replicating Lunar Factory and Demonstration". The idea was to ship a lot of equipment and AI technology to the moon which would then proceed to construct a factory. The factory would avail itself of lunar materials in the manufacture of this, that, and the other, to include another factory which would avail itself of lunar materials in the manufacture of this, that, and the other, to include another factory which would ... and so forth. I don't recall whether I had any strong opinions about whether or not this would actually be possible. When I mentioned it to David Hays, though, he suggested that the difficult part might come in physical manipulation of objects. What interested me was the idea that, after a considerable investment in developing that equipment and shipping it to the moon, we could get the products of those factories for free, except, of course, for the cost of shipping them back to earth.

I left RPI in the mid-1980s and did this, that, and the other, including working as a technical writer for the MapInfo Corporation. And I continued collaborating with David Hays. During that period we wrote a paper in which we examined a wide range of work in cognitive science, neuroscience, developmental psychology, and comparative psychology with the object of formulating a (highly speculative) account of the human mind. We published the resulting paper in 1988:

William Benzon and David Hays, Principles and Development of Natural Intelligence, Journal of Social and Biological Structures, Vol. 11, No. 8, July 1988, 293-322. https://www.academia.edu/235116/Principles_and_Development_of_Natural_Intelligence

As the title suggests we regarded it as an antidote to ideas coming out of AI, but we said nothing about AI in the article, nor did we argue about whether or not the brain was a computer and, if so, what kind. We articulated five principles, each with a computational aspect, discussed their implementation in the brain, and placed them in the context of both human development and more general evolutionary development.

At roughly the same time we published a paper on metaphor:

William Benzon and David Hays, Metaphor, Recognition, and Neural Process, The American Journal of Semiotics, Vol. 5, No. 1 (1987), 59-80, https://www.academia.edu/238608/Metaphor_Recognition_and_Neural_Process.

The neural process we had in mind was the notion of neural holography that Karl Pribram had advocated in the 1970s, an idea that shares some mathematics with ideas that would emerge in AI (convolutional neural networks) and come into prominence in the second decade of this century.

Those two papers cannot be assimilated to the symbolic framework I had learned in Hays's research group at Buffalo. But even back then we didn't believe that symbolic computation would provide a complete model of thought. We knew it had to be grounded in something else and spent considerable time thinking about that in the context of the cybernetic account offered by William Powers, Behavior: The Control of Perception (1973). We were thinking about computation, but in fairly broad terms.

During that period, the 1980s, trouble was brewing in the AI world. There was a panel discussion about an "AI Winter" at the 1984 annual meeting of the American Association for Artificial Intelligence (AAAI), which was subsequently published in AI Magazine in the Fall of 1985 as "The Dark Ages of AI: A Panel Discussion at AAAI-84". I'm sure I read it, though I was more interested in an article by Ronald Brachman about knowledge representation in the same issue. I mention the panel discussion because it was lamenting the erosion of the era of symbolic computing in AI and the allied field of computational linguistics. That implied the demise of any prospect for building Prospero. I don't recall whether or not I made that connection at the time. I had moved on and was thinking in different terms, such as those in the two papers I just mentioned, the one on natural intelligence and the one on metaphor.

Hays and I continued our collaboration with a series of papers about cultural evolution, which were an outgrowth of some ideas I had developed in my dissertation. Here's the first, and most basic, of those articles:

William Benzon and David Hays, The Evolution of Cognition, Journal of Social and Biological Structures. 13(4): 297-320, 1990, https://www.academia.edu/243486/The_Evolution_of_Cognition

The paper is about the evolution of cognition in human culture, not biology, from the emergence of language through the proliferation of computer systems. Near the end we offer some remarks germane to my theme, the relation between human intelligence and artificial intelligence (p. 315):

Beyond this, there are researchers who think it inevitable that computers will surpass human intelligence and some who think that, at some time, it will be possible for people to achieve a peculiar kind of immortality by "downloading" their minds to a computer. As far as we can tell such speculation has no ground in either current practice or theory. It is projective fantasy, projection made easy, perhaps inevitable, by the ontological ambiguity of the computer. We still do, and forever will, put souls into things we cannot understand, and project onto them our own hostility and sexuality, and so forth.

A game of chess between a computer program and a human master is just as profoundly silly as a race between a horse-drawn stagecoach and a train. But the silliness is hard to see at the time. At the time it seems necessary to establish a purpose for humankind by asserting that we have capacities that it does not. It is truly difficult to give up the notion that one has to add "because ..." to the assertion "I'm important." But the evolution of technology will eventually invalidate any claim that follows "because." Sooner or later we will create a technology capable of doing what, heretofore, only we could.

I note that at the time we wrote that, no computer had beaten the best chess players; IBM's Deep Blue wouldn't win a match against Garry Kasparov until 1997. But that certainly wouldn't have changed what we wrote. We freely admitted that computers could do things that heretofore only humans could do and would continue to do so in the future.

But just what did we mean by that last sentence, what is the scope? Someone might take it to mean that sooner or later, we would have artificial general intelligence (AGI). But, as far as I can tell, I don't think that's what we meant. My guess is that Hays wrote that paragraph and that we didn't discuss it much. I'd guess he meant that one by one, in time, computers would be able to do this or that specialized task.

That takes us to 1990. I certainly haven't stopped thinking about these matters, but I think it's time to stop and reflect a bit.

What happened to Prospero?

As I have already suggested, the idea of Prospero was birthed in the era of symbolic computing, and that era began collapsing in the 1980s. I do not recall just when I noticed that (something like) Prospero hadn't been and wasn't going to be built. I may not have done much thinking about that until the second decade of this century, when I had begun paying attention to, thinking about, and blogging about the so-called digital humanities.

What's important is simply that at one point in my career the world looked a certain way and I had a moderately specific expectation about what would happen in two decades. I was wrong. The world proved to be vaster than I had imagined it to be. There remains more than enough to think about. This relatively brief post summarizes my most recent thoughts and has links to a variety of posts and working papers:

Geoffrey Hinton says deep learning will do everything. I'm not sure what he means, but I offer some pointers. Version 2, New Savanna, May 31, 2021, https://new-savanna.blogspot.com/2021/05/geoffrey-hinton-says-deep-learning-will_31.html.

I remain skeptical about the possibility of constructing a human-level intelligence in silico. The distinction between living systems and inanimate systems seems to me to be paramount, though it is by no means clear to me just how to characterize that distinction in detail. For reasons I cannot express, I do not think we will see an artificial intelligence with the range of capacities of our own natural intelligence.

I am skeptical as well about the possibility of predicting future intellectual developments in this general arena. It is too vast, and as yet we know so little.