Here’s a more or less arbitrarily selected tweet about a Google engineer who believes that a chatbot, LaMDA, derived from a large language model (LLM), is sentient:

“LaMDA is sentient.”

— Antonio Regalado (@antonioregalado) June 11, 2022

Crazy story about Google engineer and occultist who gets suspended after sending a mass email claiming that an experimental AI chatbot called LaMDA is conscious. 1/ https://t.co/l90Tn4seeS

That story has been all over my local Twitterverse for the last two days. A somewhat different kind of tweet inspired by that incident gives rise to a discussion about the relationship between animism and panpsychism (though I don’t believe that term is used), belief systems holding that sentience pervades the universe, and the belief of this particular Google engineer, though he is by no means the only one who believes AIs are now exhibiting sentience.

It's interesting to live at a time when, on the one hand, a Google researcher is suspended and ridiculed for saying that an AI chatbot system has become sentient* while, on the other, scholars promote a "pluriverse" where "mountains, lakes or rivers ... are sentient beings."**

— Jorge Camacho (@j_camachor) June 13, 2022

Finally, a tweet purporting to show how many parameters an artificial neural network must have in order to exhibit signs of consciousness. I recognized the tweet as satire, which it is, but if you look through the tweet stream you’ll see that some took it seriously. Why?

We're thrilled to share a plot from our upcoming paper "Scaling Laws for Consciousness of Artificial Neural Networks".

— Ethan Caballero (@ethanCaballero) June 13, 2022

We find that Artificial Neural Networks with greater than 10^15 parameters are more conscious than humans are: pic.twitter.com/pOxWX2g0EL

* * * * *

What’s going on here? Is the fabric of rational belief becoming unravelled? Are the End Times approaching?

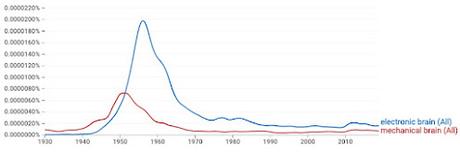

Quickly and informally, I believe that the bulk of our ‘common sense’ vocabulary was shrink-wrapped to fit the world that existed in, say, the late 19th century. That world included mechanical calculators and tabulating machines (I believe that’s how IBM started, tabulating census data). As long as digital computers mostly did those two things they weren’t particularly problematic. Sure, there was 1950s and 60s talk of ‘thinking machines’, but that died out, and Stanley Kubrick gave us HAL, but that was clearly science FICTION. No problem.

Now it’s clear that things have changed and will be changing more. Computers are doing things that cannot be readily accommodated to that late-19th century conceptual system. There’s no longer a place for them in the ontology (to use the term as it is now used in computer science and AI). We can’t just add new categories off to the side somewhere or near the top. Why not? Because computers are, in some obvious sense, clearly inanimate things, like rocks and water and so forth. Those are at the bottom of the ontology. Yet they’re now speaking fluently, which is something only humans did, and we’re at the top of the ontology. So now we’ve got to revise the whole ontology, top to bottom.

What about this Google engineer who sensed that LaMDA was sentient? He sensed that something new and different was going on in LaMDA. I think he’s right about that. So how do you express that? He chose concepts from the existing repertoire, and sentience seemed the best one to use. We don’t have a common term for what he experienced.

In my own thinking I experience a similar problem. It’s clear to me that GPT-3 is NOT thinking in any common sense of the term. But it’s not clear that calculation is a particularly good term either, though in a sense that IS what is going on, for it runs on computer hardware constructed for the purpose of calculation in the most general sense of the term. But that general sense does not align well with the commonsense notion of calculation, which is closely allied with arithmetic. As commonly understood, arithmetic is very different from string processing, such as alphabetizing a list. The fact that the same electronic device can do either with ease is not easily encompassed within common-sense terms, which mostly just elide the difficulties.

Brian Cantwell Smith has proposed “reckoning” as the term for what AI engines do. But he sees it as being in opposition to “judgement,” which is fine, but I’m not sure it’s good for my purposes. Even if I could come up with a term, it would be a term specialized for use in intellectual discourse. How would it play in general public-facing discourse?

It is easy for Google to lay the engineer off without pay. Even if he goes away, the problem he was struggling with won’t go away. It is only going to get worse. And there is no quick fix. The fabric of common sense must be reworked, top to bottom, inside and out, and specialized conceptual repertoires as well. This is a project for generations.

The world IS changing.

* * * * *

As a useful counterpoint, see my essay, Dr. Tezuka’s Ontology Laboratory and the Discovery of Japan. It’s about Tezuka’s science-fiction trilogy, Lost World, Metropolis, and Next World, in which Tezuka is clearly rethinking the ontological structure of the world from top to bottom. Categories are confused and boundaries are crossed. But the provocation isn’t the rise of computers, it’s the end of World War II, which the Japanese lost.