Intention, in the philosophical sense, is a bit different from the common use of the term. It includes the common usage, but is more subtle. It is often glossed as “aboutness.” When you merely see something, or smell it, hear it, touch it, even think about it, and so forth, you have a mental state that is about that thing, whatever it is. You have an intentional stance toward it. You intend it.

Intention is at the crux of Searle’s famous Chinese Room argument about artificial intelligence. He argues that, no matter how subtle and sophisticated it may be, an AI system is unable to comprehend meaning. It is only syntactic. It may be able to pass whatever Turing test you throw at it, but it's still just a (philosophical) zombie. Why? Because it lacks intentionality.

When I first read Searle’s argument in 1980 I thought that it was something of a cop-out, and I may still think that. “Intention” was just a filler for a whole bunch of stuff we still don’t understand. Perhaps so.

But I don’t really want to argue that here. I want to talk about intention in the philosophical sense. This is something we need to develop step by step.

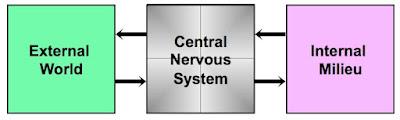

Let’s start with this simple diagram:

It represents the fact that the central nervous system (CNS) is coupled to two worlds, each external to it. To the left we have the external world. The CNS is aware of that world through various senses (vision, hearing, smell, touch, taste, and perhaps others) and acts in that world through the motor system. But the CNS is also coupled to the internal milieu, with which it shares a physical body. The CNS is aware of that milieu through chemical sensors indicating the contents of the bloodstream and of the lungs, and through sensors in the joints and muscles. And it acts in that milieu through control of the endocrine system and the smooth muscles. Roughly speaking, the CNS guides the organism’s actions in the external world so as to preserve the integrity of the internal milieu. When that integrity is gone, the organism is dead.

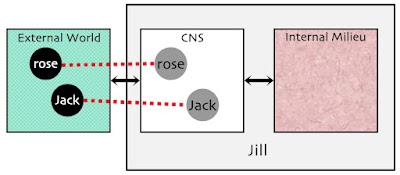

Now consider this diagram, which I call an intention diagram:

It is obviously an elaboration of the previous diagram. At the middle and right we have some person named “Jill.” Her internal milieu is to the right while I’ve represented her central nervous system (CNS) in the middle. At the left we have the external world.

I’ve represented two things in the external world, a rose and a person named “Jack,” though it could just as easily be a dog or a crow, whatever. In Jill’s CNS there is some representation of that rose, which I’ve drawn as a gray circle. Another gray circle represents Jack. We need not worry about the nature of either of these representations; each is no doubt complex, with that for Jack being more complex.

Let us imagine that Jill sees the rose, and sees Jack. Or perhaps she only smells the rose and is listening to Jack’s voice while looking elsewhere. Maybe she senses neither, but is only thinking of them. Whatever the case may be, she has an intentional attitude toward them; she is intending them. In the philosophical sense.

Those dotted red lines indicate Jill’s intentionality, her intentional attitudes. They do not correspond to any physical signal moving from the rose to Jill or from Jack to Jill. Such signals may exist, but they would have to be represented in some other way. Those intentional lines reflect (aspects of) Jill’s intentional relationship(s) to the world around her. They depend on the whole system, not on this or that discrete part. They represent aboutness.