New working paper. Title above, links, abstract, and introduction below.

Academia.edu: https://www.academia.edu/108129357/ChatGPT_tells_20_versions_of_its_prototypical_story_with_a_short_note_on_method

SSRN: https://ssrn.com/abstract=4602347

ResearchGate: https://www.researchgate.net/publication/374723644_ChatGPT_tells_20_versions_of_its_prototypical_story_with_a_short_note_on_method

Abstract: ChatGPT responds to the prompt, "story," with a simple story. Ten stories elicited by that prompt in a single session have a greater variety of protagonists than ten stories each elicited in its own session. Prototype: 19 of the 20 stories were about protagonists who venture into the world and learn things that benefit their community. ChatGPT's response to that simple prompt gives us a clue about the structure of the underlying model.

Introduction: ChatGPT’s prototypical story is about exploration

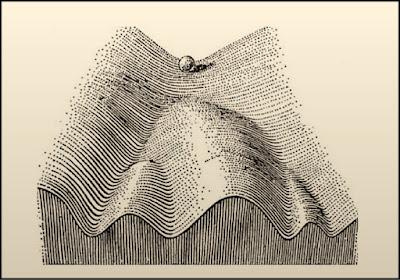

I have been systematically investigating ChatGPT’s storytelling behavior since early January of this year (2023).[1] I issued a working paper early in March where I began teasing out some regularities in this behavior.[2] One might say I was looking for ChatGPT’s story grammar, but that terminology has the wrong connotations, since it originates in work on symbolic computing going back to the 1970s. I’m pretty sure that’s not how ChatGPT generates stories. Just what it is doing is not so clear. At the moment I’m inclined to think that it’s more like an epigenetic landscape.

That discussion, however, is well beyond the scope of this introduction.

Twenty stories

This introduction is about the 20 stories I had ChatGPT generate this morning (10.13.23). Each story was elicited by a one-word prompt: story. I generated the first ten stories in a single session and each of the last ten in its own session. Why did I make that distinction? Knowing that ChatGPT takes the entire session to date into account when it responds to a prompt, I assumed that generating many stories in a single session would have some effect on the stories. I conjectured that there would be greater variety among the ten stories generated in the single session than among the ten stories each generated in its own session.

A quick look at the protagonists in the 20 stories suggests that this is true. Two of the first ten stories have a protagonist named “Elara,” while five of the second set of ten do. That quick and dirty assessment, however, needs to be confirmed by further analysis.

I note further that all but one of the stories are set in a fairy-tale world, with magic and supernatural phenomena, and are about a protagonist who goes out and explores the world, in one way or another, and then returns home to enrich that land. The exception is the sixth story in the first set, “Lily ventures into the Urban Garden” (ChatGPT supplied the title). In this story Lily meets an artist named Jasper; they make chalk drawings all over the place and teach others to do so. There are no supernatural or magical phenomena in the story.

Given that the one-word prompt, “story,” places no restrictions on ChatGPT, why does ChatGPT return to that one kind of story? In the absence of any further information, I have to assume that this kind of story is an abstraction over all the stories that ChatGPT encountered during training. That, in effect, is ChatGPT’s prototypical story.

Think about that for a moment. The large language model (LLM) at the core of ChatGPT was created by a GPT engine given the task of predicting the next word in an input string. Those strings come from millions of texts in a corpus that embodies a large percentage of the texts publicly available on the World Wide Web. The prototypical story is the model’s response to that single word, story, and thus somehow embodies predictive information about, well, stories.

The fact that ChatGPT generates that particular generic story in response to the prompt, story, is a clue about the structure of the underlying model.

We should note, however, that ChatGPT is the result of modifying and fine-tuning the underlying LLM to make it more suitable for conversational interaction. I assume that these modifications have had some effect on the kinds of stories it tells, not to mention the kinds it is allowed to tell, for some topics have been forbidden to it. I also note that, obviously, ChatGPT is not confined to telling that one kind of story, but you need to give it further specifications to elicit other kinds of stories.

It should be abundantly clear, however, that whatever ChatGPT is doing, it is not a “stochastic parrot,” as one formulation would have it, nor is it “autocomplete on steroids,” in another formulation. ChatGPT’s language model has a great deal of structure and it is that structure that allows it to produce coherent prose. I would further argue that we can learn a great deal about that structure by systematically investigating the kinds of texts it generates. That’s what I have been doing with stories.

Mechanistic interpretation and beyond

We have just barely begun the task of figuring out how the simple task, predict the next word, eventuates in such a complex and sophisticated model. Understanding how these models work is difficult, and the means and methods are not at all obvious. Students of mechanistic interpretability, as it is called, like to “pop the hood” so they can look at the engine, poke and prod it, and see how it works. But that isn’t sufficient for figuring out what’s going on.

You can’t understand what the parts of a mechanism are doing unless you know what the mechanism is trying to do. Early in How the Mind Works (p. 22), Steven Pinker asks us to imagine that we’re in an antique shop:

...an antique store, we may find a contraption that is inscrutable until we figure out what it was designed to do. When we realize that it is an olive-pitter, we suddenly understand that the metal ring is designed to hold the olive, and the lever lowers an X-shaped blade through one end, pushing the pit out through the other end. The shapes and arrangements of the springs, hinges, blades, levers, and rings all make sense in a satisfying rush of insight. We even understand why canned olives have an X-shaped incision at one end.

To belabor the example and put it to use as an analogy for mechanistic interpretability, someone with a good feel for mechanisms can tell you a great deal about how the parts of this strange device articulate, the range of motion of each part, the stresses operating on each joint, the amount of force required to move the parts, and so forth. But even when you put all that together, it will not tell you what the device was designed to do.

The same is true, I believe, for the mechanistic investigation of large language models (and other types of neural net models). These models are quite different from mechanical devices such as olive-pitters or internal combustion engines. Their parts are informatic, bits and bytes, rather than physical objects, and there are many more of them, “billions and billions,” as Carl Sagan used to say. Moreover, olive-pitters and internal combustion engines were designed by human engineers, while LLMs were not designed by anyone. Rather, they evolved through a process whereby a software engine, designed by engineers and scientists, “consumed” a large corpus of texts. The very strangeness of the resulting model, its “black box” nature, makes it imperative that we investigate the structure of the texts it produces.

Of course, scholars of various kinds have been investigating language and texts for decades, if not centuries, and much remains mysterious. However, we can work with LLMs in ways we cannot work with humans and their texts. The kind of systematic generation of texts that I have been doing with ChatGPT is difficult if not impossible to do with human authors. I have devised tasks (some simple, some not) that put “pressure” on ChatGPT in a way that exposes the “joints” in its texts, and those joints give us clues about the underlying mechanisms (“carve Nature at its joints,” Plato, Phaedrus). Moreover, while we can readily “pop the hood” on LLMs, we cannot do that with humans.

There is no reason to think that we cannot progress further in understanding LLMs by using and expanding upon the techniques I’ve used for investigating ChatGPT and combining them with work on mechanistic interpretability. The two lines of investigation are necessary and complementary.

* * * * *

Note about the stories: I generated all of them on the morning of October 13, 2023, in a session that began at 7:15 AM. The September 25 version of ChatGPT was running.

References

[1] Click on the link for “ChatGPT stories” at my blog, New Savanna: https://new-savanna.blogspot.com/search/label/ChatGPT%20stories. My first post about stories is “ChatGPT, stories, and ring-composition,” New Savanna, Jan. 6, 2023, https://new-savanna.blogspot.com/2023/01/chatgpt-stories-and-ring-composition.html.

[2] “ChatGPT tells stories, and a note about reverse engineering: A Working Paper, Version 3,” August 27, 2023, https://www.academia.edu/97862447/ChatGPT_tells_stories_and_a_note_about_reverse_engineering_A_Working_Paper_Version_3.

* * * * *

The working paper contains the complete texts of the 20 stories that ChatGPT generated.