First of all I present the insight that sent me down this path, a comment by Graham Neubig in an online conversation that I was not a part of. Then I set that insight in the context of an insight by Sydney Lamb (meaning resides in relations), a first-generation researcher in machine translation and computational linguistics. I then take a grounding case from Julian Michael, that of color, and suggest that it can be extended by the work of Peter Gärdenfors on conceptual spaces.

A clue: an isomorphic transform into meaning space

At the 58th Annual Meeting of the Association for Computational Linguistics, Emily M. Bender and Alexander Koller delivered a paper, Climbing towards NLU: On Meaning, Form, and Understanding in the Age of Data [1], where NLU means natural language understanding. The issue is pretty much the one I laid out in my previous post in the sections “No words, only signifiers” and “Martin Kay, ‘an ignorance model’” [2]. A lively discussion ensued online, which Julian Michael has summarized and commented on in a recent blog post [3].

In that post Michael quotes a remark by Graham Neubig:

One thing from the twitter thread that it doesn’t seem made it into the paper... is the idea of how pre-training on form might learn something like an “isomorphic transform” onto meaning space. In other words, it will make it much easier to ground form to meaning with a minimal amount of grounding. There are also concrete ways to measure this, e.g. through work by Lena Voita or Dani Yogatama... This actually seems like an important point to me, and saying “training only on form cannot surface meaning,” while true, might be a little bit too harsh— something like “training on form makes it easier to surface meaning, but at least a little bit of grounding is necessary to do so” may be a bit more fair.

That’s my point of departure in this post, that notion of “an ‘isomorphic transform’ onto meaning space.” I am going to sketch a framework in which we can begin unpacking that idea. But it may take a while to get there.

Meaning is in relations

I want to develop an idea I have from Sydney Lamb, that meaning resides in relations. The idea is grounded in the “old school” world of symbolic computation, where language is conceived as a relational network of items. The meaning of any item in the network is a function of its position in the network.

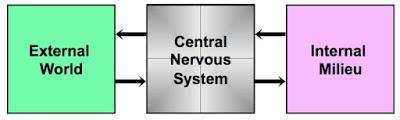

Let’s start with this simple diagram:

It represents the fact that the central nervous system (CNS) is coupled to two worlds, each external to it. To the left we have the external world. The CNS is aware of that world through various senses (vision, hearing, smell, touch, taste, and perhaps others) and acts in that world through the motor system. But the CNS is also coupled to the internal milieu, with which it shares a physical body. The CNS is aware of that milieu through chemical sensors indicating the contents of the bloodstream and the lungs, and through sensors in the joints and muscles. And it acts on that milieu through control of the endocrine system and the smooth muscles. Roughly speaking, the CNS guides the organism’s actions in the external world so as to preserve the integrity of the internal milieu. When that integrity is gone, the organism is dead.

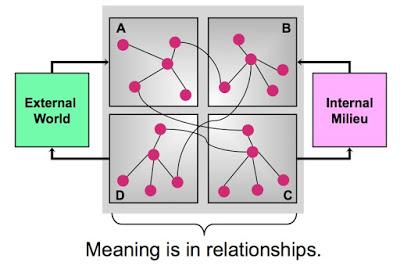

Now consider this more differentiated presentation of the same facts:

I have divided the CNS into four sections: A) senses the external world, B) senses the internal milieu, C) guides action in the internal milieu, and D) guides action in the external world. I rather doubt that even a very simple animal, such as C. elegans, with its 302 neurons, is that simple. But I trust my point will survive that oversimplification.

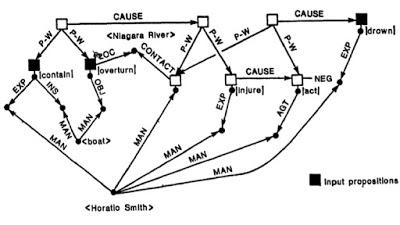

Lamb’s point is that the “meaning” or “significance” of any of those nodes – let’s not worry at the moment whether they’re physical neurons or more abstract entities – is a function of its position in the entire network, with its inputs from and outputs to the external world and the inner milieu [4]. To appreciate the full force of Lamb’s point we need to recall the diagrams typical of old school symbolic computing, such as this diagram from Brian Philips we used in the previous post:

All of the nodes and edges have labels. Lamb’s point is that those labels exist for our convenience; they aren’t actually a part of the system itself. If we think of that network as a fragment of a human cognitive system – and I’m pretty sure that’s how Philips thought about it, even if he could not justify it in detail (no one could, not then, not now) – then it is ultimately connected to both the external world and the inner milieu. All those labels fall away; they serve no purpose. Alas, Philips was not building a sophisticated robot, and so those labels are necessary fictions.
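
Lamb’s claim that the labels are dispensable can be illustrated with a toy graph. The sketch below is a hypothetical illustration, not Lamb’s own notation: it wires a few internal nodes between the four peripheral sections A through D described earlier, treats connections as undirected for simplicity, and describes each internal node only by its hop distances to those four sections. The node names `n1` to `n4` and the edge list are invented for the example. Renaming the internal nodes leaves every description unchanged, which is the sense in which the labels exist only for our convenience.

```python
from collections import deque

# A toy, hypothetical network. Only the four peripheral sections are fixed:
# A senses the external world, B senses the internal milieu,
# C acts on the internal milieu, D acts on the external world.
# Internal node names (n1..n4) are our labels, not part of the system.
EDGES = [
    ("A", "n1"), ("n1", "n2"), ("n2", "D"),    # external world in, external action out
    ("B", "n3"), ("n3", "C"),                  # internal milieu sensed and regulated
    ("n3", "n2"), ("n1", "n4"), ("n4", "n3"),  # cross-connections
]
PERIPHERY = ("A", "B", "C", "D")


def adjacency(edges):
    """Build an undirected adjacency map (direction ignored for simplicity)."""
    graph = {}
    for u, v in edges:
        graph.setdefault(u, set()).add(v)
        graph.setdefault(v, set()).add(u)
    return graph


def hop_distances(graph, source):
    """Breadth-first search: number of hops from source to each reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for nxt in graph[node]:
            if nxt not in dist:
                dist[nxt] = dist[node] + 1
                queue.append(nxt)
    return dist


def positional_signatures(edges, periphery=PERIPHERY):
    """Describe each internal node solely by its distances to A, B, C, D."""
    graph = adjacency(edges)
    tables = [hop_distances(graph, p) for p in periphery]
    return {node: tuple(t.get(node) for t in tables)
            for node in graph if node not in periphery}


before = positional_signatures(EDGES)
# Swap our convenient internal names for arbitrary ones.
RENAME = {"n1": "x", "n2": "y", "n3": "z", "n4": "w"}
renamed_edges = [(RENAME.get(u, u), RENAME.get(v, v)) for u, v in EDGES]
after = positional_signatures(renamed_edges)
for old, new in RENAME.items():
    print(old, before[old], "->", new, after[new])  # signatures match
```

In this particular toy graph every internal node ends up with a distinct signature. In a network with symmetric positions two nodes could share one, and on this way of thinking they would then be indistinguishable in significance.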

But we’re interested in the full real case, a human being making their way in the world. In that case let us assume, for one thing, that the necessary diagram is WAY more complex, and that the nodes and edges do not represent individual neurons. Rather, they represent various entities that are implemented in neurons: sensations, thoughts, perceptions, and so forth. Just how such things are realized in neural structures is, of course, a matter of some importance and is being pursued by hundreds of thousands of investigators around the world. But we need not worry about that now. We’re about to fry some rather more abstract fish (if you will).

Some of those nodes will represent signifiers, to use the Saussurian terminology I used in my previous post, and some will represent signifieds. What’s the difference between a signifier and a signified? Their position in the network as a whole. That’s all. No more, no less. Now, it seems to me, we can begin thinking about Neubig’s “isomorphic transform” onto meaning space.

Let us notice, first of all, that language exists as strings of signifiers in the external world. In the case that interests us, those are strings of written characters that have been encoded into computer-readable form. Let us assume that the signifieds – which bear a major portion of meaning, no? – exist in some high-dimensional network in mental space. This is, of course, an abstract space rather than the physical space of neurons, which is necessarily three-dimensional. However many dimensions this mental space has, each signified exists at some point in that space and, as such, we can specify that point by a vector containing its value along each dimension.

What happens when one writes? Well, one produces a string of signifiers. The distances between signifiers on this string, and their ordering relative to one another, are a function of the relative distances and orientations of their associated signifieds in mental space. That’s where to look for Neubig’s isomorphic transform into meaning space. What GPT-3 and a host of other NLP engines do is examine the distances and ordering of signifiers in the string and compute over them so as to reverse engineer the distances and orientations of the associated signifieds in high-dimensional mental space.
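
As a rough illustration of how geometry can be recovered from nothing but positions in strings, here is a deliberately crude count-based model. It is not how GPT-3 works (GPT-3 is a transformer trained to predict the next token); it simply counts which words occur within a small window of each word, treats those counts as a vector, and compares vectors by cosine similarity. The toy corpus and the window size of 2 are assumptions chosen for illustration.

```python
import math
from collections import Counter, defaultdict


def cooccurrence_vectors(sentences, window=2):
    """For each word, count the words that occur within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            start, stop = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(start, stop):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * v[word] for word, count in u.items())
    norm_u = math.sqrt(sum(c * c for c in u.values()))
    norm_v = math.sqrt(sum(c * c for c in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


# Hypothetical toy corpus: the program sees only strings, never meanings.
CORPUS = [
    "the cat chased the mouse",
    "the dog chased the cat",
    "the cat drank the milk",
    "the dog drank the water",
    "she read the book",
    "he read the letter",
]

vecs = cooccurrence_vectors(CORPUS)
print("cat ~ dog: ", round(cosine(vecs["cat"], vecs["dog"]), 2))
print("cat ~ book:", round(cosine(vecs["cat"], vecs["book"]), 2))
```

On this corpus “cat” comes out much closer to “dog” than to “book”, though nothing in the program knows what any of these words mean. The window ignores word order, which real language models do exploit; the point is only that distances on the string carry recoverable structure.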

Is the result perfect? Of course not – but then how do we really know? It’s not as though we’ve got a well-accepted model of human conceptual space just lying around on a shelf somewhere. GPT-3’s language model is perhaps as good as we’ve got at the moment, and we can’t even open the hood and examine it. We know its effectiveness by examining how it performs. And it performs very well.

Metaphysics: The dimensionality of mind and world

Let’s bring this down to earth. Going back to the top where we began, Bender and Koller proposed a thought experiment involving a superintelligent octopus listening in on a conversation between two people. Julian Michael [3] proposes the following:

As a concrete example, consider an extension to the octopus test concerning color—a grounded concept if there ever was one. Suppose our octopus O is still underwater, and he:

- Understands where all color words lie on a spectrum from light to dark... But he doesn’t know what light or dark mean.

- Understands where all color words lie on a spectrum from warm to cool... But he doesn’t understand what warm or cool mean.

- Understands where all color words lie on a spectrum of saturated to washed out... But he doesn’t understand what saturated or washed out mean.

Et cetera, for however many scalar concepts you think are necessary to span color space with sufficient fidelity. A while after interposing on A and B, O gets fed up with his benthic, meaningless existence and decides to meet A face-to-face. He follows the cable to the surface, meets A, and asks her to demonstrate what it means for a color to be light, warm, saturated, etc., and similarly for their opposites. After grounding these words, it stands to reason that O can immediately ground all color terms—a much larger subset of his lexicon. He can now demonstrate full, meaningful use of words like green and lavender, even if he never saw them used in a grounded context. This raises the question: When, or from where, did O learn the meaning of the word “lavender”?

It’s hard for me to accept any answer other than “partly underwater, and partly on land.” Bender acknowledges this issue in the chat as well:

The thing about language is that it is not unstructured or random, there is a lot of information there in the patterns. So as soon as you can get a toe hold somewhere, then you can (in principle, though I don’t want to say it’s easy or that such systems exist), combine the toe hold + the structure to get a long ways.

The thing about color is that it is much investigated and well (if not completely) understood, from genetics up through cultural variation in color terms. And color is understood in terms of three dimensions: hue (warm to cool), saturation, and brightness (light to dark).
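
Michael’s point, that a small toe hold plus a lot of structure goes a long way, can be put as a toy calculation. In the hypothetical sketch below, the octopus’s underwater knowledge is a relative position (0.0 at one pole, 1.0 at the other) for each color term on each dimension; grounding on land supplies perceptual values for the poles only. Linear interpolation then grounds every term, including ones never seen in a grounded context. All numbers, dimension names, and the interpolation itself are illustrative assumptions, not measurements of color perception.

```python
# Hypothetical underwater knowledge, learned from text alone: where each term
# sits between the two poles of each dimension (0.0 = first pole, 1.0 = second).
UNDERWATER = {
    "lavender": {"light-dark": 0.2, "warm-cool": 0.7, "saturated-washed": 0.6},
    "green":    {"light-dark": 0.5, "warm-cool": 0.8, "saturated-washed": 0.3},
    "maroon":   {"light-dark": 0.8, "warm-cool": 0.1, "saturated-washed": 0.4},
}

# Hypothetical grounding from A's demonstration on land: a perceptual value
# for each pole (first pole, second pole) on an arbitrary 0-100 scale.
POLES = {
    "light-dark": (90.0, 10.0),
    "warm-cool": (80.0, 20.0),
    "saturated-washed": (95.0, 5.0),
}


def ground(relative, poles):
    """Turn relative positions into perceptual coordinates by linear
    interpolation between the grounded poles of each dimension."""
    coords = {}
    for dim, frac in relative.items():
        first, second = poles[dim]
        coords[dim] = first + frac * (second - first)
    return coords


for term, relative in UNDERWATER.items():
    print(term, {dim: round(v, 1) for dim, v in ground(relative, POLES).items()})
```

Six grounded pole words are enough to place every term the octopus knows. A linear scale is the crudest possible choice, and real color dimensions are not uniform in this way (hue, in particular, is circular), so treat this as a picture of the logic rather than of perception.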

And that brings us to the work of Peter Gärdenfors, who has developed a very sophisticated geometry of conceptual spaces [5, 6, 7]. And he means real geometry, not mere metaphor. He starts with color, but then, over the course of two books, extends the idea of conceptual spaces, and their associated dimensions, to a full and satisfying range of examples.

This is not the time and place to even attempt a précis of his theory, though I recommend his recent article [7], which contains some remarks about computational implementation, as a starting point. Perhaps the crucial point is that he regards his account of conceptual spaces as being different from both classic symbolic accounts of mind (such as that embodied by the Brian Philips example) and artificial neural networks, such as GPT-3. Though I am perhaps interpreting him a bit, he sees conceptual spaces as a tertium quid between the two. In particular, to the extent that Gärdenfors is more or less correct, we have a coherent and explicit way of understanding the success of neural network models such as GPT-3.
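
One piece of that geometry is easy to show in code. In Conceptual Spaces [5], Gärdenfors proposes that natural properties correspond to convex regions of a space, and discusses how assigning each point to its nearest prototype (a Voronoi tessellation) yields such regions. The sketch below is a minimal illustration under loud assumptions: the prototype coordinates are invented, distance is plain Euclidean, and hue is flattened into a linear warm-to-cool axis, following the description of color above, even though real hue is circular.

```python
import itertools
import math
import random

# Hypothetical prototypes in a 3-D color-like space:
# (lightness, warmth, saturation), each scaled to [0, 1].
PROTOTYPES = {
    "red":    (0.5, 0.9, 0.9),
    "yellow": (0.9, 0.8, 0.9),
    "green":  (0.5, 0.3, 0.8),
    "blue":   (0.4, 0.1, 0.8),
    "gray":   (0.5, 0.5, 0.0),
}


def categorize(point, prototypes=PROTOTYPES):
    """Nearest-prototype categorization; the induced partition is a Voronoi tessellation."""
    return min(prototypes, key=lambda name: math.dist(point, prototypes[name]))


def midpoint(p, q):
    return tuple((a + b) / 2 for a, b in zip(p, q))


def convexity_spot_check(n_points=150, seed=0):
    """Whenever two sampled points share a category, their midpoint should too."""
    rng = random.Random(seed)
    points = [tuple(rng.random() for _ in range(3)) for _ in range(n_points)]
    labels = [categorize(p) for p in points]
    for (p, lp), (q, lq) in itertools.combinations(zip(points, labels), 2):
        if lp == lq and categorize(midpoint(p, q)) != lp:
            return False
    return True


print(categorize((0.45, 0.15, 0.7)))  # -> blue
print(convexity_spot_check())
```

The convexity check is only a spot check on random pairs, not a proof. For nearest-prototype cells under Euclidean distance, convexity holds in general, because each cell is an intersection of half-spaces.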

It seems to me that what Gärdenfors is looking at is what we might call, for lack of a better term, the metaphysical structure of the world.

Metaphysical structure of the world? WTF!!??

I don’t mean the physical structure of the world, which is a subject for the various physical and, I suppose, biological sciences. I mean metaphysical. Just what that is, I’m not sure, so I’m making this up. The metaphysical structure of the world is that structure that makes the world intelligible to us; it exists in the relationship between us, Homo sapiens sapiens, and the world. What is the world that it is perceptible, that we can move around in it in a coherent fashion?

Imagine, for example, that the world consisted entirely of elliptically shaped objects. Some are perfectly circular, others only nearly circular. Still others seem almost flattened into lines. And we have everything in between. In this world things beneficial to us are a random selection from the full population of possible elliptical beings, and the same with things dangerous to us. Thus there are no simple and obvious perceptual cues that separate good things from bad things. A very good elliptical being may differ from a very bad being in a very minor way, difficult to detect. Such a world would be all but impossible to live in.
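
The contrast between such a world and ours can be sketched as a small simulation. Everything here is a hypothetical stand-in: each elliptical being is reduced to one perceptual feature (eccentricity, from 0 for a circle toward 1 for a line), and the organism is a 1-nearest-neighbour learner that judges a newcomer by the most similar being it has already met. When good and bad track the feature, similarity is a reliable guide; when they are assigned at random, the learner does no better than a coin toss.

```python
import random


def one_nn_accuracy(label_fn, n_train=200, n_test=200, seed=0):
    """Fraction of fresh beings a 1-nearest-neighbour learner labels correctly
    after meeting n_train beings whose labels it was told."""
    rng = random.Random(seed)
    experience = []
    for _ in range(n_train):
        e = rng.random()  # eccentricity in [0, 1)
        experience.append((e, label_fn(e, rng)))
    correct = 0
    for _ in range(n_test):
        e = rng.random()
        truth = label_fn(e, rng)
        # Judge the newcomer by the most similar being met so far.
        _, guess = min(experience, key=lambda item: abs(item[0] - e))
        correct += int(guess == truth)
    return correct / n_test


def intelligible(eccentricity, _rng):
    # Hypothetical world: rounder beings are beneficial, flatter ones dangerous.
    return "good" if eccentricity < 0.5 else "bad"


def unintelligible(_eccentricity, rng):
    # Hypothetical world: good or bad is a coin toss, unrelated to shape.
    return rng.choice(["good", "bad"])


print("intelligible world:  ", one_nn_accuracy(intelligible))
print("unintelligible world:", one_nn_accuracy(unintelligible))
```

In expectation, more experience helps in the first world and not at all in the second, which is one way of putting the claim that such a world would be all but impossible to live in.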

That is not the world we have. Yes, there are cases where small differences are critical. But they don’t dominate. Our world is intelligible. And so it is possible to construct a conceptual system capable of navigating in the external world so as to preserve and even enhance the integrity of the internal milieu. That, I believe, is what Gärdenfors is looking at when he talks of dimensionality and conceptual spaces.

Beyond blind success

We now have a way of thinking about just why language models such as GPT-3 can be so successful even though, as I argued in my previous post, they have no direct access to the realm of signifieds, of meaning. So what?

Where next with GPT-3 and other such models? At the moment such models represent the success of blind groping. As far as I can tell the creators of such models have little or no commitment to some theory of how language and mind work. They just know how to create engines that produce remarkable results. As for those remarkable results, they don’t know how their engines achieve them. They know only that they do.

I do not see this as a long-term strategy for success. I have suggested a way of thinking about how these models can succeed to a remarkable degree. I rather suspect that such a framework would be useful in looking under the hood to see just what these models are doing. When I talk of GPT-3 as a crossing of the Rubicon, that is what I mean. Given a way of thinking about how such models can work, we are at the threshold of remarkable developments.

But if the AI community refuses to attend to this framework, or similar ones, then it will, sooner or later, crash and burn, as machine translation did in the mid-1960s, and as symbolic computation, more generally, did in the mid-1980s (Waterloo).

Yes, I am attached to the ideas I’ve just presented. I know that they are highly speculative. I also know that speculation is the ONLY way forward. What I have done, others can do as well, if not better.

We have no choice but to move forward.

Unless, of course, the investors chicken out.

* * * * *

Posts in this series are gathered under this link: Rubicon-Waterloo.

Appendix: The road ahead

A bit revised from a comment I made at Marginal Revolution; this post elaborates on material in yellow:

Yes, GPT-3 [may] be a game changer. But to get there from here we need to rethink a lot of things. And where that's going (that is, where I think it best should go) is more than I can do in a comment.

Right now, we're doing it wrong, headed in the wrong direction. AGI, a really good one, isn't going to be what we're imagining it to be, e.g. the Star Trek computer.

Think of AI as platform, not feature (Andreessen). Obvious implication: the basic computer will be an AI-as-platform. Every human will get their own as a very young child. They'll grow with it; it'll grow with them. The child will care for it as for a pet. Hence we have ethical obligations to them. As the child grows, so does the pet – the pet will likely have to migrate to other physical platforms from time to time.

Machine learning was the key breakthrough. Rodney Brooks' Genghis, with its subsumption architecture, was a key development as well, for it was directed at robots moving about in the world. FWIW Brooks has teamed up with Gary Marcus and they think we need to add some old school symbolic computing into the mix. I think they're right.

Machines, however, have a hard time learning the natural world as humans do. We're born primed to deal with that world with millions of years of evolutionary history behind us. Machines, alas, are a blank slate.

The native environment for computers is, of course, the computational environment. That's where to apply machine learning. Note that writing code is one of GPT-3's skills.

So, the AGI of the future, let's call it GPT-42, will be looking in two directions, toward the world of computers and toward the human world. It will be learning in both, but in different styles and to different ends. In its interaction with other artificial computational entities GPT-42 is in its native milieu. In its interaction with us, well, we'll necessarily be in the driver's seat.

Where are we with respect to the hockey stick growth curve? For the last three quarters of a century, since the end of WWII, we've been moving horizontally, along a plateau, developing tech. GPT-3 is one signal that we've reached the toe of the next curve. But to move up the curve, as I've said, we have to rethink the whole shebang.

We're IN the Singularity. Here be dragons.

[Superintelligent computers emerging out of the FOOM is bullshit.]

ADDENDUM: A friend of mine, David Porush, has reminded me that Neal Stephenson has written of such a tutor in The Diamond Age: Or, A Young Lady's Illustrated Primer (1995). I then remembered that I have played the role of such a tutor in real life, The Freedoniad: A Tale of Epic Adventure in which Two BFFs Travel the Universe and End up in Dunkirk, New York.

* * * * *

References

[1] Emily M. Bender and Alexander Koller, Climbing towards NLU: On Meaning, Form, and Understanding in the Age of Data, Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, pages 5185–5198, July 5–10, 2020.

[2] 1. No meaning, no how: GPT-3 as Rubicon and Waterloo, a personal view, New Savanna, blog post, July 27, 2020, https://new-savanna.blogspot.com/2020/07/1-no-meaning-no-how-gpt-3-as-rubicon.html.

[3] Julian Michael, To Dissect an Octopus: Making Sense of the Form/Meaning Debate, blog post, July 23, 2020, https://blog.julianmichael.org/2020/07/23/to-dissect-an-octopus.html.

[4] Sydney Lamb, Pathways of the Brain, John Benjamins, 1999. See also Lamb’s most recent account of his language model, Sydney M. Lamb, Linguistic Structure: A Plausible Theory, Language Under Discussion, 2016, 4(1): 1–37. https://journals.helsinki.fi/lud/article/view/229.

[5] Peter Gärdenfors, Conceptual Spaces: The Geometry of Thought, MIT Press, 2000.

[6] Peter Gärdenfors, The Geometry of Meaning: Semantics Based on Conceptual Spaces, MIT Press, 2014.

[7] Peter Gärdenfors, An Epigenetic Approach to Semantic Categories, IEEE Transactions on Cognitive and Developmental Systems, 12(2), June 2020, 139–147. DOI: 10.1109/TCDS.2018.2833387 (sci-hub link, https://sci-hub.tw/10.1109/TCDS.2018.2833387).