Now I’m going to play around with it in Photoshop, which allows you to apply filters to an image and then, if you wish, to blend the filtered image with the unfiltered image. In this case I’m using the Wave option in the Distort filter. The next two photos show what happens when I use two different blending options (called fading in Photoshop):

To my eye, in each case the filtered version of the image blends rather well with the original. I could have made the filtered version less obtrusive or more so. In these cases I made it strong enough that you really had to notice it but not so strong that it overwhelmed the original image.
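For readers who want to try something similar outside Photoshop, here is a minimal sketch of the filter-then-blend idea. It is not Photoshop's actual Wave or Fade implementation: the function names `wave_distort` and `fade`, the row-shifting approach, and the parameters `amplitude`, `wavelength`, and `opacity` are all assumptions made for illustration, and the blend is a plain linear mix.

```python
import numpy as np

def wave_distort(image, amplitude=5.0, wavelength=40.0):
    """Shift each row sideways by a sine of its row index (hypothetical stand-in
    for a wave filter; pixels wrap around at the edges)."""
    height = image.shape[0]
    rows = np.arange(height)
    shifts = np.round(amplitude * np.sin(2 * np.pi * rows / wavelength)).astype(int)
    out = np.empty_like(image)
    for y in range(height):
        out[y] = np.roll(image[y], shifts[y], axis=0)
    return out

def fade(original, filtered, opacity=0.5):
    """Linear blend: opacity 0 returns the original, opacity 1 the filtered image."""
    return (1.0 - opacity) * original.astype(float) + opacity * filtered.astype(float)

# A random grayscale array stands in for a photo here.
rng = np.random.default_rng(0)
photo = rng.random((64, 64))
filtered = wave_distort(photo)
blended = fade(photo, filtered, opacity=0.4)
```

Raising `opacity` makes the filtered version more obtrusive and lowering it lets the original dominate, which is roughly the trade-off described above.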

Here’s another rather different image, one no sensible photographer would shoot:

The setting sun is low in the sky and reflecting strongly off a glass-encased building, so strongly that the camera really can’t deal with it. Also, I’m shooting through the branches of a small tree or bush nearby and they show up as a misshapen dark area in the left half of the shot. I like to take such shots to see what I can pull out of them.

Here’s the same image as filtered by the Wave option in the Distort filter. In this case I used a square wave rather than the sine wave I used in the previous examples:

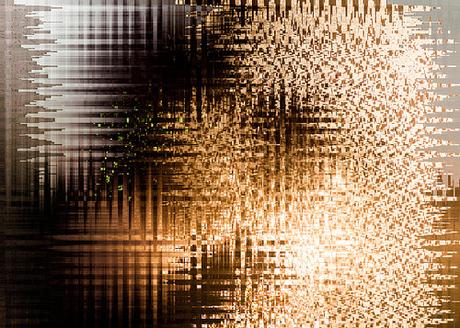

Now I blend the filtered version with the original:

As I’ve been experimenting with this over the last couple of weeks, I’ve been thinking that when the blends are harmonious the filtered version must be interacting with the visual system in a way that runs parallel with what the visual system is doing when processing the original image. All the filter does is magnify something that’s already going on.

Now, take a look at an article in Google’s research blog, Inceptionism: Going Deeper into Neural Networks, by Alexander Mordvintsev, Christopher Olah, and Mike Tyka. I’m not going to explain the article because, frankly, I’ve not read it yet. But here’s a couple of paragraphs early in the article:

One of the challenges of neural networks is understanding what exactly goes on at each layer. We know that after training, each layer progressively extracts higher and higher-level features of the image, until the final layer essentially makes a decision on what the image shows. For example, the first layer maybe looks for edges or corners. Intermediate layers interpret the basic features to look for overall shapes or components, like a door or a leaf. The final few layers assemble those into complete interpretations—these neurons activate in response to very complex things such as entire buildings or trees.

One way to visualize what goes on is to turn the network upside down and ask it to enhance an input image in such a way as to elicit a particular interpretation. Say you want to know what sort of image would result in “Banana.” Start with an image full of random noise, then gradually tweak the image towards what the neural net considers a banana (see related work in [1], [2], [3], [4]). By itself, that doesn’t work very well, but it does if we impose a prior constraint that the image should have similar statistics to natural images, such as neighboring pixels needing to be correlated.

OK, so we’re looking at what’s going on in the inner layers of neural networks for visual processing. And if you look at some of their examples, they look a bit like my photos that have been filtered and then blended. And that’s why I’ll get around to reading that article one of these days.
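The procedure in that quoted passage (start from noise, nudge the image toward what the network scores highly, and keep neighboring pixels correlated) can be sketched in a few lines. This is only a toy illustration under loud assumptions: a fixed random weight map `weights` stands in for the neural network's "banana" score, the "natural image" prior is just a penalty on differences between neighboring pixels, and the names `roughness`, `objective`, and `enhance` are hypothetical. It is not the method or code from the Google article.

```python
import numpy as np

def roughness(img):
    """Sum of squared differences between neighboring pixels."""
    dx = np.diff(img, axis=1)
    dy = np.diff(img, axis=0)
    return float((dx ** 2).sum() + (dy ** 2).sum())

def roughness_grad(img):
    """Gradient of roughness() with respect to each pixel."""
    grad = np.zeros_like(img)
    dx = np.diff(img, axis=1)
    dy = np.diff(img, axis=0)
    grad[:, 1:] += 2 * dx
    grad[:, :-1] -= 2 * dx
    grad[1:, :] += 2 * dy
    grad[:-1, :] -= 2 * dy
    return grad

def objective(img, weights, prior_strength):
    """Toy class score (a weighted sum of pixels) minus the smoothness penalty."""
    return float((weights * img).sum()) - prior_strength * roughness(img)

def enhance(img, weights, prior_strength=0.5, step=0.05, n_steps=200):
    """Gradient ascent on objective(), keeping pixel values in [0, 1]."""
    img = img.copy()
    for _ in range(n_steps):
        grad = weights - prior_strength * roughness_grad(img)
        img = np.clip(img + step * grad, 0.0, 1.0)
    return img

rng = np.random.default_rng(0)
weights = rng.normal(size=(16, 16))   # assumed stand-in for a trained network
noise = rng.random((16, 16))          # the starting "image full of random noise"
result = enhance(noise, weights)
```

Setting `prior_strength` to zero drops the smoothness constraint, which gives a feel for why the quoted passage says the unconstrained version "doesn’t work very well."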

* * * * *

Now, by way of contrast, here’s a different filtered version of the second image.

I used a high-amplitude sine wave filter. And here’s two blended versions: