Last year I posted a brief tutorial explaining how to load a custom corpus into the Natural Language Toolkit. The post still gets frequent hits from search engines, and I’ve received a handful of emails asking for further explanation. (The NLTK discussion forums remain tricky to navigate, and *Natural Language Processing with Python* is not very clear about this basic but vital step; Chapter 3 covers it only partially and in passing.)

Here’s a more detailed description of the process, as well as information about preparing the corpus for analysis.

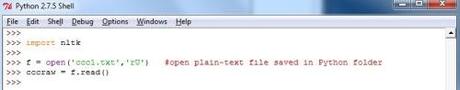

1. In the Python IDLE window, import the Natural Language Toolkit. Then open the corpus file and read its contents in as raw text. The corpus must be saved in a plain text format. The easiest setup is to save the plain text file in the main Python folder rather than under Documents, so that its path looks something like `c:\Python27\corpus.txt` and IDLE can open it by name. A file saved elsewhere can still be opened, but you will need to give its full path.

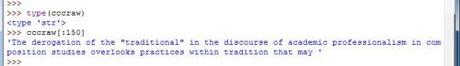

Using the `type()` function, we can see that at this point the loaded corpus is simply a string of raw text (`str`), exactly as it exists in the .txt file itself.
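Here is a minimal sketch of step 1, written for Python 3 (the screenshots use Python 2.7, where `type()` reports `<type 'str'>` instead of `<class 'str'>`). To keep it self-contained, it first writes a tiny hypothetical sample corpus to a temporary folder; with a real corpus you would skip that part and point `corpus_path` at your own file. It assumes the file is UTF-8 encoded, so change `encoding` if yours was saved differently.

```python
import os
import tempfile

# Demo only: write a tiny hypothetical sample corpus to a temporary folder.
# With a real corpus, skip this and set corpus_path to your file,
# e.g. r"c:\Python27\corpus.txt".
sample = "Rhetoric and composition. The rhetoric of the abstract, 1987."
corpus_dir = tempfile.mkdtemp()
corpus_path = os.path.join(corpus_dir, "corpus.txt")
with open(corpus_path, "w", encoding="utf-8") as f:
    f.write(sample)

# Read the whole file into one string: this is the "raw" text.
# Assumption: the file is UTF-8 encoded.
with open(corpus_path, encoding="utf-8") as f:
    cccraw = f.read()

print(type(cccraw))  # <class 'str'>
print(cccraw[:40])   # first 40 characters of the raw string
```

A bare file name such as `open("corpus.txt")` is resolved against the current working directory, which you can check with `os.getcwd()`. That is why a file saved in the folder IDLE starts in can be opened without a path, while a file anywhere else needs its full path.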

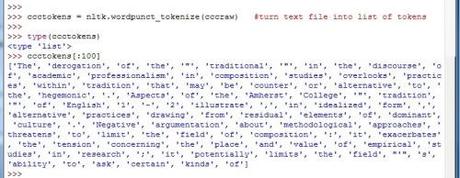

2. The next step is to ‘tokenize’ the raw text string. Tokenization splits the raw text into tokens: each word, number, symbol, and punctuation mark becomes its own item in a long list. The line `ccctokens[:100]` displays the first 100 items of the tokenized corpus. Compare this list with the raw string shown above by `cccraw[:150]`.

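Tokenization is an essential step, because most NLTK analyses expect a list of tokens rather than one long string. Run them on the raw string and Python treats each character as an item: `len(cccraw)` counts characters, not words, and a frequency count built from the raw string tallies letters instead of words.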
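The tokenizing call itself appears only in the screenshot. In NLTK you would typically use `nltk.word_tokenize(cccraw)`, which relies on NLTK’s downloadable Punkt models, or `nltk.wordpunct_tokenize(cccraw)`. The sketch below is a stand-in, not the post’s code: it reproduces `wordpunct_tokenize`’s regular expression with the standard library so it runs without NLTK installed, and it uses a hypothetical one-sentence sample in place of the real corpus. It also shows the character-versus-token difference described above.

```python
import re

# Same pattern NLTK's WordPunctTokenizer uses: a run of word characters,
# or a run of characters that are neither word characters nor whitespace.
TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]+")

def tokenize(raw):
    """Split a raw string into a list of word, number, and punctuation tokens."""
    return TOKEN_PATTERN.findall(raw)

# Hypothetical sample standing in for the real corpus.
cccraw = "Rhetoric and composition. The rhetoric of the abstract, 1987."
ccctokens = tokenize(cccraw)

print(ccctokens[:5])  # ['Rhetoric', 'and', 'composition', '.', 'The']

# len() on the raw string counts characters; on the token list it counts tokens.
print(len(cccraw), len(ccctokens))
```

The two NLTK tokenizers differ in the details: `word_tokenize` splits “don’t” into `do` and `n't`, whereas this pattern (like `wordpunct_tokenize`) gives `don`, `'`, and `t`. Either way, check a slice such as `ccctokens[:100]` to confirm the output suits your research question.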

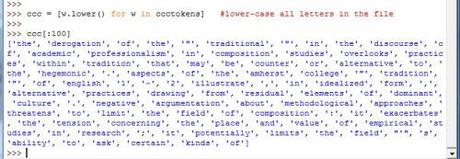

3. Next, convert all the words in the tokenized corpus to lowercase. This step ensures that the NLTK does not count the same word as two different tokens simply because of capitalization. Without lowercasing the text, the NLTK will, e.g., count ‘rhetoric’ and ‘Rhetoric’ as different items. Some research questions will need to preserve this difference, but otherwise, skipping this step can muddy your results.
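A minimal sketch of the lowercasing step, assuming the tokens are already in a Python list. The name `cccwords` is hypothetical, and `collections.Counter` stands in for NLTK’s `FreqDist` (which, in NLTK 3, is a subclass of `Counter`) so the example runs on the standard library alone.

```python
from collections import Counter

# Hypothetical token list, as produced by the tokenizing step.
ccctokens = ['Rhetoric', 'and', 'composition', '.', 'The',
             'rhetoric', 'of', 'the', 'abstract', ',', '1987', '.']

# Lowercase every token; punctuation and numbers pass through unchanged.
cccwords = [token.lower() for token in ccctokens]

before = Counter(ccctokens)
after = Counter(cccwords)

print(before["Rhetoric"], before["rhetoric"])  # 1 1
print(after["rhetoric"])                       # 2
```

Before lowercasing, the two spellings are tallied separately; afterwards they merge into a single count (as do ‘The’ and ‘the’). To use NLTK’s own analysis methods, such as `concordance()`, you can wrap the lowercased list with `nltk.Text(cccwords)`.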

Now the corpus is ready to be analyzed with all the power of the Natural Language Toolkit. The example corpus above is a collection of abstracts from the journal *College Composition and Communication*. The variable names used in these examples (`ccc`, `ccctokens`, `cccraw`) can of course be changed to whatever you like, but it’s a good idea to keep track of them on paper so that you don’t have to keep scrolling up and down in the IDLE window.