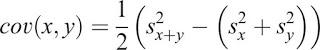

This post will review the nonlinear covariation analysis developed by Müller & Sternad (2003). This purports to address several issues with UCM.

Movements are never exactly the same twice. There is noise in the system at all times and so any given execution will vary slightly from any other. The key insight of the motor abundance hypothesis is that there are multiple ways to do any task and so not all variability is equal. Only some of this variability will impact performance relative to a task goal; the rest will not stop you from achieving that goal. This insight is formalised in UCM and optimal control theory by only having motor control processes deployed to control the former variability. The latter is simply ignored (the manifold defining this subset of possible states is left 'uncontrolled').

This works because variability in one degree of freedom can be compensated for using another. If, as I reach for my coffee, my elbow begins to swing out, my wrist and fingers can bend in the other direction so I still reach the cup. If this happens, the overall movement trajectory remains on the manifold and that elbow variation can be left alone. For Latash, this compensation is the signature of a synergy in action (in fact, it's his strict definition of a synergy).

Müller & Sternad note, however, that all this work has a problem. The covariation that occurs in a synergy is often nonlinear, and often involves more than two components. However, the tools deployed so far involve linear covariation analysis using linearised data, on pairs of components. Linearisation makes the data unsuitable for covariation analysis (it's no longer on an interval scale), while working with pairs limits the power of the techniques. Their solution is a randomisation method to calculate covariation across multiple nonlinear relations. The basic form is this equation:

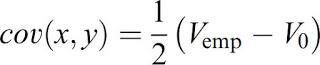

V(emp) is the variance you can measure empirically; V0 is the variance you'd expect if covariation was zero. They then implement a slightly more generalised version, but these two equations show the underlying structure of the analysis quite nicely. If you can get those numbers, you can compute the covariation between multiple nonlinearly related variables and quantify any synergies present in your data.

V(emp) is easy; you literally just compute the observed variance. But how can you possibly figure out V0?

**Randomisation Methods**

M&S then walk through a clear example to show how to estimate V0 using randomisation methods.

Imagine an arm whose job it is to land on a target at (20,40). There are three joint angles to worry about: α, β, and γ. M&S produced 10,000 normally distributed random angles for each joint, and found the 100 triplets that produced the most accurate landings. By definition, there should be some covariation going on here - the end result was success. You can now measure V(emp).

You can then estimate V0 by randomisation. Make a table with the triplets for the 100 successful reaches listed, and then randomly and independently mix the order of the columns for α, β, and γ. What you then have is three columns of data with most of the covariation removed, but with the same mean and variance as the true data set. Compute your measure of variance and that's what you'd expect with no covariation. They also repeat this process 100 times to get a sense of the distribution of the variance measure in randomised data sets, because of course a given randomisation might accidentally preserve or create some covariation of its own. The mean of this distribution is your final estimate of V0, and you can compute the covariation and test it for difference from 0. They then generalise this method and add a generalised correlation coefficient R.

**Some Notes**


- M&S demonstrate the randomisation on a subset of the trials. The best thing to do is to run it on the whole data set, unless that set is so big that the procedure begins to take a ridiculous amount of time, in which case a generous sample of the data can be used.

- You can assess variance using anything you like; standard deviations, or the bivariate variable error used in the example. All you need is a valid way to combine the data into a single measure.

- This procedure lets you not only compute all the necessary elements, but estimate your certainty about them too.

- I do not yet have any idea if this procedure can be applied to data prior to or as part of a UCM analysis or if that even makes sense. The authors don't do it, although this might just be because working to integrate methods is not something we're good at.

- If I get a chance I will implement their example in Matlab and post the code here. It was excellently clear!
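In the meantime, here is a rough Python (not Matlab) sketch of the randomisation procedure as described in this post. It is not M&S's code, and several details are assumptions made purely for illustration because the post doesn't give them: the arm is treated as a planar three-segment chain, and the segment lengths (`LENGTHS`), the angle means (`ANGLE_MEAN`) and the angle standard deviation (`ANGLE_SD`) are hypothetical values chosen so the arm can reach (20,40). The variance measure is bivariate variable error, taken here to be the root-mean-square distance of the landing points from their own centroid. The last line reports the simple difference V0 - V(emp) only as a rough comparison; the actual covariation measure is the one in the equation shown earlier.

```python
import numpy as np

rng = np.random.default_rng(0)

# ASSUMPTIONS (hypothetical values, not taken from the paper or the post)
LENGTHS = np.array([30.0, 25.0, 10.0])         # segment lengths
ANGLE_MEAN = np.radians([90.0, -87.0, 117.0])  # mean of alpha, beta, gamma
ANGLE_SD = np.radians(10.0)                    # same SD for every joint
TARGET = np.array([20.0, 40.0])                # target from the example


def endpoint(angles):
    """Landing positions of a planar 3-joint arm; angles has shape (n, 3)."""
    absolute = np.cumsum(angles, axis=1)  # orientation of each segment
    x = (LENGTHS * np.cos(absolute)).sum(axis=1)
    y = (LENGTHS * np.sin(absolute)).sum(axis=1)
    return np.column_stack([x, y])


def bivariate_variable_error(xy):
    """RMS distance of the points from their centroid."""
    return np.sqrt(((xy - xy.mean(axis=0)) ** 2).sum(axis=1).mean())


def shuffle_columns(angles, rng):
    """Permute each joint's column independently to break covariation."""
    return np.column_stack(
        [rng.permutation(angles[:, j]) for j in range(angles.shape[1])]
    )


# 10,000 random triplets; keep the 100 most accurate landings
samples = rng.normal(ANGLE_MEAN, ANGLE_SD, size=(10_000, 3))
errors = np.linalg.norm(endpoint(samples) - TARGET, axis=1)
best = samples[np.argsort(errors)[:100]]

# Empirical variance of the successful reaches
v_emp = bivariate_variable_error(endpoint(best))

# Repeat the randomisation 100 times; V0 is the mean of the distribution
v0_samples = np.array(
    [
        bivariate_variable_error(endpoint(shuffle_columns(best, rng)))
        for _ in range(100)
    ]
)
v0 = v0_samples.mean()

print(f"V(emp) = {v_emp:.3f}")
print(f"V0     = {v0:.3f} (SD across randomisations = {v0_samples.std():.3f})")
print(f"V0 - V(emp) = {v0 - v_emp:.3f}")
```

Because each column is only reordered, every joint keeps exactly the same set of values (and so the same mean and variance) as in the successful reaches; only the pairing across joints changes. To use a different variance measure, swap `bivariate_variable_error` for any function that reduces the landings to a single number.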