Each year IBM offers five technologies that its scientists believe will change the world within the next five years. They call it "The 5 in 5" forecast. "This year IBM presents The 5 in 5 in five sensory categories, through innovations that will touch our lives and see us into the future." ["The 5 in 5," IBM, December 2012] Bernard Meyerson, IBM's Chief Innovation Officer, writes, "New technologies make it possible for machines to mimic and augment the senses. Today, we see the beginnings of sensing machines in self-parking cars and biometric security–and the future is wide open. This year, we focused the IBM Next 5 in 5, our 2012 forecast of inventions that will change your world in the next five years, on how computers will mimic the senses: Touch - You will be able to reach out and touch through your phone; Sight - A pixel will be worth a thousand words; Hearing - Computers will hear what matters; Taste - Digital taste buds will help you to eat healthier; [and] Smell - Computers will have a sense of smell." ["The IBM Next 5 in 5: Our 2012 Forecast of Inventions that Will Change the World Within Five Years," Building a Smarter Planet, 17 December 2012] The following video provides a quick overview of how computers are going to obtain sensing capabilities to improve our lives in the years ahead.

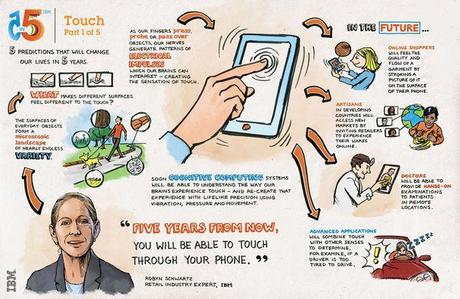

The first sense discussed by IBM is touch. The 5 in 5 website states:

"In the 1970s, when a telephone company encouraged us to 'reach out and touch someone,' it had no idea that a few decades later that could be more than a metaphor. Infrared and haptic technologies will enable a smart phone's touchscreen technology and vibration capabilities to simulate the physical sensation of touching something. So you could experience the silkiness of that catalog's Egyptian cotton sheets instead of just relying on some copywriter to convince you."

The site also provides the following infographic and video to supplement this description.

Although Ms. Schwartz stresses the potential of touchscreen technologies for improving online shopping experiences, these technologies have truly life-changing potential in the medical field.

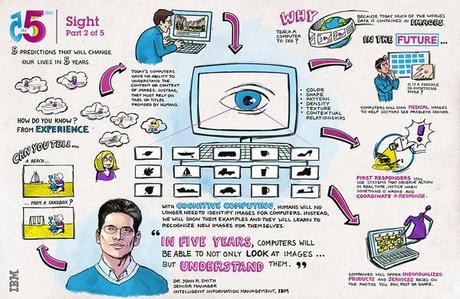

The next sense discussed on The 5 in 5 website is sight. It states:

"Recognition systems can pinpoint a face in a crowd. In the future, computer vision might save a life by analyzing patterns to make sense of visuals in the context of big data. In industries as varied as healthcare, retail and agriculture, a system could gather information and detect anomalies specific to the task — such as spotting a tiny area of diseased tissue in an MRI and applying it to the patient's medical history for faster, more accurate diagnosis and treatment."

While this description focuses on the potential health benefits of recognition technologies, the security uses are also obvious. My suspicion is that recognition technologies will come under very close scrutiny because of the potential privacy issues that will arise. The following infographic and video accompanied the description.

With Google's AI system having recently proven that a computer, by itself, can identify and understand (and even create) a picture of a cat, Smith's prediction that "in five years, computers will be able not only to look at images, but understand them" isn't much of a stretch.

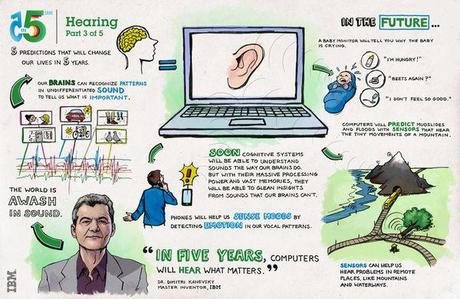

The next sense that IBM predicts computers will be capable of exploiting is hearing. The website states:

"Before the tree fell in the forest, did anyone hear it? Sensors that pick up sound patterns and frequency changes will be able to predict weakness in a bridge before it buckles, the deeper meaning of your baby's cry or, yes, a tree breaking down internally before it falls. By analyzing verbal traits and including multi-sensory information, machine hearing and speech recognition could even be sensitive enough to advance dialogue across languages and cultures."

Using sound to enhance our understanding of the world isn't new. Navies, for example, have been using sonar to detect submarines for decades. And law enforcement and military organizations have been using gunfire locator systems to help them pinpoint the location of individuals using firearms.

The time may well arrive when computers can help us learn what animals are saying to each other (and to us) by analyzing the sounds they make. I think of the Disney/Pixar Animation Studios film "Up" and the translation collar worn by the dogs. We may find out that chasing squirrels is all they really do have on their minds. Or we could all become Dr. Dolittles or animal whisperers. But, as Dr. Kanevsky points out, there are better uses for such technologies.

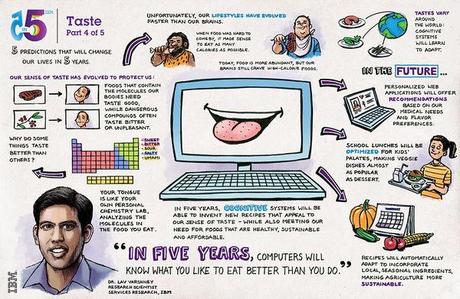

The next sense that computers are likely to develop is taste. The website states:

"The challenge of providing food — whether it's for impoverished populations, people on restricted diets or picky kids—is in finding a way to meet both nutritional needs and personal preferences. In the works: a way to compute 'perfect' meals using an algorithmic recipe of favorite flavors and optimal nutrition. No more need for substitute foods when you can have a personalized menu that satisfies both the calorie count and the palate."

Every parent who has ever had a child with a finicky appetite can appreciate how beneficial such a capability could be. Taste is such a personalized thing that no program will ever be able to provide a school menu that will satisfy every child's palate; but, it can probably help improve the diet for most students. The following infographic and video provide additional insights.

The tastes that appeal to us change over time. Sometimes we just grow up and learn to enjoy different flavors, and sometimes we discover new flavors that please our palates. To learn more about what flavors are expected to be trending this coming year, read my post entitled McCormick® Flavor Forecast® 2013.

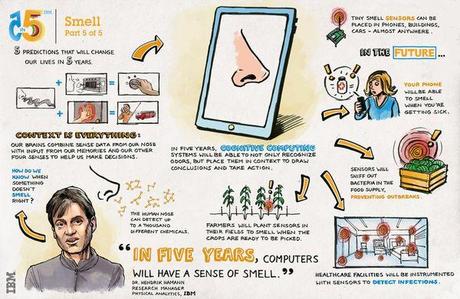

Our sense of taste, of course, is closely connected to our sense of smell. Smell is the final sense that the IBM website discusses. It states:

"When you call a friend to say how you're doing, your phone will know the full story. Soon, sensors will detect and distinguish odors: a chemical, a biomarker, even molecules in the breath that affect personal health. The same smell technology, combined with deep learning systems, could troubleshoot operating-room hygiene, crops' soil conditions or a city's sanitation system before the human nose knows there's a problem."

Clearly, this is another very sensitive area. While it may be helpful to call your doctor when you are sick and let your phone help him or her diagnose the problem, privacy issues are bound to create lots of legal challenges to such technologies. A husband calling his wife from the local bar may not want his home phone to tell his wife how much he has been drinking, but, for all our sakes, the smell of his breath should inform his car not to let him drive. The following infographic and video explain more.

IBM's Meyerson concludes, "In the era of cognitive systems, humans and machines will collaborate to produce better results–each bringing their own superior skills to the partnership. The machines will be more rational and analytic. We’ll provide the judgment, empathy, moral compass and creativity. Indeed, in my view, cognitive systems will help us overcome the 'bandwidth' limits of the individual human." He says that those limits include:

- "Limits to our ability to deal with complexity. We have difficulty processing large amounts of information that comes at us rapidly. We also have problems understanding the interactions of the elements of large systems–such as all of the moving parts in the global economy. With cognitive computing, we will be able to harvest insights from huge quantities of data, understand complex situations, make accurate predictions about the future, and anticipate the unintended consequences of actions.

- "Limits to our expertise. This is especially important when we're trying to address problems that cut across intellectual and industrial domains. With the help of cognitive systems, we will be able to see the big picture and make better decisions. These systems can learn and tell us things we didn't even ask for.

- "Limits to our objectivity. We all possess biases based on our personal experiences, our egos and emotions, and our intuition about what works and what doesn't. Cognitive systems can help remove our blinders and make it possible for us to have clearer understandings of the situations we're in.

- "Limits to our senses. We can only take in and make sense of so much stuff. With cognitive systems, computer sensors teamed with analytics engines will vastly extend our ability to gather and process sense-based information."

Meyerson indicates that he doesn't "believe that cognitive systems will usurp the role of human thinkers." Like many other futurists, he believes "they'll make us more capable and more successful – and, hopefully, better stewards of the planet." I agree with him, but there are a lot of issues we need to work out before all these capabilities come together.