The ecological approach provides a different job description for the brain (Charles, Golonka & Wilson, 2014). We are embedded in environments rich in information that specifies a wide variety of behaviourally relevant properties. Some of that information is prospective: it is available now, but it is about something in the future. Two examples are the information variables you can use to catch a fly ball; both are properties of the present moment that, when used, allow you to achieve a behaviour in the future (specifically, to be in the right place at the right time to catch that ball). Another example is tau, along with the various other variables that specify time-to-collision.
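Tau is the ratio of an object's current optical size (the visual angle it subtends) to its current rate of optical expansion. As a minimal sketch, the Python snippet below estimates tau from two closely spaced "frames" of a hypothetical 10 cm object approaching at a constant speed, and shows that this purely optical ratio approximately equals the time remaining until collision. The function names, object size, distance and speed are our own illustrative assumptions, not values from the paper.

```python
import math


def visual_angle(diameter, distance):
    """Visual angle (radians) subtended by an object of a given diameter at a given distance."""
    return 2.0 * math.atan(diameter / (2.0 * distance))


def optical_tau(diameter, distance, speed, dt=1e-3):
    """Estimate tau = theta / (d theta / dt) from two closely spaced 'frames'.

    Distance and speed are used only to generate the two frames; the ratio
    itself uses nothing but optical quantities (angle and its rate of change).
    """
    theta_prev = visual_angle(diameter, distance + speed * dt)  # frame dt seconds ago
    theta_now = visual_angle(diameter, distance)                # current frame
    theta_dot = (theta_now - theta_prev) / dt                   # rate of optical expansion
    return theta_now / theta_dot


if __name__ == "__main__":
    # Hypothetical example: a 10 cm ball, 2 m away, approaching at a constant 1 m/s.
    # The true time-to-collision is 2.0 / 1.0 = 2 s; tau comes out very close to that.
    print(optical_tau(0.1, 2.0, 1.0))
```

Tau matches time-to-collision only for a constant approach speed (and only approximately at larger visual angles). For an object accelerating towards you it ignores the acceleration, and so overestimates the time remaining.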

This post reviews a paper (van der Meer, Svantesson & van der Weel, 2012) that measured visual evoked potentials in infants longitudinally at two ages, using stimuli that 'loomed' (i.e. looked like they were going to collide with the infants). The data show that the infant brains were not learning to predict the world. Instead, neural activity became more tightly coupled to information about the time-to-collision. We learn to perceive, not predict, the world.

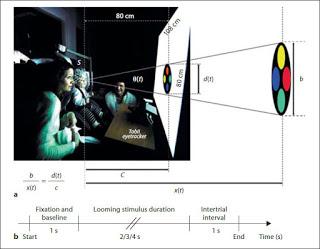

van der Meer et al. (2012) conducted a longitudinal EEG study of infants viewing looming virtual objects. The infants, tested at 5-6 months and again at 12-13 months, viewed visual stimuli that specified an object approaching all the way to collision under three different accelerations. The authors identified when the stimuli produced visual evoked potentials (VEPs), i.e. neural activity related to the stimuli, and measured when the VEP peak occurred relative to collision time and how long that peak lasted.

Figure 1. Babies in high density EEG rigs watching virtual objects fly at their faces, because science.

There were two age-related differences in these dependent variables. First, the peak VEP occurred closer to the actual collision time in the older infants. Second, the VEP duration was shorter in the older infants. In other words, the neural activity evoked by the looming stimulus became more accurate and less variable with respect to the timing of the collision. (This also makes the point that understanding what the brain is doing requires knowing what information it is interacting with: interpreting neural data requires a theory, and changing the theory changes the interpretation.)

A looming stimulus does not just create information about time-to-contact. The object also moves with some speed and changes apparent size (it gets bigger, i.e. it looms!). In a separate analysis, the authors identified when you would expect VEPs if the infants were responding to visual velocity information, visual angle information or actual time-to-contact information. At 5-6 months, 4 infants were responding to velocity and 6 were using time. By 12-13 months, 9 out of the 10 were responding to time, i.e. they had shifted to the better variable. (The infant who was using velocity at 12-13 months had actually been using time at the younger age; the authors note this infant was a late roller and had several months less crawling experience than the others.) These data connect to the literature on the use of non-specifying variables.
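The logic of that separate analysis can be illustrated with a small simulation. This is our own hypothetical reconstruction, not the authors' actual method: we assume a response is triggered when some optical variable reaches a fixed threshold, simulate an object accelerating from rest towards the eye under three accelerations, and ask how long before collision each candidate strategy would fire. The object size, starting distance, accelerations and all three thresholds are invented for illustration.

```python
import math

# Hypothetical stimulus parameters, chosen for illustration only
DIAMETER = 0.1     # object diameter (m)
START_DIST = 5.0   # starting distance from the eye (m)
DT = 0.001         # sampling interval (s)


def simulate(accel):
    """Sample (time-to-collision, visual angle, angular velocity) for an object
    that starts at rest and accelerates uniformly towards the eye."""
    collision_time = math.sqrt(2.0 * START_DIST / accel)
    samples = []
    prev_theta = None
    for i in range(int(collision_time / DT)):
        t = i * DT
        dist = START_DIST - 0.5 * accel * t * t
        theta = 2.0 * math.atan(DIAMETER / (2.0 * dist))
        # Backward-difference estimate of the rate of optical expansion
        theta_dot = 0.0 if prev_theta is None else (theta - prev_theta) / DT
        samples.append((collision_time - t, theta, theta_dot))
        prev_theta = theta
    return samples


# Hypothetical trigger thresholds for the three candidate variables
STRATEGIES = {
    "visual angle": lambda ttc, theta, theta_dot: theta >= 0.1,          # rad
    "angular velocity": lambda ttc, theta, theta_dot: theta_dot >= 0.2,  # rad/s
    "time-to-contact": lambda ttc, theta, theta_dot: ttc <= 0.5,         # s
}


def response_ttc(samples, trigger):
    """Time-to-collision at the first sample where the trigger fires, or None."""
    for ttc, theta, theta_dot in samples:
        if trigger(ttc, theta, theta_dot):
            return ttc
    return None


def predicted_responses(accels):
    """For each strategy, how long before collision it predicts a response in each condition."""
    return {
        name: [response_ttc(simulate(a), trigger) for a in accels]
        for name, trigger in STRATEGIES.items()
    }


if __name__ == "__main__":
    for name, ttcs in predicted_responses([2.5, 5.0, 10.0]).items():
        print(f"{name:>16}: " + ", ".join(f"{x:.3f} s" for x in ttcs))
```

Under these assumptions, only the time-to-contact strategy fires at the same time before collision in every acceleration condition; the visual angle and velocity strategies fire earlier for slow approaches and later for fast ones. That difference in timing across conditions is what makes the strategies distinguishable from the VEP data.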

To summarise: infant brains were not learning to better predict the world. They were becoming more attuned to information about time-to-collision, and the neural activity was getting better at preserving the temporal structure of that information*. We learn to perceive, not predict, the world.

References

Charles, E. P., Wilson, A. D., & Golonka, S. (2014). The most important thing neuropragmatism can do: Providing an alternative to ‘cognitive’ neuroscience. In J. Shook & T. Solymosi (Eds.), Pragmatist Neurophilosophy: American Philosophy and the Brain. Bloomsbury Academic.

van der Meer, A. L., Svantesson, M., & van der Weel, F. R. (2012). Longitudinal study of looming in infants with high-density EEG. Developmental Neuroscience, 34(6), 488-501.

*If you think that sounds like a neural representation, you're going to laugh your ass off at our next paper, currently under review :) Stay tuned!